Article Structure

Abstract

Negation words, such as no and not, play a fundamental role in modifying sentiment of textual expressions.

Introduction

Morante and Sporleder (2012) define negation to be “a grammatical category that allows the changing of the truth value of a proposition”.

Related work

Automatic sentiment analysis. The expression of sentiment is an integral component of human language.

Negation models based on heuristics

We begin with previously proposed methods that leverage heuristics to model the behavior of negators.

Semantics-enriched modeling

Negators can interact with arguments in complex ways.

Experiment setup

Data. As described earlier, the Stanford Sentiment Treebank (Socher et al., 2013) has manually annotated, real-valued sentiment values for all phrases in parse trees.

Experimental results

Overall regression performance. Table 1 shows the overall fitting performance of all models.

Conclusions

Negation plays a fundamental role in modifying sentiment.

Topics

neural network

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 1, “Abstract”)

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 2, “Introduction”)

- The more recent work of (Socher et al., 2012; Socher et al., 2013) proposed models based on recursive neural networks that do not rely on any heuristic rules. (Page 3, “Related work”)

- In principle, neural networks are able to fit very complicated functions (Mitchell, 1997); in this paper, we adapt the state-of-the-art approach described in (Socher et al., 2013) to help understand the behavior of negators specifically. (Page 3, “Related work”)

- A recursive neural tensor network (RNTN) is a specific form of feed-forward neural network that operates over a syntactic (phrase-structure) parse tree to conduct compositional sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- A major difference between the RNTN and the conventional recursive neural network (RNN) (Socher et al., 2012) is the use of the tensor V to directly capture the multiplicative interaction of the two input vectors, although the matrix W implicitly captures the nonlinear interaction between the input vectors. (Page 4, “Semantics-enriched modeling”)

- This is actually an interesting place to extend the current recursive neural network to consider extrinsic knowledge. (Page 5, “Semantics-enriched modeling”)

- Furthermore, modeling the syntax and semantics with the state-of-the-art recursive neural network (models 7 and 8) can dramatically improve the performance over model 6. (Page 7, “Experimental results”)

- Note that the two neural network-based models incorporate the syntax and semantics by representing each node with a vector. (Page 7, “Experimental results”)

- Note that this is a special case of what the neural network-based models can model. (Page 7, “Experimental results”)

- Modeling syntax and semantics. We have seen above that modeling syntax and semantics through state-of-the-art neural networks helps improve the fitting performance. (Page 8, “Experimental results”)
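The difference between the tensor V and the matrix W described above can be made concrete with a short composition sketch. It assumes the RNTN form from Socher et al. (2013), p = tanh(xᵀV^[1:d]x + Wx) with x = [a; b] the concatenated child vectors; the function name `rntn_compose`, the optional bias, and the (d, 2d, 2d) layout of `V` are illustrative assumptions, not the authors' code.

```python
import numpy as np

def rntn_compose(a, b, V, W, bias=None):
    """Compose two child vectors into a parent vector, RNTN style (sketch).

    a, b : child vectors, each of shape (d,)
    V    : tensor of shape (d, 2d, 2d); V[k] is the k-th bilinear form (assumed layout)
    W    : matrix of shape (d, 2d)
    bias : optional vector of shape (d,) (an assumption; often folded into W)
    """
    x = np.concatenate([a, b])                      # [a; b], shape (2d,)
    # Multiplicative term: x^T V[k] x for every output dimension k.
    tensor_term = np.einsum("i,kij,j->k", x, V, x)
    # Linear term, as in a conventional recursive neural network.
    linear_term = W @ x
    if bias is not None:
        linear_term = linear_term + bias
    return np.tanh(tensor_term + linear_term)

# Usage with random parameters (d = 4); a trained model would learn V and W.
rng = np.random.default_rng(0)
d = 4
a, b = rng.standard_normal(d), rng.standard_normal(d)
V = 0.01 * rng.standard_normal((d, 2 * d, 2 * d))
W = 0.1 * rng.standard_normal((d, 2 * d))
parent = rntn_compose(a, b, V, W)
print(parent.shape)
```

Setting `V` to all zeros reduces the function to the conventional recursive neural network composition tanh(W[a; b]), which isolates what the tensor term contributes.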

See all papers in Proc. ACL 2014 that mention neural network.

treebank

- We use a sentiment treebank to show that these existing heuristics are poor estimators of sentiment.Page 1, “Abstract”

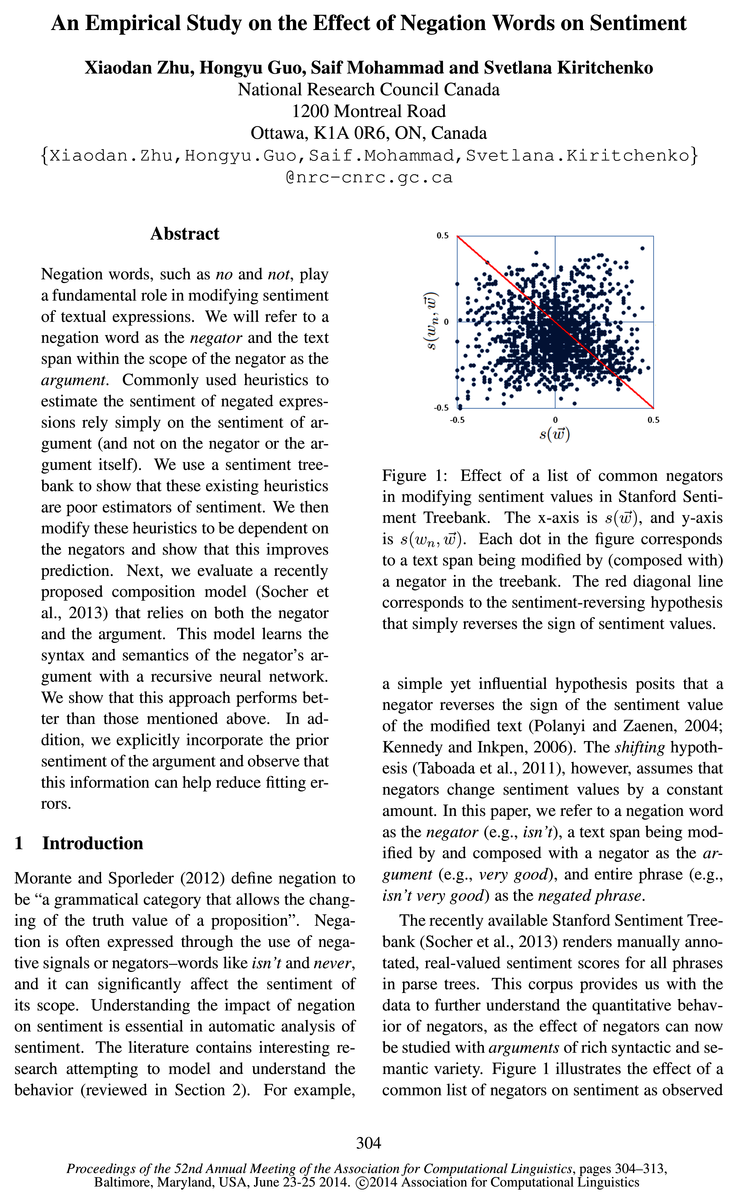

- Figure 1: Effect of a list of common negators in modifying sentiment values in Stanford Sentiment Treebank .Page 1, “Introduction”

- Each dot in the figure corresponds to a text span being modified by (composed with) a negator in the treebank .Page 1, “Introduction”

- The recently available Stanford Sentiment Treebank (Socher et al., 2013) renders manually annotated, real-valued sentiment scores for all phrases in parse trees.Page 1, “Introduction”

- on the Stanford Sentiment Treebank.1 Each dot in the figure corresponds to a negated phrase in the treebank .Page 2, “Introduction”

- Data As described earlier, the Stanford Sentiment Treebank (Socher et al., 2013) has manually annotated, real-valued sentiment values for all phrases in parse trees.Page 6, “Experiment setup”

- We search these negators in the Stanford Sentiment Treebank and normalize the same negators to a single form; e.g., “is n’t”, “isn’t”, and “is not” are all normalized to “is_not”.Page 6, “Experiment setup”

- Each occurrence of a negator and the phrase it is directly composed with in the treebank , i.e., (7,071,217), is considered a data point in our study.Page 6, “Experiment setup”

- Table 1: Mean absolute errors (MAE) of fitting different models to Stanford Sentiment Treebank .Page 7, “Experimental results”

- The figure includes five most frequently used negators found in the sentiment treebank .Page 8, “Experimental results”

- Below, we take a closer look at the fitting errors made at different depths of the sentiment treebank .Page 8, “Experimental results”
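The negator normalization step quoted above might look roughly like the following sketch. Only the “is_not” mapping comes from the text; the `do_not` and `does_not` rows, the table name `CANONICAL_FORMS`, and the regex-based matching are hypothetical illustrations, and the authors' actual negator list and procedure may differ.

```python
import re

# Hypothetical table: canonical negator -> surface variants.
# Only the "is_not" row is taken from the text; the others are illustrative.
CANONICAL_FORMS = {
    "is_not": ["is n't", "isn't", "is not"],
    "do_not": ["do n't", "don't", "do not"],
    "does_not": ["does n't", "doesn't", "does not"],
}

# Try longer variants first so that a shorter form never pre-empts a longer one.
_VARIANTS = sorted(
    ((variant, canon) for canon, forms in CANONICAL_FORMS.items() for variant in forms),
    key=lambda pair: len(pair[0]),
    reverse=True,
)
_LOOKUP = {variant: canon for variant, canon in _VARIANTS}
# (?<!\w) and (?!\w) stop matches inside longer words such as "this notion".
_PATTERN = re.compile(
    r"(?<!\w)(" + "|".join(re.escape(v) for v, _ in _VARIANTS) + r")(?!\w)",
    re.IGNORECASE,
)

def normalize_negators(text):
    """Rewrite every known negator variant in `text` to its canonical form."""
    text = text.replace("\u2019", "'")  # curly apostrophe -> straight apostrophe
    return _PATTERN.sub(lambda m: _LOOKUP[m.group(1).lower()], text)

print(normalize_negators("the plot is n't new , but it does not drag"))
# → the plot is_not new , but it does_not drag
```

Matching on word boundaries leaves a phrase such as “this notion” untouched even though it contains the character sequence “is not”.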

recursive

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 1, “Abstract”)

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 2, “Introduction”)

- The more recent work of (Socher et al., 2012; Socher et al., 2013) proposed models based on recursive neural networks that do not rely on any heuristic rules. (Page 3, “Related work”)

- For the former, we adopt the recursive neural tensor network (RNTN) proposed recently by Socher et al. (2013). (Page 4, “Semantics-enriched modeling”)

- 4.1 RNTN: Recursive neural tensor network (Page 4, “Semantics-enriched modeling”)

- A recursive neural tensor network (RNTN) is a specific form of feed-forward neural network that operates over a syntactic (phrase-structure) parse tree to conduct compositional sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- A major difference between the RNTN and the conventional recursive neural network (RNN) (Socher et al., 2012) is the use of the tensor V to directly capture the multiplicative interaction of the two input vectors, although the matrix W implicitly captures the nonlinear interaction between the input vectors. (Page 4, “Semantics-enriched modeling”)

- This is actually an interesting place to extend the current recursive neural network to consider extrinsic knowledge. (Page 5, “Semantics-enriched modeling”)

- Furthermore, modeling the syntax and semantics with the state-of-the-art recursive neural network (models 7 and 8) can dramatically improve the performance over model 6. (Page 7, “Experimental results”)

- We further make the models dependent on the text being modified by negators, by adapting a state-of-the-art recursive neural network to incorporate the syntax and semantics of the arguments; we find that this further reduces fitting errors. (Page 9, “Conclusions”)

recursive neural

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 1, “Abstract”)

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 2, “Introduction”)

- The more recent work of (Socher et al., 2012; Socher et al., 2013) proposed models based on recursive neural networks that do not rely on any heuristic rules. (Page 3, “Related work”)

- For the former, we adopt the recursive neural tensor network (RNTN) proposed recently by Socher et al. (2013). (Page 4, “Semantics-enriched modeling”)

- 4.1 RNTN: Recursive neural tensor network (Page 4, “Semantics-enriched modeling”)

- A recursive neural tensor network (RNTN) is a specific form of feed-forward neural network that operates over a syntactic (phrase-structure) parse tree to conduct compositional sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- A major difference between the RNTN and the conventional recursive neural network (RNN) (Socher et al., 2012) is the use of the tensor V to directly capture the multiplicative interaction of the two input vectors, although the matrix W implicitly captures the nonlinear interaction between the input vectors. (Page 4, “Semantics-enriched modeling”)

- This is actually an interesting place to extend the current recursive neural network to consider extrinsic knowledge. (Page 5, “Semantics-enriched modeling”)

- Furthermore, modeling the syntax and semantics with the state-of-the-art recursive neural network (models 7 and 8) can dramatically improve the performance over model 6. (Page 7, “Experimental results”)

- We further make the models dependent on the text being modified by negators, by adapting a state-of-the-art recursive neural network to incorporate the syntax and semantics of the arguments; we find that this further reduces fitting errors. (Page 9, “Conclusions”)

recursive neural network

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 1, “Abstract”)

- This model learns the syntax and semantics of the negator’s argument with a recursive neural network. (Page 2, “Introduction”)

- The more recent work of (Socher et al., 2012; Socher et al., 2013) proposed models based on recursive neural networks that do not rely on any heuristic rules. (Page 3, “Related work”)

- A major difference between the RNTN and the conventional recursive neural network (RNN) (Socher et al., 2012) is the use of the tensor V to directly capture the multiplicative interaction of the two input vectors, although the matrix W implicitly captures the nonlinear interaction between the input vectors. (Page 4, “Semantics-enriched modeling”)

- This is actually an interesting place to extend the current recursive neural network to consider extrinsic knowledge. (Page 5, “Semantics-enriched modeling”)

- Furthermore, modeling the syntax and semantics with the state-of-the-art recursive neural network (models 7 and 8) can dramatically improve the performance over model 6. (Page 7, “Experimental results”)

- We further make the models dependent on the text being modified by negators, by adapting a state-of-the-art recursive neural network to incorporate the syntax and semantics of the arguments; we find that this further reduces fitting errors. (Page 9, “Conclusions”)

sentiment analysis

- Automatic sentiment analysis. The expression of sentiment is an integral component of human language. (Page 2, “Related work”)

- Early work on automatic sentiment analysis includes the widely cited work of (Hatzivassiloglou and McKeown, 1997; Pang et al., 2002; Turney, 2002), among others. (Page 2, “Related work”)

- Much recent work considers sentiment analysis from a semantic-composition perspective (Moilanen and Pulman, 2007; Choi and Cardie, 2008; Socher et al., 2012; Socher et al., 2013) and has achieved state-of-the-art performance. (Page 3, “Related work”)

- …Socher et al. (2013), which has been shown to achieve state-of-the-art performance in sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- A recursive neural tensor network (RNTN) is a specific form of feed-forward neural network that operates over a syntactic (phrase-structure) parse tree to conduct compositional sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- Figure 2: Prior sentiment-enriched tensor network (PSTN) model for sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- In general, we argue that one should always consider modeling negators individually in a sentiment analysis system. (Page 8, “Experimental results”)

statistically significantly

- We will show that this simple modification improves the fitting performance statistically significantly. (Page 3, “Negation models based on heuristics”)

- We will show that this model also statistically significantly outperforms basic shifting without overfitting, although the number of parameters has increased. (Page 3, “Negation models based on heuristics”)

- Models marked with an asterisk (*) are statistically significantly better than the random baseline. (Page 7, “Experimental results”)

- Double asterisks (**) indicate that a model is statistically significantly different from model (6), and the model marked with the double dagger (‡) is significantly better than model (7). (Page 7, “Experimental results”)

- We can observe statistically significant differences in shifting ability between many negator pairs, such as between “is_never” and “do_not” and between “does_not” and “cannot”. (Page 7, “Experimental results”)

- …performance statistically significantly. (Page 9, “Conclusions”)
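To make “basic shifting” and a negator-dependent extension concrete, the sketch below fits both by minimizing mean absolute error, the metric reported in Table 1. It assumes the commonly used forms of the heuristics (reversing: s(w_n, w) = -s(w); shifting: s(w_n, w) = s(w) - sign(s(w))·C), with one shared C for basic shifting and one C per negator for the extension. All function names are hypothetical, the inputs are made-up toy numbers rather than treebank data, and no significance test is shown.

```python
import numpy as np

def reversing(s_arg):
    """Reversing heuristic (assumed form): flip the sign of the argument's sentiment."""
    return -s_arg

def shifting(s_arg, C):
    """Shifting heuristic (assumed form): move sentiment toward the opposite pole by C."""
    return s_arg - np.sign(s_arg) * C

def mae(pred, gold):
    """Mean absolute error."""
    return float(np.mean(np.abs(pred - gold)))

def fit_shift(s_arg, s_neg):
    """Shift constant C minimizing the MAE of shifting(s_arg, C) against s_neg."""
    mask = s_arg != 0  # neutral arguments are unaffected by C
    # Per-example residual is |C - t_i|, so the MAE-optimal C is the median of t.
    t = np.sign(s_arg[mask]) * (s_arg[mask] - s_neg[mask])
    return float(np.median(t)) if t.size else 0.0

def fit_negator_shifts(negators, s_arg, s_neg):
    """One shift constant per negator: more parameters than basic shifting."""
    return {str(n): fit_shift(s_arg[negators == n], s_neg[negators == n])
            for n in np.unique(negators)}

def predict_negator_shifting(negators, s_arg, shifts):
    """Apply each example's negator-specific shift constant."""
    C = np.array([shifts[str(n)] for n in negators])
    return shifting(s_arg, C)

if __name__ == "__main__":
    # Made-up toy values, not taken from the Stanford Sentiment Treebank.
    negators = np.array(["is_not", "is_not", "do_not", "do_not"])
    s_arg = np.array([0.4, -0.3, 0.2, -0.1])   # s(w): argument sentiment
    s_neg = np.array([-0.1, 0.1, 0.0, 0.1])    # s(w_n, w): negated-phrase sentiment

    C = fit_shift(s_arg, s_neg)
    shifts = fit_negator_shifts(negators, s_arg, s_neg)
    print("reversing MAE:", mae(reversing(s_arg), s_neg))
    print("basic shifting MAE:", mae(shifting(s_arg, C), s_neg))
    print("negator-based shifting MAE:",
          mae(predict_negator_shifting(negators, s_arg, shifts), s_neg))
```

Because the shifting residual can be rewritten as |C - sign(s(w))·(s(w) - s(w_n, w))|, the MAE-optimal shift is simply a median, so no iterative optimizer is needed in this sketch.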

parse tree

- The recently available Stanford Sentiment Treebank (Socher et al., 2013) provides manually annotated, real-valued sentiment scores for all phrases in parse trees. (Page 1, “Introduction”)

- Such models work in a bottom-up fashion over the parse tree of a sentence to infer the sentiment label of the sentence as a composition of the sentiment expressed by its constituent parts. (Page 3, “Related work”)

- A recursive neural tensor network (RNTN) is a specific form of feed-forward neural network that operates over a syntactic (phrase-structure) parse tree to conduct compositional sentiment analysis. (Page 4, “Semantics-enriched modeling”)

- Each node of the parse tree is a fixed-length vector that encodes compositional semantics and syntax, which can be used to predict the sentiment of this node. (Page 4, “Semantics-enriched modeling”)

- Data. As described earlier, the Stanford Sentiment Treebank (Socher et al., 2013) has manually annotated, real-valued sentiment values for all phrases in parse trees. (Page 6, “Experiment setup”)
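The bottom-up computation described in these excerpts can be illustrated with a small post-order traversal over a binary parse tree, where every node receives a fixed-length vector that a readout turns into a sentiment distribution. This is a sketch under simplifying assumptions: the composition is the plain recursive neural network form tanh(W[a; b]) rather than the full RNTN, the readout is a linear layer followed by softmax, and the `Node` class, `encode`, `predict_sentiment`, and all parameter names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional

import numpy as np

@dataclass
class Node:
    """Binary parse-tree node: leaves carry a word, internal nodes two children."""
    word: Optional[str] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    vec: Optional[np.ndarray] = None  # filled in by encode()

def encode(node: Node, embeddings: Dict[str, np.ndarray], W: np.ndarray) -> np.ndarray:
    """Post-order pass: both children are encoded before their parent."""
    if node.word is not None:
        node.vec = embeddings[node.word]
    else:
        a = encode(node.left, embeddings, W)
        b = encode(node.right, embeddings, W)
        node.vec = np.tanh(W @ np.concatenate([a, b]))
    return node.vec

def predict_sentiment(node: Node, U: np.ndarray) -> np.ndarray:
    """Softmax over sentiment classes from the node's vector (call encode() first)."""
    scores = U @ node.vec
    exp = np.exp(scores - scores.max())  # subtract max for numerical stability
    return exp / exp.sum()

# Usage: the phrase "not (very good)" with toy 2-dimensional embeddings.
emb = {"not": np.array([1.0, 0.0]), "very": np.array([0.0, 1.0]), "good": np.array([0.5, 0.5])}
tree = Node(left=Node(word="not"), right=Node(left=Node(word="very"), right=Node(word="good")))
encode(tree, emb, W=np.full((2, 4), 0.5))
print(predict_sentiment(tree, U=np.eye(3, 2)))
```

Since every internal node keeps its own vector, the same readout can be applied to `tree.right` (the argument “very good”) as well as to the root (the negated phrase).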

human annotated

- We then make the models dependent on the negators and demonstrate that such a simple extension can significantly improve the performance of fitting to the human annotated data. (Page 2, “Introduction”)

- As discussed above, we use the human annotated sentiment for the arguments, as in the models discussed in Section 3. (Page 5, “Semantics-enriched modeling”)

- When the depth is at most 4, the RNTN performs very well and the (human annotated) prior sentiment of arguments used in PSTN does not bring additional improvement over RNTN. (Page 8, “Experimental results”)

- This paper provides a comprehensive and quantitative study of the behavior of negators through a unified view of fitting human annotation. (Page 8, “Conclusions”)

manually annotated

- The recently available Stanford Sentiment Treebank (Socher et al., 2013) provides manually annotated, real-valued sentiment scores for all phrases in parse trees. (Page 1, “Introduction”)

- Data. As described earlier, the Stanford Sentiment Treebank (Socher et al., 2013) has manually annotated, real-valued sentiment values for all phrases in parse trees. (Page 6, “Experiment setup”)

- The phrases at all tree nodes were manually annotated with one of 25 sentiment values that uniformly span the range between the negative and positive poles. (Page 6, “Experiment setup”)
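As a worked example of a 25-point scale with uniform spacing, the sketch assumes the two poles are mapped to 0 (most negative) and 1 (most positive). Under that assumption neighboring values are 1/24 ≈ 0.042 apart and the neutral midpoint sits at index 12; the mapping used in the paper's own analysis may differ, and `sentiment_scale` and `snap` are hypothetical helpers.

```python
def sentiment_scale(lo=0.0, hi=1.0, n=25):
    """n evenly spaced sentiment values from lo (negative pole) to hi (positive pole)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]

def snap(value, scale):
    """Nearest value on the scale (ties go to the lower value, the first one found)."""
    return min(scale, key=lambda v: abs(v - value))

scale = sentiment_scale()
print(len(scale), scale[1] - scale[0], scale[12])
print(snap(0.49, scale))
```

Passing `lo=-1.0, hi=1.0` instead centers the scale on zero, which can be convenient when positive and negative sentiment should have opposite signs.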
