Article Structure

Abstract

In this paper, we propose a bigram based supervised method for extractive document summarization in the integer linear programming (ILP) framework.

Introduction

Extractive summarization is a sentence selection problem: identifying important summary sentences from one or multiple documents.

Proposed Method 2.1 Bigram Gain Maximization by ILP

We choose bigrams as the language concepts in our proposed method since they have been successfully used in previous work.

Experiments

3.1 Data

Experiment and Analysis

4.1 Experimental Results

Related Work

We briefly describe some prior work on summarization in this section.

Conclusion and Future Work

In this paper, we leverage the ILP method as a core component in our summarization system.

Topics

bigrams

- In this paper, we propose a bigram based supervised method for extractive document summarization in the integer linear programming (ILP) framework. Page 1, “Abstract”

- For each bigram, a regression model is used to estimate its frequency in the reference summary. Page 1, “Abstract”

- The regression model uses a variety of indicative features and is trained discriminatively to minimize the distance between the estimated and the ground truth bigram frequency in the reference summary. Page 1, “Abstract”

- During testing, the sentence selection problem is formulated as an ILP problem to maximize the bigram gains. Page 1, “Abstract”

- We also conducted various analysis to show the impact of bigram selection, weight estimation, and ILP setup. Page 1, “Abstract”

- They used bigrams as such language concepts. Page 1, “Introduction”

- Gillick and Favre (Gillick and Favre, 2009) used bigrams as concepts, which are selected from a subset of the sentences, and their document frequency as the weight in the objective function. Page 1, “Introduction”

- In this paper, we propose to find a candidate summary such that the language concepts (e.g., bigrams) in this candidate summary and the reference summary can have the same frequency. Page 1, “Introduction”

- To estimate the bigram frequency in the summary, we propose to use a supervised regression model that is discriminatively trained using a variety of features. Page 2, “Introduction”

- We choose bigrams as the language concepts in our proposed method since they have been successfully used in previous work. Page 2, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- In addition, we expect that the bigram oriented ILP is consistent with the ROUGE-2 measure widely used for summarization evaluation. Page 2, “Proposed Method 2.1 Bigram Gain Maximization by ILP”
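
The excerpts above speak of "bigram gains" and of matching bigram frequencies between a candidate summary and a reference summary. A minimal Python sketch of one way to measure that match is given here. It assumes the gain is the clipped overlap, i.e. the sum over bigrams of the smaller of the two counts, which is also how ROUGE-2 style matching is usually counted. The function names `bigram_counts` and `bigram_gain`, whitespace tokenization, and the toy sentences are illustrative assumptions, not taken from the paper.

```python
from collections import Counter


def bigram_counts(tokens):
    """Count adjacent token pairs in a list of tokens."""
    return Counter(zip(tokens, tokens[1:]))


def bigram_gain(candidate_tokens, reference_tokens):
    """Clipped bigram overlap: sum of min(candidate count, reference count).

    Assumption: this clipped-count form stands in for the "bigram gain";
    the excerpts do not spell out the exact formula.
    """
    cand = bigram_counts(candidate_tokens)
    ref = bigram_counts(reference_tokens)
    # A Counter returns 0 for a missing key, so unseen bigrams add nothing.
    return sum(min(n, ref[b]) for b, n in cand.items())


# Toy example (hypothetical sentences, whitespace tokenization).
reference = "the cat sat on the mat".split()
candidate = "the cat sat on a mat".split()
gain = bigram_gain(candidate, reference)
```

In this toy case the candidate shares three of the five reference bigrams. A real system would count bigrams per sentence rather than across sentence boundaries.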

See all papers in Proc. ACL 2013 that mention bigrams.


ILP

- In this paper, we propose a bigram based supervised method for extractive document summarization in the integer linear programming (ILP) framework. Page 1, “Abstract”

- During testing, the sentence selection problem is formulated as an ILP problem to maximize the bigram gains. Page 1, “Abstract”

- We demonstrate that our system consistently outperforms the previous ILP method on different TAC data sets, and performs competitively compared to the best results in the TAC evaluations. Page 1, “Abstract”

- We also conducted various analysis to show the impact of bigram selection, weight estimation, and ILP setup. Page 1, “Abstract”

- Many methods have been developed for this problem, including supervised approaches that use classifiers to predict summary sentences, graph based approaches to rank the sentences, and recent global optimization methods such as integer linear programming (ILP) and submodular methods. Page 1, “Introduction”

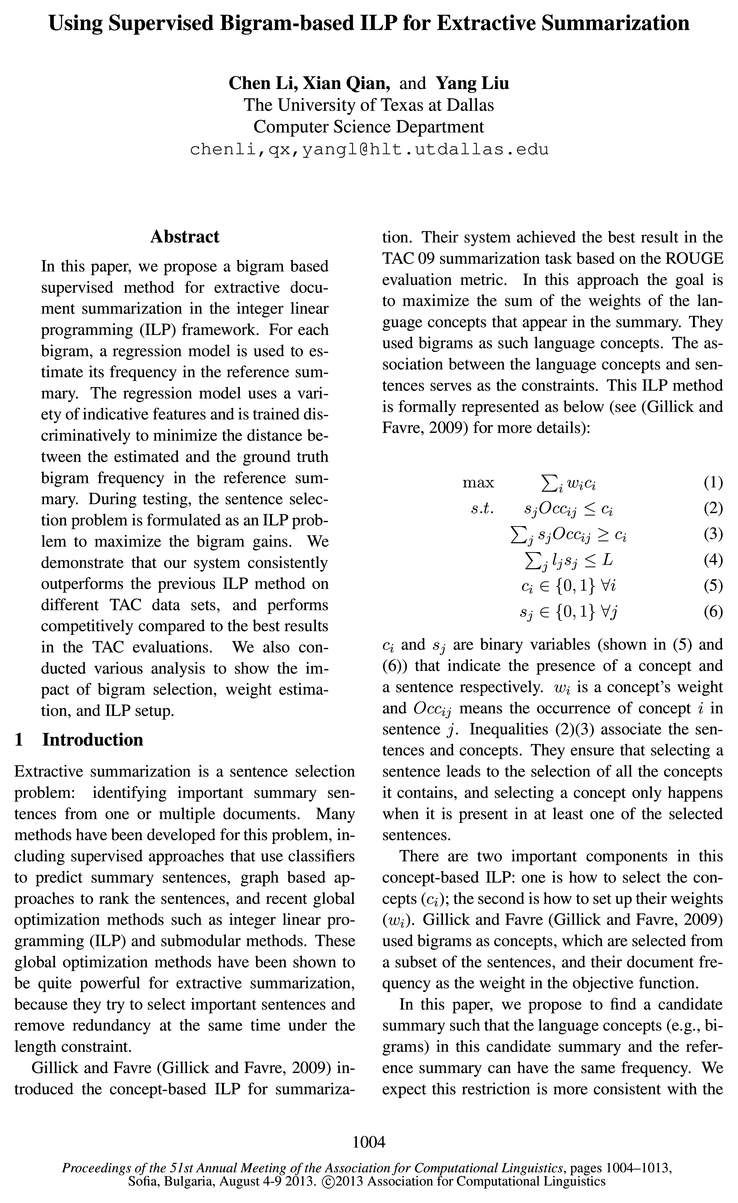

- Gillick and Favre (Gillick and Favre, 2009) introduced the concept-based ILP for summarization. Page 1, “Introduction”

- This ILP method is formally represented as below (see (Gillick and Favre, 2009) for more details): Page 1, “Introduction”

- There are two important components in this concept-based ILP: one is how to select the concepts (Ci); the second is how to set up their weights (wi). Page 1, “Introduction”

- In addition, in the previous concept-based ILP method, the constraints are with respect to the appearance of language concepts, hence it cannot distinguish the importance of different language concepts in the reference summary. Page 2, “Introduction”

- Our method can decide not only which language concepts to use in ILP, but also the frequency of these language concepts in the candidate summary. Page 2, “Introduction”

- Our experiments on several TAC summarization data sets demonstrate this proposed method outperforms the previous ILP system and often the best performing TAC system. Page 2, “Introduction”
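
To make the concept-based formulation in the excerpts above concrete, here is a small Python sketch of the selection problem it describes: choose sentences under a length budget so that the total weight of the covered concepts is as large as possible, with each concept counted once however often it appears. For brevity this uses exhaustive search instead of an ILP solver, so it only suits toy inputs. The function name `select_sentences`, the data layout, and the example weights are assumptions made for illustration.

```python
from itertools import combinations


def select_sentences(sentences, weights, max_words):
    """Exhaustively pick the subset with the highest covered concept weight.

    sentences: list of (word_count, set_of_concepts) pairs
    weights:   dict mapping each concept to its weight
    max_words: length budget for the summary

    Assumption: brute force replaces the ILP solver; the objective and the
    length constraint follow the concept-based formulation only in spirit.
    """
    best_value, best_subset = 0, ()
    indices = range(len(sentences))
    for k in range(1, len(sentences) + 1):
        for subset in combinations(indices, k):
            length = sum(sentences[j][0] for j in subset)
            if length > max_words:
                continue  # violates the length constraint
            # A concept counts once, no matter how many sentences contain it.
            covered = set().union(*(sentences[j][1] for j in subset))
            value = sum(weights.get(c, 0) for c in covered)
            if value > best_value:
                best_value, best_subset = value, subset
    return best_value, best_subset


# Toy instance: three sentences, four concepts (hypothetical weights).
sentences = [(5, {"a", "b"}), (4, {"b", "c"}), (6, {"c", "d"})]
weights = {"a": 3, "b": 2, "c": 2, "d": 1}
value, chosen = select_sentences(sentences, weights, max_words=10)
```

Because a concept contributes its weight only once, this objective rewards appearance and ignores how often a concept occurs, which is the limitation the excerpts attribute to the earlier concept-based ILP.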


regression model

- For each bigram, a regression model is used to estimate its frequency in the reference summary. Page 1, “Abstract”

- The regression model uses a variety of indicative features and is trained discriminatively to minimize the distance between the estimated and the ground truth bigram frequency in the reference summary. Page 1, “Abstract”

- To estimate the bigram frequency in the summary, we propose to use a supervised regression model that is discriminatively trained using a variety of features. Page 2, “Introduction”

- 2.2 Regression Model for Bigram Frequency Estimation. Page 2, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- We propose to use a regression model for this. Page 2, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- To train this regression model using the given reference abstractive summaries, rather than trying to minimize the squared error as typically done, we propose a new objective function. Page 3, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- Each bigram is represented using a set of features in the above regression model. Page 3, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- In our method, we first extract all the bigrams from the selected sentences and then estimate each bigram’s $N_{b,ref}$ using the regression model. Page 4, “Experiments”

- When training our bigram regression model, we use each of the 4 reference summaries separately, i.e., the bigram frequency is obtained from one reference summary. Page 4, “Experiments”

- We used the estimated value from the regression model; the ICSI system just uses the bigram’s document frequency in the original text as weight. Page 4, “Experiment and Analysis”

- # bigrams used in our regression model (i.e., in selected sentences): 2140.7. Page 5, “Experiment and Analysis”
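
The excerpts above describe a regression model that maps bigram features to an estimated frequency and is trained by minimizing a distance between estimated and true frequencies, not squared error. The Python sketch below shows one plausible shape for that setup. It assumes a log-linear model whose scores are normalized with a softmax, and a KL distance as the training loss. The feature vectors, the learning rate, and the names `estimate_frequencies`, `kl_distance`, and `gradient_step` are hypothetical.

```python
import math


def estimate_frequencies(features, w):
    """Softmax over linear scores: one normalized frequency per bigram."""
    scores = [sum(wi * fi for wi, fi in zip(w, f)) for f in features]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kl_distance(true_freq, est_freq):
    """KL(true || estimated), skipping bigrams with zero true frequency."""
    return sum(p * math.log(p / q) for p, q in zip(true_freq, est_freq) if p > 0)


def gradient_step(features, true_freq, w, lr=0.5):
    """One gradient-descent step on the KL distance with respect to w.

    For a softmax model with true frequencies summing to 1, the gradient
    is sum_b (estimated_b - true_b) * features_b.
    """
    est = estimate_frequencies(features, w)
    grad = [0.0] * len(w)
    for f, p, q in zip(features, true_freq, est):
        for k, fk in enumerate(f):
            grad[k] += (q - p) * fk
    return [wk - lr * gk for wk, gk in zip(w, grad)]


# Toy data: three bigrams, two made-up features each.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
true_freq = [0.5, 0.3, 0.2]  # normalized frequencies in one reference summary
w = [0.0, 0.0]
before = kl_distance(true_freq, estimate_frequencies(features, w))
for _ in range(200):
    w = gradient_step(features, true_freq, w)
estimated = estimate_frequencies(features, w)
after = kl_distance(true_freq, estimated)
```

Normalizing with a softmax keeps the estimates positive and summing to one, so they can be compared directly with normalized reference frequencies.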


objective function

- Gillick and Favre (Gillick and Favre, 2009) used bigrams as concepts, which are selected from a subset of the sentences, and their document frequency as the weight in the objective function. Page 1, “Introduction”

- where $C_b$ is an auxiliary variable we introduce that is equal to $|n_{b,ref} - \sum_s z(s) * n_{b,s}|$, and $n_{b,ref}$ is a constant that can be dropped from the objective function. Page 2, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- To train this regression model using the given reference abstractive summaries, rather than trying to minimize the squared error as typically done, we propose a new objective function. Page 3, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- The objective function for training is thus to minimize the KL distance: Page 3, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- Finally, we replace $N_{b,ref}$ in Formula (15) with Eq (14) and get the objective function below: Page 3, “Proposed Method 2.1 Bigram Gain Maximization by ILP”

- They used a modified objective function in order to consider whether the selected sentence is globally optimal. Page 8, “Related Work”
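
One of the excerpts above mentions an auxiliary variable equal to the absolute difference between a bigram's reference count and its count in the selected sentences, and notes that the reference count is a constant that can be dropped. The LaTeX sketch below shows how such an absolute value is commonly made linear so an ILP solver can handle it. It assumes $z(s) \in \{0, 1\}$ marks whether sentence $s$ is selected, $n_{b,s}$ is the count of bigram $b$ in sentence $s$, and $C_b$ is the auxiliary variable. The two-sided constraint is the standard linearization and may differ from the paper's exact constraint set; the summary length constraint is omitted.

```latex
\begin{align*}
& \max_{z} \sum_{b} \bigl( n_{b,\mathrm{ref}} - C_b \bigr)
  \;\Longleftrightarrow\; \min_{z,\,C} \sum_{b} C_b \\
& \text{s.t.}\quad -C_b \le n_{b,\mathrm{ref}} - \sum_{s} z(s)\, n_{b,s} \le C_b
  \quad \text{for every bigram } b \\
& \phantom{\text{s.t.}\quad} z(s) \in \{0, 1\} \quad \text{for every sentence } s
\end{align*}
```

Because the sum of the $C_b$ is minimized, each $C_b$ settles at the absolute difference at the optimum, and the constant term $\sum_b n_{b,\mathrm{ref}}$ does not affect which sentences are chosen.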
