Article Structure

Abstract

Natural language parsing has typically been done with small sets of discrete categories such as NP and VP, but this representation does not capture the full syntactic nor semantic richness of linguistic phrases, and attempts to improve on this by lexicalizing phrases or splitting categories only partly address the problem at the cost of huge feature spaces and sparseness.

Introduction

Syntactic parsing is a central task in natural language processing because of its importance in mediating between linguistic expression and meaning.

Topics

neural network

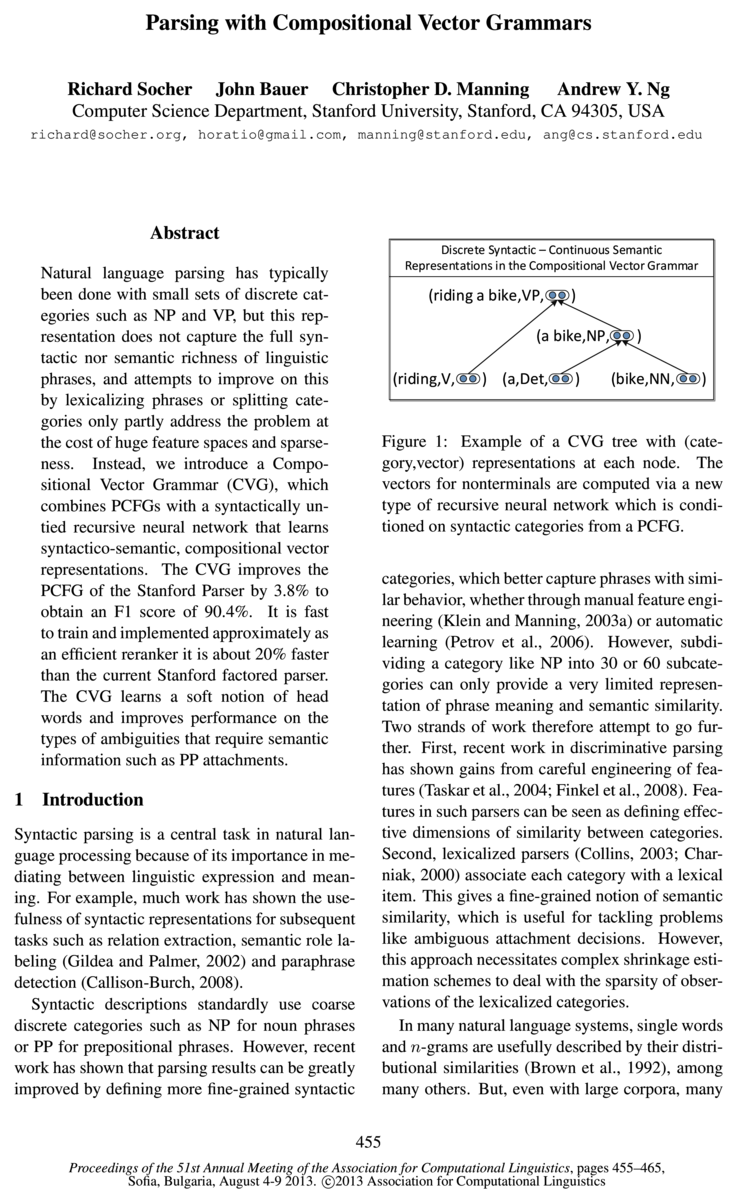

- Instead, we introduce a Compositional Vector Grammar (CVG), which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations.Page 1, “Abstract”

- The vectors for nonterminals are computed via a new type of recursive neural network which is conditioned on syntactic categories from a PCFG.Page 1, “Introduction”

- l. CVGs combine the advantages of standard probabilistic context free grammars (PCFG) with those of recursive neural networks (RNNs).Page 2, “Introduction”

- This requires the composition function to be extremely powerful, since it has to combine phrases with different syntactic head words, and it is hard to optimize since the parameters form a very deep neural network .Page 2, “Introduction”

- Deep Learning and Recursive Deep Learning Early attempts at using neural networks to describe phrases include Elman (1991), who used recurrent neural networks to create representations of sentences from a simple toy grammar and to analyze the linguistic expressiveness of the resulting representations.Page 2, “Introduction”

- Collobert and Weston (2008) showed that neural networks can perform well on sequence labeling language processing tasks while also learning appropriate features.Page 2, “Introduction”

- Henderson (2003) was the first to show that neural networks can be successfully used for large scale parsing.Page 3, “Introduction”

- (2003) apply recursive neural networks to re-rank possible phrase attachments in an incremental parser.Page 3, “Introduction”

- The idea is to construct a neural network that outputs high scores for windows that occur in a large unlabeled corpus and low scores for windows where one word is replaced by a random word.Page 3, “Introduction”

- The standard RNN essentially ignores all POS tags and syntactic categories and each nonterminal node is associated with the same neural network (i.e., the weights across nodes are fully tied).Page 4, “Introduction”

- Note that in order to replicate the neural network and compute node representations in a bottom up fashion, the parent must have the same dimensionality as the children: p E R”.Page 4, “Introduction”

See all papers in Proc. ACL 2013 that mention neural network.

See all papers in Proc. ACL that mention neural network.

Back to top.

recursive

- Instead, we introduce a Compositional Vector Grammar (CVG), which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations.Page 1, “Abstract”

- The vectors for nonterminals are computed via a new type of recursive neural network which is conditioned on syntactic categories from a PCFG.Page 1, “Introduction”

- l. CVGs combine the advantages of standard probabilistic context free grammars (PCFG) with those of recursive neural networks (RNNs).Page 2, “Introduction”

- sets of discrete states and recursive deep learning models that jointly learn classifiers and continuous feature representations for variable-sized inputs.Page 2, “Introduction”

- Deep Learning and Recursive Deep Learning Early attempts at using neural networks to describe phrases include Elman (1991), who used recurrent neural networks to create representations of sentences from a simple toy grammar and to analyze the linguistic expressiveness of the resulting representations.Page 2, “Introduction”

- However, their model is lacking in that it cannot represent the recursive structure inherent in natural language.Page 2, “Introduction”

- (2003) apply recursive neural networks to re-rank possible phrase attachments in an incremental parser.Page 3, “Introduction”

- Standard Recursive Neural NetworkPage 5, “Introduction”

- Figure 2: An example tree with a simple Recursive Neural Network: The same weight matrix is replicated and used to compute all nonterminal node representations.Page 5, “Introduction”

- Syntactically Untied Recursive Neural NetworkPage 5, “Introduction”

- The compositional vectors are learned with a new syntactically untied recursive neural network.Page 9, “Introduction”

See all papers in Proc. ACL 2013 that mention recursive.

See all papers in Proc. ACL that mention recursive.

Back to top.

vector representations

- Instead, we introduce a Compositional Vector Grammar (CVG), which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations .Page 1, “Abstract”

- Previous RNN-based parsers used the same (tied) weights at all nodes to compute the vector representing a constituent (Socher et al., 2011b).Page 2, “Introduction”

- Therefore we combine syntactic and semantic information by giving the parser access to rich syntactico-semantic information in the form of distributional word vectors and compute compositional semantic vector representations for longer phrases (Costa et al., 2003; Menchetti et al., 2005; Socher et al., 2011b).Page 3, “Introduction”

- We will first briefly introduce single word vector representations and then describe the CVG objective function, tree scoring and inference.Page 3, “Introduction”

- 3.1 Word Vector RepresentationsPage 3, “Introduction”

- In most systems that use a vector representation for words, such vectors are based on co-occurrence statistics of each word and its context (Turney and Pantel, 2010).Page 3, “Introduction”

- These vector representations capture interesting linear relationships (up to some accuracy), such as king—man+w0man % queen (Mikolov et al., 2013).Page 3, “Introduction”

- This index is used to retrieve the word’s vector representation aw using a simple multiplication with a binary vector 6, which is zero everywhere, exceptPage 3, “Introduction”

- Leaf nodes are n-dimensional vector representations of words.Page 5, “Introduction”

See all papers in Proc. ACL 2013 that mention vector representations.

See all papers in Proc. ACL that mention vector representations.

Back to top.

recursive neural

- Instead, we introduce a Compositional Vector Grammar (CVG), which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations.Page 1, “Abstract”

- The vectors for nonterminals are computed via a new type of recursive neural network which is conditioned on syntactic categories from a PCFG.Page 1, “Introduction”

- l. CVGs combine the advantages of standard probabilistic context free grammars (PCFG) with those of recursive neural networks (RNNs).Page 2, “Introduction”

- (2003) apply recursive neural networks to re-rank possible phrase attachments in an incremental parser.Page 3, “Introduction”

- Standard Recursive Neural NetworkPage 5, “Introduction”

- Figure 2: An example tree with a simple Recursive Neural Network: The same weight matrix is replicated and used to compute all nonterminal node representations.Page 5, “Introduction”

- Syntactically Untied Recursive Neural NetworkPage 5, “Introduction”

- The compositional vectors are learned with a new syntactically untied recursive neural network.Page 9, “Introduction”

See all papers in Proc. ACL 2013 that mention recursive neural.

See all papers in Proc. ACL that mention recursive neural.

Back to top.

Recursive Neural Network

- Instead, we introduce a Compositional Vector Grammar (CVG), which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations.Page 1, “Abstract”

- The vectors for nonterminals are computed via a new type of recursive neural network which is conditioned on syntactic categories from a PCFG.Page 1, “Introduction”

- l. CVGs combine the advantages of standard probabilistic context free grammars (PCFG) with those of recursive neural networks (RNNs).Page 2, “Introduction”

- (2003) apply recursive neural networks to re-rank possible phrase attachments in an incremental parser.Page 3, “Introduction”

- Standard Recursive Neural NetworkPage 5, “Introduction”

- Figure 2: An example tree with a simple Recursive Neural Network : The same weight matrix is replicated and used to compute all nonterminal node representations.Page 5, “Introduction”

- Syntactically Untied Recursive Neural NetworkPage 5, “Introduction”

- The compositional vectors are learned with a new syntactically untied recursive neural network .Page 9, “Introduction”

See all papers in Proc. ACL 2013 that mention Recursive Neural Network.

See all papers in Proc. ACL that mention Recursive Neural Network.

Back to top.

beam search

- The subsequent section will then describe a bottom-up beam search and its approximation for finding the optimal tree.Page 4, “Introduction”

- One could use a bottom-up beam search , keeping a k-best list at every cell of the chart, possibly for each syntactic category.Page 6, “Introduction”

- This beam search inference procedure is still considerably slower than using only the simplified base PCFG, especially since it has a small state space (see next section forPage 6, “Introduction”

- Then, the second pass is a beam search with the full CVG model (including the more expensive matrix multiplications of the SU-RNN).Page 6, “Introduction”

- This beam search only considers phrases that appear in the top 200 parses.Page 6, “Introduction”

See all papers in Proc. ACL 2013 that mention beam search.

See all papers in Proc. ACL that mention beam search.

Back to top.

highest scoring

- This max-margin, structure-prediction objective (Taskar et al., 2004; Ratliff et al., 2007; Socher et al., 2011b) trains the CVG so that the highest scoring tree will be the correct tree: 9905,) = yi and its score will be larger up to a margin to other possible trees 3) 6 32cm):Page 4, “Introduction”

- Intuitively, to minimize this objective, the score of the correct tree 3/,- is increased and the score of the highest scoring incorrect tree 3) is decreased.Page 4, “Introduction”

- This score will be used to find the highest scoring tree.Page 5, “Introduction”

- To minimize the objective we want to increase the scores of the correct tree’s constituents and decrease the score of those in the highest scoring incorrect tree.Page 6, “Introduction”

- where gmax is the tree with the highest score .Page 7, “Introduction”

See all papers in Proc. ACL 2013 that mention highest scoring.

See all papers in Proc. ACL that mention highest scoring.

Back to top.

fine-grained

- However, recent work has shown that parsing results can be greatly improved by defining more fine-grained syntacticPage 1, “Introduction”

- This gives a fine-grained notion of semantic similarity, which is useful for tackling problems like ambiguous attachment decisions.Page 1, “Introduction”

- The former can capture the discrete categorization of phrases into NP or PP while the latter can capture fine-grained syntactic and compositional-semantic information on phrases and words.Page 2, “Introduction”

- However, many parsing decisions show fine-grained semantic factors at work.Page 3, “Introduction”

See all papers in Proc. ACL 2013 that mention fine-grained.

See all papers in Proc. ACL that mention fine-grained.

Back to top.

lexicalized

- Natural language parsing has typically been done with small sets of discrete categories such as NP and VP, but this representation does not capture the full syntactic nor semantic richness of linguistic phrases, and attempts to improve on this by lexicalizing phrases or splitting categories only partly address the problem at the cost of huge feature spaces and sparseness.Page 1, “Abstract”

- Second, lexicalized parsers (Collins, 2003; Charniak, 2000) associate each category with a lexical item.Page 1, “Introduction”

- However, this approach necessitates complex shrinkage estimation schemes to deal with the sparsity of observations of the lexicalized categories.Page 1, “Introduction”

- Another approach is lexicalized parsers (Collins, 2003; Chamiak, 2000) that describe each category with a lexical item, usually the head word.Page 2, “Introduction”

See all papers in Proc. ACL 2013 that mention lexicalized.

See all papers in Proc. ACL that mention lexicalized.

Back to top.

natural language

- Natural language parsing has typically been done with small sets of discrete categories such as NP and VP, but this representation does not capture the full syntactic nor semantic richness of linguistic phrases, and attempts to improve on this by lexicalizing phrases or splitting categories only partly address the problem at the cost of huge feature spaces and sparseness.Page 1, “Abstract”

- Syntactic parsing is a central task in natural language processing because of its importance in mediating between linguistic expression and meaning.Page 1, “Introduction”

- In many natural language systems, single words and n-grams are usefully described by their distributional similarities (Brown et al., 1992), among many others.Page 1, “Introduction”

- However, their model is lacking in that it cannot represent the recursive structure inherent in natural language .Page 2, “Introduction”

See all papers in Proc. ACL 2013 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

POS tags

- 3.1 and the POS tags come from a PCFG.Page 4, “Introduction”

- The standard RNN essentially ignores all POS tags and syntactic categories and each nonterminal node is associated with the same neural network (i.e., the weights across nodes are fully tied).Page 4, “Introduction”

- While this results in a powerful composition function that essentially depends on the words being combined, the number of model parameters explodes and the composition functions do not capture the syntactic commonalities between similar POS tags or syntactic categories.Page 5, “Introduction”

- This reduces the number of states from 15,276 to 12,061 states and 602 POS tags .Page 7, “Introduction”

See all papers in Proc. ACL 2013 that mention POS tags.

See all papers in Proc. ACL that mention POS tags.

Back to top.

Deep Learning

- sets of discrete states and recursive deep learning models that jointly learn classifiers and continuous feature representations for variable-sized inputs.Page 2, “Introduction”

- Deep Learning and Recursive Deep Learning Early attempts at using neural networks to describe phrases include Elman (1991), who used recurrent neural networks to create representations of sentences from a simple toy grammar and to analyze the linguistic expressiveness of the resulting representations.Page 2, “Introduction”

- The idea of untying has also been successfully used in deep learning applied to vision (Le et al., 2010).Page 3, “Introduction”

See all papers in Proc. ACL 2013 that mention Deep Learning.

See all papers in Proc. ACL that mention Deep Learning.

Back to top.

n-grams

- In many natural language systems, single words and n-grams are usefully described by their distributional similarities (Brown et al., 1992), among many others.Page 1, “Introduction”

- n-grams will never be seen during training, especially when n is large.Page 2, “Introduction”

- In this work, we present a new solution to learn features and phrase representations even for very long, unseen n-grams .Page 2, “Introduction”

See all papers in Proc. ACL 2013 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

objective function

- We will first briefly introduce single word vector representations and then describe the CVG objective function , tree scoring and inference.Page 3, “Introduction”

- The main objective function in Eq.Page 6, “Introduction”

- The objective function is not differentiable due to the hinge loss.Page 7, “Introduction”

See all papers in Proc. ACL 2013 that mention objective function.

See all papers in Proc. ACL that mention objective function.

Back to top.

parse tree

- The goal of supervised parsing is to learn a function 9 : 26 —> y, where X is the set of sentences and y is the set of all possible labeled binary parse trees .Page 4, “Introduction”

- The loss increases the more incorrect the proposed parse tree is (Goodman, 1998).Page 4, “Introduction”

- Assume, for now, we are given a labeled parse tree as shown in Fig.Page 4, “Introduction”

See all papers in Proc. ACL 2013 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.