Article Structure

Abstract

This paper proposes a nonparametric Bayesian method for inducing Part-of-Speech (POS) tags in dependency trees to improve the performance of statistical machine translation (SMT).

Introduction

In recent years, syntax-based SMT has made promising progress by employing either dependency parsing (Lin, 2004; Ding and Palmer, 2005; Quirk et al., 2005; Shen et al., 2008; Mi and Liu, 2010) or constituency parsing (Huang et al., 2006; Liu et al., 2006; Galley et al., 2006; Mi and Huang, 2008; Zhang et al., 2008; Cohn and Blunsom, 2009; Liu et al., 2009; Mi and Liu, 2010; Zhang et al., 2011) on the source side, the target side, or both.

Related Work

A number of unsupervised methods have been proposed for inducing POS tags.

Bilingual Infinite Tree Model

We propose a bilingual variant of the infinite tree model, the bilingual infinite tree model, which utilizes information from the other language.

Experiment

We tested our proposed models under the NTCIR-9 Japanese-to-English patent translation task (Goto et al., 2011), consisting of approximately 3.2 million bilingual sentences.

Discussion

5.1 Comparison to the IPA POS Tagset

Conclusion

We proposed a novel method for inducing POS tags for SMT.

Topics

POS tags

- In particular, we extend the monolingual infinite tree model (Finkel et al., 2007) to a bilingual scenario: each hidden state ( POS tag ) of a source-side dependency tree emits a source word together with its aligned target word, either jointly (joint model), or independently (independent model).Page 1, “Abstract”

- Evaluations of J apanese-to-English translation on the NTCIR-9 data show that our induced Japanese POS tags for dependency trees improve the performance of a forest-to-string SMT system.Page 1, “Abstract”

- However, dependency parsing, which is a popular choice for Japanese, can incorporate only shallow syntactic information, i.e., POS tags , compared with the richer syntactic phrasal categories in constituency parsing.Page 1, “Introduction”

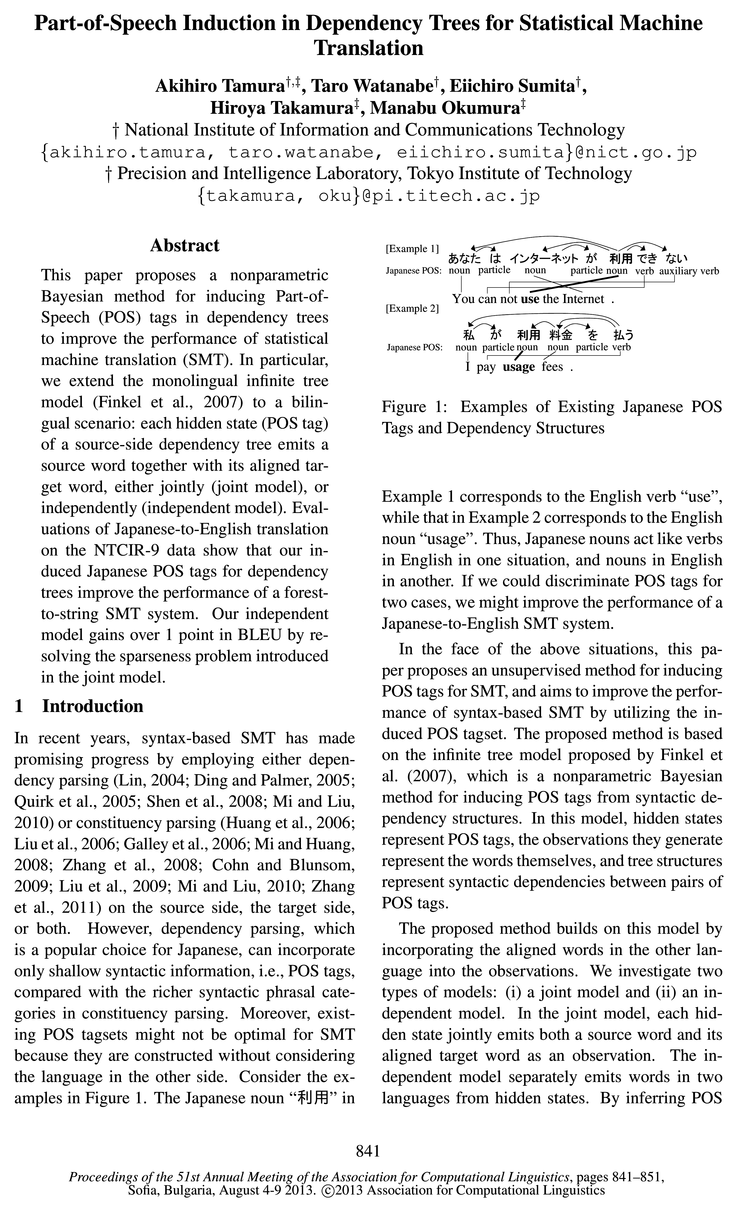

- Figure 1: Examples of Existing Japanese POS Tags and Dependency StructuresPage 1, “Introduction”

- If we could discriminate POS tags for two cases, we might improve the performance of a Japanese-to-English SMT system.Page 1, “Introduction”

- In the face of the above situations, this paper proposes an unsupervised method for inducing POS tags for SMT, and aims to improve the performance of syntax-based SMT by utilizing the induced POS tagset.Page 1, “Introduction”

- (2007), which is a nonparametric Bayesian method for inducing POS tags from syntactic dependency structures.Page 1, “Introduction”

- In this model, hidden states represent POS tags, the observations they generate represent the words themselves, and tree structures represent syntactic dependencies between pairs of POS tags .Page 1, “Introduction”

- tags based on bilingual observations, both models can induce POS tags by incorporating information from the other language.Page 2, “Introduction”

- Consider, for example, inducing a POS tag for the Japanese word “ fl PE” in Figure 1.Page 2, “Introduction”

- Under a monolingual induction method (e.g., the infinite tree model), the “W153” in Example 1 and 2 would both be assigned the same POS tag since they share the same observation.Page 2, “Introduction”

See all papers in Proc. ACL 2013 that mention POS tags.

See all papers in Proc. ACL that mention POS tags.

Back to top.

joint model

- In particular, we extend the monolingual infinite tree model (Finkel et al., 2007) to a bilingual scenario: each hidden state (POS tag) of a source-side dependency tree emits a source word together with its aligned target word, either jointly ( joint model ), or independently (independent model).Page 1, “Abstract”

- Our independent model gains over 1 point in BLEU by resolving the sparseness problem introduced in the joint model .Page 1, “Abstract”

- We investigate two types of models: (i) a joint model and (ii) an independent model.Page 1, “Introduction”

- In the joint model , each hidden state jointly emits both a source word and its aligned target word as an observation.Page 1, “Introduction”

- Figure 4: An Example of the Joint ModelPage 3, “Related Work”

- This paper proposes two types of models that differ in their processes for generating observations: the joint model and the independent model.Page 3, “Bilingual Infinite Tree Model”

- 3.1 Joint ModelPage 3, “Bilingual Infinite Tree Model”

- The joint model is a simple application of the infinite tree model under a bilingual scenario.Page 3, “Bilingual Infinite Tree Model”

- Observations in the joint model are the combination of source words and their aligned target words4, while observations in the monolingual infinite tree model represent only source words.Page 3, “Bilingual Infinite Tree Model”

- Figure 4 shows the process of generating Example 2 in Figure 1 through the joint model , where aligned words are jointly emitted as observations.Page 3, “Bilingual Infinite Tree Model”

- The joint model is prone to a data sparseness problem, since each observation is a combination of a source word and its aligned target word.Page 4, “Bilingual Infinite Tree Model”

See all papers in Proc. ACL 2013 that mention joint model.

See all papers in Proc. ACL that mention joint model.

Back to top.

dependency parser

- In recent years, syntax-based SMT has made promising progress by employing either dependency parsing (Lin, 2004; Ding and Palmer, 2005; Quirk et al., 2005; Shen et al., 2008; Mi and Liu, 2010) or constituency parsing (Huang et al., 2006; Liu et al., 2006; Galley et al., 2006; Mi and Huang, 2008; Zhang et al., 2008; Cohn and Blunsom, 2009; Liu et al., 2009; Mi and Liu, 2010; Zhang et al., 2011) on the source side, the target side, or both.Page 1, “Introduction”

- However, dependency parsing , which is a popular choice for Japanese, can incorporate only shallow syntactic information, i.e., POS tags, compared with the richer syntactic phrasal categories in constituency parsing.Page 1, “Introduction”

- In the training process, the following steps are performed sequentially: preprocessing, inducing a POS tagset for a source language, training a POS tagger and a dependency parser , and training a forest-to-string MT model.Page 6, “Experiment”

- The Japanese sentences are parsed using CaboCha (Kudo and Matsumoto, 2002), which generates dependency structures using a phrasal unit called a bunsetsug, rather than a word unit as in English or Chinese dependency parsing .Page 6, “Experiment”

- Training a POS Tagger and a Dependency ParserPage 7, “Experiment”

- In this step, we train a Japanese dependency parser from the 10,000 Japanese dependency trees with the induced POS tags which are derived from Step 2.Page 7, “Experiment”

- We employed a transition-based dependency parser which can jointly learn POS tagging and dependency parsing (Hatori et al., 2011) under an incremental framework“.Page 7, “Experiment”

- In this step, we train a forest-to-string MT model based on the learned dependency parser in Step 3.Page 7, “Experiment”

- All the Japanese and English sentences in the NTCIR-9 training data are segmented in the same way as in Step 1, and then each Japanese sentence is parsed by the dependency parser learned in Step 3, which simultaneously assigns induced POS tags and word dependencies.Page 7, “Experiment”

- The performance of our methods depends not only on the quality of the induced tag sets but also on the performance of the dependency parser learned in Step 3 of Section 4.1.Page 8, “Discussion”

- Thus we split the 10,000 data into the first 9,000 data for training and the remaining 1,000 for testing, and then a dependency parser was learned in the same way as in Step 3.Page 8, “Discussion”

See all papers in Proc. ACL 2013 that mention dependency parser.

See all papers in Proc. ACL that mention dependency parser.

Back to top.

dependency trees

- This paper proposes a nonparametric Bayesian method for inducing Part-of-Speech (POS) tags in dependency trees to improve the performance of statistical machine translation (SMT).Page 1, “Abstract”

- In particular, we extend the monolingual infinite tree model (Finkel et al., 2007) to a bilingual scenario: each hidden state (POS tag) of a source-side dependency tree emits a source word together with its aligned target word, either jointly (joint model), or independently (independent model).Page 1, “Abstract”

- Evaluations of J apanese-to-English translation on the NTCIR-9 data show that our induced Japanese POS tags for dependency trees improve the performance of a forest-to-string SMT system.Page 1, “Abstract”

- Experiments are carried out on the NTCIR-9 Japanese-to-English task using a binarized forest-to-string SMT system with dependency trees as its source side.Page 2, “Introduction”

- Specifically, the proposed model introduces bilingual observations by embedding the aligned target words in the source-side dependency trees .Page 3, “Bilingual Infinite Tree Model”

- We have assumed a completely unsupervised way of inducing POS tags in dependency trees .Page 4, “Bilingual Infinite Tree Model”

- Specifically, we introduce an auxiliary variable ut for each node in a dependency tree to limit the number of possible transitions.Page 5, “Bilingual Infinite Tree Model”

- Since we focus on the word-level POS induction, each bunsetsu-based dependency tree is converted into its corresponding word-based dependency tree using the following heuristic9: first, the last function word inside each bunsetsu is identified as the head wordlo; then, the remaining words are treated as dependents of the head word in the same bunsetsu; finally, a bunsetsu-based dependency structure is transformed to a word-based dependency structure by preserving the head/modifier relationships of the determined head words.Page 6, “Experiment”

- 9We could use other word-based dependency trees such as trees by the infinite PCFG model (Liang et al., 2007) and syntactic-head or semantic-head dependency trees in Nakazawa and Kurohashi (2012), although it is not our major focus.Page 6, “Experiment”

- In this step, we train a Japanese dependency parser from the 10,000 Japanese dependency trees with the induced POS tags which are derived from Step 2.Page 7, “Experiment”

- Note that the dependency accuracies are measured on the automatically parsed dependency trees , not on the syntactically correct gold standard trees.Page 8, “Discussion”

See all papers in Proc. ACL 2013 that mention dependency trees.

See all papers in Proc. ACL that mention dependency trees.

Back to top.

proposed models

- In the following, we overview the infinite tree model, which is the basis of our proposed model .Page 2, “Related Work”

- model (Finkel et al., 2007), where children are dependent only on their parents, used in our proposed modell .Page 2, “Related Work”

- Specifically, the proposed model introduces bilingual observations by embedding the aligned target words in the source-side dependency trees.Page 3, “Bilingual Infinite Tree Model”

- Note that POSS of target words are assigned by a POS tagger in the target language and are not inferred in the proposed model .Page 4, “Bilingual Infinite Tree Model”

- We tested our proposed models under the NTCIR-9 Japanese-to-English patent translation task (Goto et al., 2011), consisting of approximately 3.2 million bilingual sentences.Page 6, “Experiment”

- The results show that the proposed models can generate more favorable POS tagsets for SMT than an existing POS tagset.Page 8, “Experiment”

- Table 2 shows the number of the IPA POS tags used in the experiments and the POS tags induced by the proposed models .Page 8, “Discussion”

- These examples show that the proposed models can disambiguate POS tags that have different functions in English, whereas the IPA POS tagset treats them jointly.Page 8, “Discussion”

See all papers in Proc. ACL 2013 that mention proposed models.

See all papers in Proc. ACL that mention proposed models.

Back to top.

BLEU

- Our independent model gains over 1 point in BLEU by resolving the sparseness problem introduced in the joint model.Page 1, “Abstract”

- Further, our independent model achieves a more than 1 point gain in BLEU , which resolves the sparseness problem introduced by the bi-word observations.Page 2, “Introduction”

- Table 1: Performance on Japanese-to-English Translation Measured by BLEU (%)Page 7, “Experiment”

- Table 1 shows the performance for the test data measured by case sensitive BLEU (Papineni et al., 2002).Page 7, “Experiment”

- Under the Moses phrase-based SMT system (Koehn et al., 2007) with the default settings, we achieved a 26.80% BLEU score.Page 7, “Experiment”

- Further, when the full-level IPA POS tags12 were used in BS, the system achieved a 27.49% BLEU score, which is worse than the result using the second-level IPA POS tags.Page 8, “Experiment”

See all papers in Proc. ACL 2013 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

hyperparameters

- Our procedure alternates between sampling each of the following variables: the auxiliary variables u, the state assignments z, the transition probabilities 71', the shared DP parameters ,6, and the hyperparameters 040 and y.Page 5, “Bilingual Infinite Tree Model”

- 040 is parameterized by a gamma hyperprior with hyperparameters aa and 045.Page 6, “Bilingual Infinite Tree Model”

- 7 is parameterized by a gamma hyperprior with hyperparameters ya and 7b.Page 6, “Bilingual Infinite Tree Model”

- In sampling a0 and 7, hyperparameters aa, ab, ya, and 7;, are set to 2, 1, 1, and 1, respectively, which is the same setting in Gael et al.Page 7, “Experiment”

- The development test data is used to set up hyperparameters , i.e., to terminate tuning iterations.Page 7, “Experiment”

See all papers in Proc. ACL 2013 that mention hyperparameters.

See all papers in Proc. ACL that mention hyperparameters.

Back to top.

SMT system

- Evaluations of J apanese-to-English translation on the NTCIR-9 data show that our induced Japanese POS tags for dependency trees improve the performance of a forest-to-string SMT system .Page 1, “Abstract”

- If we could discriminate POS tags for two cases, we might improve the performance of a Japanese-to-English SMT system .Page 1, “Introduction”

- Experiments are carried out on the NTCIR-9 Japanese-to-English task using a binarized forest-to-string SMT system with dependency trees as its source side.Page 2, “Introduction”

- We evaluated our bilingual infinite tree model for POS induction using an in-house developed syntax-based forest-to-string SMT system .Page 6, “Experiment”

- Under the Moses phrase-based SMT system (Koehn et al., 2007) with the default settings, we achieved a 26.80% BLEU score.Page 7, “Experiment”

See all papers in Proc. ACL 2013 that mention SMT system.

See all papers in Proc. ACL that mention SMT system.

Back to top.

dynamic programming

- Inference is efficiently carried out by beam sampling (Gael et al., 2008), which combines slice sampling and dynamic programming .Page 2, “Introduction”

- Beam sampling limits the number of possible state transitions for each node to a finite number using slice sampling (Neal, 2003), and then efficiently samples whole hidden state transitions using dynamic programming .Page 5, “Bilingual Infinite Tree Model”

- We can parallelize procedures in sampling u and z because the slice sampling for u and the dynamic programing for z are independent for each sentence.Page 5, “Bilingual Infinite Tree Model”

- ,T) using dynamic programming as follows: In the joint model, p(zt|:c0(t), no.0») ocPage 5, “Bilingual Infinite Tree Model”

See all papers in Proc. ACL 2013 that mention dynamic programming.

See all papers in Proc. ACL that mention dynamic programming.

Back to top.

Gibbs sampling

- (2007) presented a sampling algorithm for the infinite tree model, which is based on the Gibbs sampling in the direct assignment representation for iHMM (Teh et al., 2006).Page 4, “Bilingual Infinite Tree Model”

- Gibbs sampling , individual hidden state variables are resampled conditioned on all other variables.Page 5, “Bilingual Infinite Tree Model”

- Beam sampling does not suffer from slow convergence as in Gibbs sampling by sampling the whole state variables at once.Page 5, “Bilingual Infinite Tree Model”

- (2008) showed that beam sampling is more robust to initialization and hyperpa-rameter choice than Gibbs sampling .Page 5, “Bilingual Infinite Tree Model”

See all papers in Proc. ACL 2013 that mention Gibbs sampling.

See all papers in Proc. ACL that mention Gibbs sampling.

Back to top.