Article Structure

Abstract

A central challenge in semantic parsing is handling the myriad ways in which knowledge base predicates can be expressed.

Introduction

We consider the semantic parsing problem of mapping natural language utterances into logical forms to be executed on a knowledge base (KB) (Zelle and Mooney, 1996; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Kwiatkowski et al., 2010).

Setup

Our task is as follows: Given (i) a knowledge base $\mathcal{K}$, and (ii) a training set of question-answer pairs $\{(x_i, y_i)\}_{i=1}^{n}$, output a semantic parser that maps new questions $x$ to answers $y$ via latent logical forms $z$.

Model overview

We now present the general framework for semantic parsing via paraphrasing, including the model and the learning algorithm.

Canonical utterance construction

We construct canonical utterances in two steps.

Paraphrasing

Once the candidate set of logical forms paired with canonical utterances is constructed, our problem is reduced to scoring pairs $(c, z)$ based on a paraphrase model.

Empirical evaluation

In this section, we evaluate our system on WEBQUESTIONS and FREE917.

Discussion

In this work, we approach the problem of semantic parsing from a paraphrasing viewpoint.

Topics

logical forms

- Given an input utterance, we first use a simple method to deterministically generate a set of candidate logical forms with a canonical realization in natural language for each.Page 1, “Abstract”

- Then, we use a paraphrase model to choose the realization that best paraphrases the input, and output the corresponding logical form.Page 1, “Abstract”

- We consider the semantic parsing problem of mapping natural language utterances into logical forms to be executed on a knowledge base (KB) (Zelle and Mooney, 1996; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Kwiatkowski et al., 2010).Page 1, “Introduction”

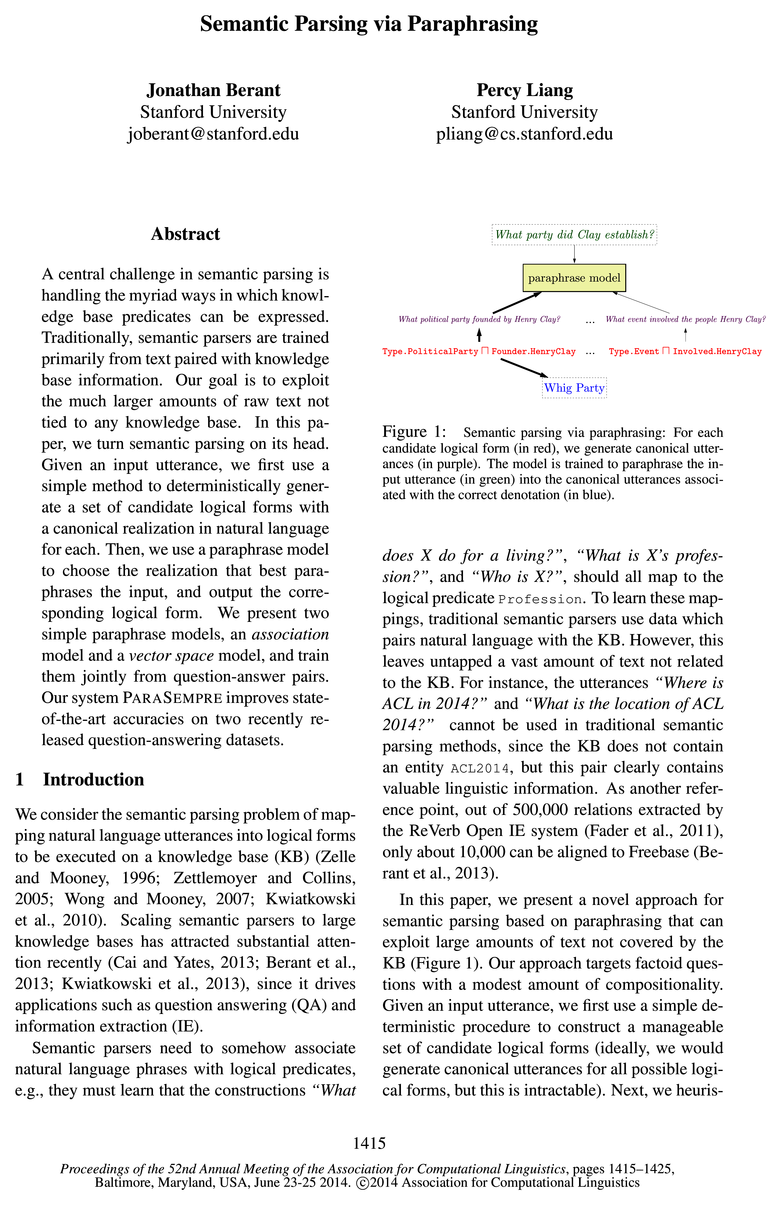

- Figure 1: Semantic parsing via paraphrasing: For each candidate logical form (in red), we generate canonical utterances (in purple).Page 1, “Introduction”

- Given an input utterance, we first use a simple deterministic procedure to construct a manageable set of candidate logical forms (ideally, we would generate canonical utterances for all possible logical forms, but this is intractable).Page 1, “Introduction”

- (a) Traditionally, semantic parsing maps utterances directly to logical forms.Page 2, “Introduction”

- (2013) map the utterance to an underspecified logical form, and perform ontology matching to handle the mismatch.Page 2, “Introduction”

- (c) We approach the problem in the other direction, generating canonical utterances for logical forms, and use paraphrase models to handle the mismatch.Page 2, “Introduction”

- tically generate canonical utterances for each logical form based on the text descriptions of predicates from the KB.Page 2, “Introduction”

- Finally, we choose the canonical utterance that best paraphrases the input utterance, and thereby the logical form that generated it.Page 2, “Introduction”

- (2013) first maps utterances to a domain-independent intermediate logical form, and then performs ontology matching to produce the final logical form.Page 2, “Introduction”
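
The selection step described in these excerpts (generate candidate logical forms with canonical utterances, then return the logical form whose utterance best paraphrases the input) can be sketched as follows. This is a minimal illustration, not the paper's system: the logical-form strings, the candidate list, and the `token_overlap` scorer are hypothetical stand-ins, and a trained paraphrase model would replace the scorer.

```python
from typing import Callable, List, Tuple

# A candidate pairs a logical form (here just a string) with one canonical utterance.
Candidate = Tuple[str, str]


def token_overlap(x: str, c: str) -> float:
    """Toy stand-in for a paraphrase model: Jaccard overlap of lowercase tokens."""
    xs, cs = set(x.lower().split()), set(c.lower().split())
    union = xs | cs
    return len(xs & cs) / len(union) if union else 0.0


def parse(utterance: str,
          candidates: List[Candidate],
          score: Callable[[str, str], float] = token_overlap) -> str:
    """Return the logical form whose canonical utterance scores highest."""
    best_form, _ = max(candidates, key=lambda zc: score(utterance, zc[1]))
    return best_form


# Hypothetical candidates; real ones would be generated from the KB.
candidates = [
    ("CurrencyOf.Italy", "what is the currency of italy"),
    ("CapitalOf.Italy", "what is the capital of italy"),
]
best = parse("what currency does italy use", candidates)
```

Keeping the scorer as a parameter mirrors the claim that the framework accommodates any paraphrasing method.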

See all papers in Proc. ACL 2014 that mention logical forms.

semantic parsing

- A central challenge in semantic parsing is handling the myriad ways in which knowledge base predicates can be expressed.Page 1, “Abstract”

- Traditionally, semantic parsers are trained primarily from text paired with knowledge base information.Page 1, “Abstract”

- In this paper, we turn semantic parsing on its head.Page 1, “Abstract”

- We consider the semantic parsing problem of mapping natural language utterances into logical forms to be executed on a knowledge base (KB) (Zelle and Mooney, 1996; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Kwiatkowski et al., 2010).Page 1, “Introduction”

- Scaling semantic parsers to large knowledge bases has attracted substantial attention recently (Cai and Yates, 2013; Berant et al., 2013; Kwiatkowski et al., 2013), since it drives applications such as question answering (QA) and information extraction (IE).Page 1, “Introduction”

- Semantic parsers need to somehow associate natural language phrases with logical predicates, e.g., they must learn that the constructions “WhatPage 1, “Introduction”

- Figure 1: Semantic parsing via paraphrasing: For each candidate logical form (in red), we generate canonical utterances (in purple).Page 1, “Introduction”

- To learn these mappings, traditional semantic parsers use data which pairs natural language with the KB.Page 1, “Introduction”

- Figure 2: The main challenge in semantic parsing is coping with the mismatch between language and the KB.Page 2, “Introduction”

- (a) Traditionally, semantic parsing maps utterances directly to logical forms.Page 2, “Introduction”

- Our work relates to recent lines of research in semantic parsing and question answering.Page 2, “Introduction”

natural language

- Given an input utterance, we first use a simple method to deterministically generate a set of candidate logical forms with a canonical realization in natural language for each.Page 1, “Abstract”

- We consider the semantic parsing problem of mapping natural language utterances into logical forms to be executed on a knowledge base (KB) (Zelle and Mooney, 1996; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Kwiatkowski et al., 2010).Page 1, “Introduction”

- Semantic parsers need to somehow associate natural language phrases with logical predicates, e.g., they must learn that the constructions “WhatPage 1, “Introduction”

- To learn these mappings, traditional semantic parsers use data which pairs natural language with the KB.Page 1, “Introduction”

- date logical forms $Z_x$, and then for each $z \in Z_x$ generate a small set of canonical natural language utterances $C_z$.Page 3, “Model overview”

- Second, natural language utterances often do not express predicates explicitly, e.g., the question “What is Italy’s money?” expresses the binary predicate CurrencyOf with a possessive construction.Page 3, “Model overview”

- Our framework accommodates any paraphrasing method, and in this paper we propose an association model that learns to associate natural language phrases that co-occur frequently in a monolingual parallel corpus, combined with a vector space model, which learns to score the similarity between vector representations of natural language utterances (Section 5).Page 3, “Model overview”

- Utterance generation: While mapping general language utterances to logical forms is hard, we observe that it is much easier to generate canonical natural language utterances of our choice given a logical form.Page 4, “Canonical utterance construction”

- use a KB over natural language extractions rather than a formal KB and so querying the KB does not require a generation step — they paraphrase questions to KB entries directly.Page 9, “Discussion”

vector space

- We present two simple paraphrase models, an association model and a vector space model, and train them jointly from question-answer pairs.Page 1, “Abstract”

- We use two complementary paraphrase models: an association model based on aligned phrase pairs extracted from a monolingual parallel corpus, and a vector space model, which represents each utterance as a vector and learns a similarity score between them.Page 2, “Introduction”

- Our framework accommodates any paraphrasing method, and in this paper we propose an association model that learns to associate natural language phrases that co-occur frequently in a monolingual parallel corpus, combined with a vector space model, which learns to score the similarity between vector representations of natural language utterances (Section 5).Page 3, “Model overview”

- The NLP paraphrase literature is vast and ranges from simple methods employing surface features (Wan et al., 2006), through vector space models (Socher et al., 2011), to latent variable models (Das and Smith, 2009; Wang and Manning, 2010; Stern and Dagan, 2011).Page 5, “Paraphrasing”

- Our paraphrase model decomposes into an association model and a vector space model:Page 5, “Paraphrasing”

- 5.2 Vector space modelPage 6, “Paraphrasing”

- We now introduce a vector space (VS) model, which assigns a vector representation for each utterance, and learns a scoring function that ranks paraphrase candidates.Page 6, “Paraphrasing”

- In summary, while the association model aligns particular phrases to one another, the vector space model provides a soft vector-based representation for utterances.Page 7, “Paraphrasing”

knowledge base

- A central challenge in semantic parsing is handling the myriad ways in which knowledge base predicates can be expressed.Page 1, “Abstract”

- Traditionally, semantic parsers are trained primarily from text paired with knowledge base information.Page 1, “Abstract”

- Our goal is to exploit the much larger amounts of raw text not tied to any knowledge base.Page 1, “Abstract”

- We consider the semantic parsing problem of mapping natural language utterances into logical forms to be executed on a knowledge base (KB) (Zelle and Mooney, 1996; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Kwiatkowski et al., 2010).Page 1, “Introduction”

- Scaling semantic parsers to large knowledge bases has attracted substantial attention recently (Cai and Yates, 2013; Berant et al., 2013; Kwiatkowski et al., 2013), since it drives applications such as question answering (QA) and information extraction (IE).Page 1, “Introduction”

- Our task is as follows: Given (i) a knowledge base $\mathcal{K}$, and (ii) a training set of question-answer pairs $\{(x_i, y_i)\}_{i=1}^{n}$, output a semantic parser that maps new questions $x$ to answers $y$ via latent logical forms $z$.Page 2, “Setup”

- A knowledge base $\mathcal{K}$ is a set of assertions $(e_1, p, e_2) \in \mathcal{E} \times \mathcal{P} \times \mathcal{E}$ (e.g., (BillGates, PlaceOfBirth, Seattle)).Page 2, “Setup”
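
As a small illustration of the definition above, a knowledge base can be held as a set of (entity, property, entity) triples and queried by matching the first two slots. The `objects` helper and the second assertion are hypothetical, added only to make the sketch runnable; only the BillGates triple comes from the quoted text.

```python
from typing import Set, Tuple

# An assertion is a triple (e1, p, e2): two entities linked by a property.
Assertion = Tuple[str, str, str]

kb: Set[Assertion] = {
    ("BillGates", "PlaceOfBirth", "Seattle"),  # example from the text
    ("BillGates", "Founded", "Microsoft"),     # hypothetical extra assertion
}


def objects(kb: Set[Assertion], e1: str, p: str) -> Set[str]:
    """Return every e2 such that (e1, p, e2) is asserted in the KB."""
    return {o for (s, pred, o) in kb if s == e1 and pred == p}


birthplace = objects(kb, "BillGates", "PlaceOfBirth")
```

Executing a logical form against the KB then amounts to composing lookups of this kind.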

development set

- We tuned the L1 regularization strength, developed features, and ran analysis experiments on the development set (averaging across random splits).Page 7, “Empirical evaluation”

- To further examine this, we ran BCFL13 on the development set, allowing it to use only predicates from logical forms suggested by our logical form construction step.Page 7, “Empirical evaluation”

- This improved oracle accuracy on the development set to 64.5%, but accuracy was 32.2%.Page 7, “Empirical evaluation”

- Table 6: Results for ablations and baselines on development set.Page 8, “Empirical evaluation”

- Error analysis: We sampled examples from the development set to examine the main reasons PARASEMPRE makes errors.Page 8, “Empirical evaluation”

phrase pairs

- We use two complementary paraphrase models: an association model based on aligned phrase pairs extracted from a monolingual parallel corpus, and a vector space model, which represents each utterance as a vector and learns a similarity score between them.Page 2, “Introduction”

- We define associations in $x$ and $c$ primarily by looking up phrase pairs in a phrase table constructed using the PARALEX corpus (Fader et al., 2013).Page 5, “Paraphrasing”

- We use the word alignments to construct a phrase table by applying the consistent phrase pair heuristic (Och and Ney, 2004) to all 5-grams.Page 6, “Paraphrasing”

- This results in a phrase table with approximately 1.3 million phrase pairs.Page 6, “Paraphrasing”

- This allows us to learn paraphrases for words that appear in our datasets but are not covered by the phrase table, and to handle nominalizations for phrase pairs such as “Who designed the game of life?” and “What game designer is the designer of the game of life?”.Page 6, “Paraphrasing”

question answering

- Scaling semantic parsers to large knowledge bases has attracted substantial attention recently (Cai and Yates, 2013; Berant et al., 2013; Kwiatkowski et al., 2013), since it drives applications such as question answering (QA) and information extraction (IE).Page 1, “Introduction”

- Our work relates to recent lines of research in semantic parsing and question answering.Page 2, “Introduction”

- While it has been shown that paraphrasing methods are useful for question answering (Harabagiu and Hickl, 2006) and relation extraction (Romano et al., 2006), this is, to the best of our knowledge, the first paper to perform semantic parsing through paraphrasing.Page 9, “Discussion”

- We believe that our approach is particularly suitable for scenarios such as factoid question answering, where the space of logical forms is somewhat constrained and a few generation rules suffice to reduce the problem to paraphrasing.Page 9, “Discussion”

- who presented a paraphrase-driven question answering system.Page 9, “Discussion”

phrase table

- We define associations in $x$ and $c$ primarily by looking up phrase pairs in a phrase table constructed using the PARALEX corpus (Fader et al., 2013).Page 5, “Paraphrasing”

- We use the word alignments to construct a phrase table by applying the consistent phrase pair heuristic (Och and Ney, 2004) to all 5-grams.Page 6, “Paraphrasing”

- This results in a phrase table with approximately 1.3 million phrase pairs.Page 6, “Paraphrasing”

- This allows us to learn paraphrases for words that appear in our datasets but are not covered by the phrase table, and to handle nominalizations for phrase pairs such as “Who designed the game of life?” and “What game designer is the designer of the game of life?”.Page 6, “Paraphrasing”
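
The phrase-table construction mentioned above can be illustrated with a simplified version of the consistent phrase pair heuristic: a source span and a target span form a pair when no alignment link leaves the pair. This sketch makes several assumptions not stated in the excerpts: “5-grams” is read as spans of at most five tokens, only the minimal target span is extracted (unaligned boundary words are not added), and the example sentences and alignment are hand-made rather than taken from PARALEX.

```python
from typing import List, Set, Tuple


def consistent_phrase_pairs(src: List[str],
                            tgt: List[str],
                            alignment: Set[Tuple[int, int]],
                            max_len: int = 5) -> Set[Tuple[str, str]]:
    """Extract phrase pairs consistent with a word alignment (simplified)."""
    pairs = set()
    for i1 in range(len(src)):
        for i2 in range(i1, min(i1 + max_len, len(src))):
            # Target positions linked to any token in the source span.
            linked = [j for (i, j) in alignment if i1 <= i <= i2]
            if not linked:
                continue
            j1, j2 = min(linked), max(linked)
            if j2 - j1 + 1 > max_len:
                continue
            # Reject if a target token in the span aligns outside the source span.
            if any(j1 <= j <= j2 and not (i1 <= i <= i2) for (i, j) in alignment):
                continue
            pairs.add((" ".join(src[i1:i2 + 1]), " ".join(tgt[j1:j2 + 1])))
    return pairs


src = "who designed the game".split()
tgt = "who is the designer of the game".split()
alignment = {(0, 0), (1, 3), (2, 5), (3, 6)}  # hand-made (source, target) links
pairs = consistent_phrase_pairs(src, tgt, alignment)
```

On this toy input the extracted pairs include the nominalization (“designed”, “designer”), which is the kind of association the quoted passage is concerned with.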

POS tag

- Deletions Deleted lemma and POS tagPage 6, “Paraphrasing”

- $x_{i:j}$ and $c_{i':j'}$ denote spans from $x$ and $c$. $\mathrm{pos}(x_{i:j})$ and $\mathrm{lemma}(x_{i:j})$ denote the POS tag and lemma sequence of $x_{i:j}$.Page 6, “Paraphrasing”

- For a pair $(x, c)$, we also consider as candidate associations the set $\mathcal{B}$ (represented implicitly), which contains token pairs $(x_i, c_{i'})$ such that $x_i$ and $c_{i'}$ share the same lemma, the same POS tag, or are linked through a derivation link on WordNet (Fellbaum, 1998).Page 6, “Paraphrasing”

- j deletion features for their lemmas and POS tags.Page 6, “Paraphrasing”
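
The candidate token-pair set described above can be sketched as a filter over all token pairs. This is an illustrative assumption-laden version: tokens arrive already annotated with hand-supplied lemmas and POS tags, the `Token` type and `candidate_token_pairs` name are hypothetical, and the WordNet derivation-link condition is left out to keep the example self-contained.

```python
from typing import List, NamedTuple, Set, Tuple


class Token(NamedTuple):
    word: str
    lemma: str
    pos: str


def candidate_token_pairs(x: List[Token], c: List[Token]) -> Set[Tuple[int, int]]:
    """Index pairs (i, i') whose tokens share a lemma or a POS tag.

    The WordNet derivation-link test from the text is omitted here.
    """
    return {
        (i, k)
        for i, xt in enumerate(x)
        for k, ct in enumerate(c)
        if xt.lemma == ct.lemma or xt.pos == ct.pos
    }


# Hand-annotated toy tokens; a real pipeline would use a tagger and lemmatizer.
x = [Token("designed", "design", "VBD"), Token("games", "game", "NNS")]
c = [Token("designer", "designer", "NN"), Token("game", "game", "NN")]
token_pairs = candidate_token_pairs(x, c)
```

Note that the pair “designed”/“designer” is not found here; linking it is exactly what the omitted derivation-link condition is for.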

vector representations

- Our framework accommodates any paraphrasing method, and in this paper we propose an association model that learns to associate natural language phrases that co-occur frequently in a monolingual parallel corpus, combined with a vector space model, which learns to score the similarity between vector representations of natural language utterances (Section 5).Page 3, “Model overview”

- We now introduce a vector space (VS) model, which assigns a vector representation for each utterance, and learns a scoring function that ranks paraphrase candidates.Page 6, “Paraphrasing”

- We start by constructing vector representations of words.Page 6, “Paraphrasing”

- We can now estimate a paraphrase score for two utterances $x$ and $c$ via a weighted combination of the components of the vector representations:Page 6, “Paraphrasing”
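
One way to read “a weighted combination of the components of the vector representations” is a bilinear score with one weight per pair of components. The sketch below takes that reading as an assumption; it also assumes that an utterance vector is the average of its word vectors, and it uses made-up two-dimensional embeddings and an identity weight matrix in place of learned parameters.

```python
from typing import Dict, List

Vector = List[float]


def utterance_vector(tokens: List[str], word_vectors: Dict[str, Vector]) -> Vector:
    """Average the vectors of the tokens that have an embedding (assumed pooling)."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    dim = len(next(iter(word_vectors.values())))
    if not known:
        return [0.0] * dim
    return [sum(col) / len(known) for col in zip(*known)]


def vs_score(vx: Vector, vc: Vector, W: List[Vector]) -> float:
    """Bilinear score: sum over i, j of W[i][j] * vx[i] * vc[j]."""
    return sum(W[i][j] * vx[i] * vc[j]
               for i in range(len(vx)) for j in range(len(vc)))


# Made-up embeddings and an identity weight matrix, for illustration only.
word_vectors = {"money": [1.0, 0.0], "currency": [0.8, 0.2], "capital": [0.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0]]

vx = utterance_vector(["italy", "money"], word_vectors)
score_currency = vs_score(vx, utterance_vector(["currency"], word_vectors), W)
score_capital = vs_score(vx, utterance_vector(["capital"], word_vectors), W)
```

With the identity matrix the score reduces to a dot product; learning `W` lets the model weight interactions between different components.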

latent variables

- Many existing paraphrase models introduce latent variables to describe the derivation of $c$ from $x$, e.g., with transformations (Heilman and Smith, 2010; Stern and Dagan, 2011) or alignments (Haghighi et al., 2005; Das and Smith, 2009; Chang et al., 2010).Page 3, “Model overview”

- However, we opt for a simpler paraphrase model without latent variables in the interest of efficiency.Page 3, “Model overview”

- The NLP paraphrase literature is vast and ranges from simple methods employing surface features (Wan et al., 2006), through vector space models (Socher et al., 2011), to latent variable models (Das and Smith, 2009; Wang and Manning, 2010; Stern and Dagan, 2011).Page 5, “Paraphrasing”

parallel corpus

- We use two complementary paraphrase models: an association model based on aligned phrase pairs extracted from a monolingual parallel corpus, and a vector space model, which represents each utterance as a vector and learns a similarity score between them.Page 2, “Introduction”

- (2013) presented a QA system that maps questions onto simple queries against Open IE extractions, by learning paraphrases from a large monolingual parallel corpus, and performing a single paraphrasing step.Page 2, “Introduction”

- Our framework accommodates any paraphrasing method, and in this paper we propose an association model that learns to associate natural language phrases that co-occur frequently in a monolingual parallel corpus, combined with a vector space model, which learns to score the similarity between vector representations of natural language utterances (Section 5).Page 3, “Model overview”
