Article Structure

Abstract

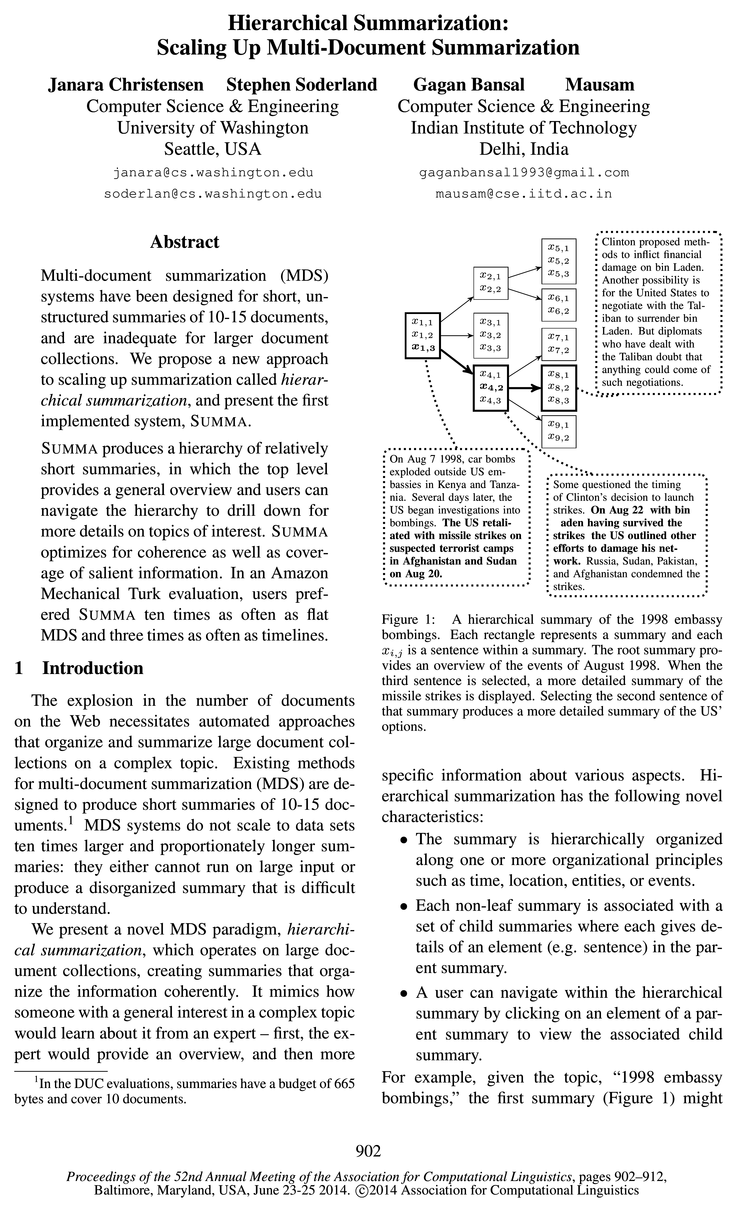

Multi-document summarization (MDS) systems have been designed for short, unstructured summaries of 10-15 documents, and are inadequate for larger document collections.

Introduction

The explosion in the number of documents on the Web necessitates automated approaches that organize and summarize large document collections on a complex topic.

Hierarchical Summarization

We propose a new task for large-scale summarization called hierarchical summarization.

Hierarchical Clustering

Having defined the task, we now describe the methodology behind our implementation, SUMMA.

Summarizing Within the Hierarchy

After the sentences are clustered, we have a structure for the hierarchical summary that dictates the number of summaries and the number of sentences

Experiments

Our experiments are designed to evaluate how effective hierarchical summarization is in summarizing a large, complex topic and how well this helps users learn about the topic.

Related Work

Traditional approaches to large-scale summarization have included flat summaries and timelines.

Conclusions

We have introduced a new paradigm for large-scale summarization called hierarchical summarization, which allows a user to navigate a hierarchy of relatively short summaries.

Topics

objective function

- SUMMA hierarchically clusters the sentences by time, and then summarizes the clusters using an objective function that optimizes salience and coherence. (Page 2, “Introduction”)

- 4.4 Objective Function (Page 5, “Summarizing Within the Hierarchy”)

- Having estimated salience, redundancy, and two forms of coherence, we can now put this information together into a single objective function that measures the quality of a candidate hierarchical summary. (Page 5, “Summarizing Within the Hierarchy”)

- Intuitively, the objective function should balance salience and coherence. (Page 5, “Summarizing Within the Hierarchy”)

- Optimizing this objective function is NP-hard, so we approximate a solution by using beam search over the space of partial hierarchical summaries. (Page 5, “Summarizing Within the Hierarchy”)
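
The beam search mentioned in the last excerpt can be illustrated with a minimal sketch. This is not SUMMA's actual objective or search procedure: the function names, the toy salience values, the pairwise coherence weights, and the additive score are all assumptions chosen only to show how a beam keeps the best few partial summaries at each step instead of enumerating every ordering.

```python
from typing import Callable, List, Tuple

Summary = Tuple[int, ...]  # ordered sentence indices


def beam_search_summary(
    n_sentences: int,
    score: Callable[[Summary], float],
    summary_len: int,
    beam_width: int = 3,
) -> Summary:
    """Approximately maximize `score` over ordered summaries of `summary_len` sentences."""
    beam: List[Summary] = [()]  # start from the empty partial summary
    for _ in range(summary_len):
        # Extend every partial summary in the beam by one unused sentence.
        candidates = [
            partial + (i,)
            for partial in beam
            for i in range(n_sentences)
            if i not in partial
        ]
        if not candidates:
            break
        # Keep only the `beam_width` best partial summaries.
        candidates.sort(key=score, reverse=True)
        beam = candidates[:beam_width]
    return max(beam, key=score)


# Hypothetical toy inputs (not taken from the paper).
salience = [0.9, 0.2, 0.7, 0.4]
coherence = {(0, 2): 0.5, (2, 3): 0.3, (0, 1): -0.4}


def toy_score(summary: Summary) -> float:
    """Assumed objective: total salience plus coherence of adjacent pairs."""
    sal = sum(salience[i] for i in summary)
    coh = sum(coherence.get(pair, 0.0) for pair in zip(summary, summary[1:]))
    return sal + coh


best = beam_search_summary(len(salience), toy_score, summary_len=3)
print(best, round(toy_score(best), 2))
```

With these toy numbers the search selects sentences 0, 2, 3 in that order. A wider beam explores more partial summaries at higher cost; a beam of width 1 reduces to greedy selection.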

See all papers in Proc. ACL 2014 that mention objective function.

Amazon Mechanical Turk

- In an Amazon Mechanical Turk evaluation, users preferred SUMMA ten times as often as flat MDS and three times as often as timelines. (Page 1, “Abstract”)

- We conducted an Amazon Mechanical Turk (AMT) evaluation where AMT workers compared the output of SUMMA to that of timelines and flat summaries. (Page 2, “Introduction”)

- We hired Amazon Mechanical Turk (AMT) workers and assigned two topics to each worker. (Page 6, “Experiments”)

coreference

- An edge from sentence s_i to s_j with positive weight indicates that s_j may follow s_i in a coherent summary, e.g., continued mention of an event or entity, or a coreference link between s_i and s_j. (Page 5, “Summarizing Within the Hierarchy”)

- A negative edge indicates an unfulfilled discourse cue or coreference mention. (Page 5, “Summarizing Within the Hierarchy”)

- These are coreference mentions or discourse cues where none of the sentences read before (either in an ancestor summary or in the current summary) contain an antecedent: (Page 5, “Summarizing Within the Hierarchy”)
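
The two ideas in these excerpts, weighted edges between adjacent sentences and mentions whose antecedent has not yet been read, can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's formulation: the edge weights, the sentence identifiers, and the `needs_antecedent` mapping (each dependent sentence points to the single sentence holding its antecedent) are hypothetical.

```python
from typing import Dict, Iterable, List, Sequence, Tuple


def coherence_score(
    order: Sequence[str],
    edge_weight: Dict[Tuple[str, str], float],
) -> float:
    """Sum the (assumed) edge weights between consecutive sentences."""
    return sum(edge_weight.get((a, b), 0.0) for a, b in zip(order, order[1:]))


def unresolved_mentions(
    order: Sequence[str],
    needs_antecedent: Dict[str, str],
    ancestors_read: Iterable[str] = (),
) -> List[str]:
    """Return sentences whose antecedent sentence was not read before them.

    `ancestors_read` stands for sentences already seen in ancestor summaries.
    """
    seen = set(ancestors_read)
    unresolved = []
    for sentence in order:
        required = needs_antecedent.get(sentence)
        if required is not None and required not in seen:
            unresolved.append(sentence)
        seen.add(sentence)
    return unresolved


# Hypothetical example: s3 contains a pronoun whose antecedent is in s1.
edges = {("s1", "s2"): 1.0, ("s2", "s3"): 0.5}
needs = {"s3": "s1"}

print(coherence_score(["s1", "s2", "s3"], edges))
print(unresolved_mentions(["s2", "s3"], needs))
print(unresolved_mentions(["s2", "s3"], needs, ancestors_read=["s1"]))
```

In the second call, s3 is flagged because s1 was never read; in the third, s1 appears in an ancestor summary, so nothing is flagged. A full objective could penalize each flagged sentence, which is one way to realize the negative edges described above.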
