Article Structure

Abstract

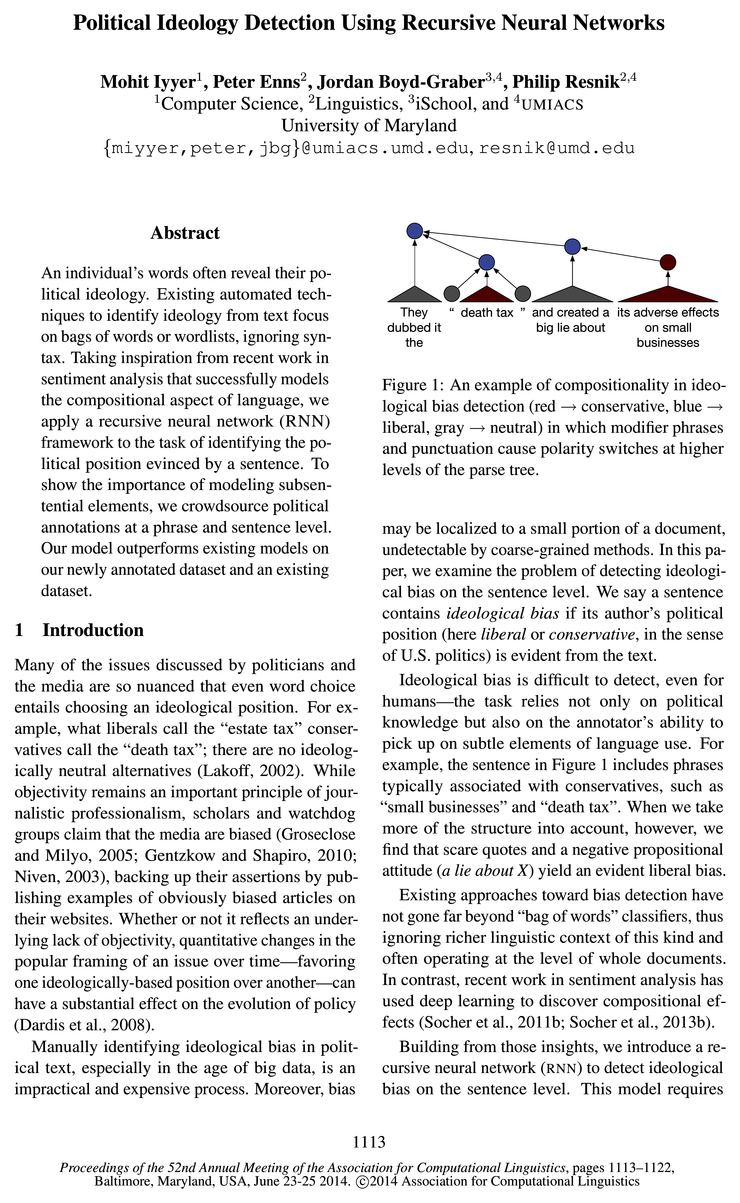

An individual’s words often reveal their political ideology.

Introduction

Many of the issues discussed by politicians and the media are so nuanced that even word choice entails choosing an ideological position.

Recursive Neural Networks

Recursive neural networks (RNNs) are machine learning models that capture syntactic and semantic composition.

Datasets

We performed initial experiments on a dataset of Congressional debates that has annotations on the author level for partisanship, not ideology.

Experiments

In this section we describe our experimental framework.

Where Compositionality Helps Detect Ideological Bias

In this section, we examine the RNN models to see why they improve over our baselines.

Related Work

A growing NLP subfield detects private states such as opinions, sentiment, and beliefs (Wilson et al., 2005; Pang and Lee, 2008) from text.

Conclusion

In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica.

Topics

bag-of-words

- Experimental Results: Table 1 shows the RNN models outperforming the bag-of-words baselines as well as the word2vec baseline on both datasets. (Page 7, “Where Compositionality Helps Detect Ideological Bias”)

- We obtain better results on Convote than on IBC with both bag-of-words and RNN models. (Page 7, “Where Compositionality Helps Detect Ideological Bias”)

- In general, work in this category tends to combine traditional surface lexical modeling (e.g., bag-of-words) with hand-designed syntactic features or lexicons. (Page 7, “Related Work”)

- Most previous work on ideology detection ignores the syntactic structure of the language in use in favor of familiar bag-of-words representations for (Page 7, “Related Work”)

- E.g., Gerrish and Blei (2011) predict the voting patterns of Congress members based on bag-of-words representations of bills and inferred political leanings of those members. (Page 8, “Related Work”)

- (2013) detect biased words in sentences using indicator features for bias cues such as hedges and factive verbs in addition to standard bag-of-words and part-of-speech features. (Page 8, “Related Work”)

- In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica. (Page 9, “Conclusion”)

sentence-level

- They have achieved state-of-the-art performance on a variety of sentence-level NLP tasks, including sentiment analysis, paraphrase detection, and parsing (Socher et al., 2011a; Hermann and Blunsom, 2013). (Page 2, “Recursive Neural Networks”)

- Each of these models has the same task: to predict sentence-level ideology labels for sentences in a test set. (Page 5, “Experiments”)

- Table 1: Sentence-level bias detection accuracy. (Page 6, “Experiments”)

- RNN1 initializes all parameters randomly and uses only sentence-level labels for training. (Page 6, “Experiments”)

- RNN1-(W2V) uses the word2vec initialization described in Section 2.2 but is also trained on only sentence-level labels. (Page 6, “Experiments”)

- Finally, combining sentence-level and document-level models might improve bias detection at both levels. (Page 9, “Related Work”)

embeddings

- The word2vec embeddings have linear relationships (e.g., the closest vectors to the average of (Page 3, “Recursive Neural Networks”)

- LR-(W2V) is a logistic regression model trained on the average of the pretrained word embeddings for each sentence (Section 2.2). (Page 6, “Experiments”)

- RNN2-(W2V) is initialized using word2vec embeddings and also includes annotated phrase labels in its training. (Page 6, “Experiments”)

- Initializing the RNN $W_e$ matrix with word2vec embeddings improves accuracy over random initialization by 1%. (Page 7, “Where Compositionality Helps Detect Ideological Bias”)

logistic regression

- LR1, our most basic logistic regression baseline, uses only bag of words (BOW) features. (Page 6, “Experiments”)

- LR-(W2V) is a logistic regression model trained on the average of the pretrained word embeddings for each sentence (Section 2.2). (Page 6, “Experiments”)

- The RNN framework, adding phrase-level data, and initializing with word2vec all improve performance over logistic regression baselines. (Page 6, “Experiments”)

- We train an $\ell_2$-regularized logistic regression model over these concatenated vectors to obtain final accuracy numbers on the sentence level. (Page 6, “Experiments”)
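The LR-(W2V) baseline quoted above uses, as features, the average of the pretrained word embeddings in each sentence. A minimal sketch of that feature step follows. The function name `average_embedding`, the toy two-dimensional vectors, and the choice to skip out-of-vocabulary tokens are assumptions made for illustration and are not taken from the paper.

```python
def average_embedding(tokens, embeddings, dim):
    """Average the embedding vectors of the tokens in one sentence.

    Tokens missing from `embeddings` are skipped (an assumption); a sentence
    with no known tokens maps to the zero vector.
    """
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        return [0.0] * dim
    # zip(*vectors) iterates over dimensions, so each `column` holds one
    # coordinate from every word vector in the sentence.
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Hypothetical toy embeddings; real word2vec vectors have hundreds of dimensions.
toy_embeddings = {
    "tax": [1.0, 0.0],
    "relief": [0.0, 1.0],
    "death": [1.0, 1.0],
}

features = average_embedding(["tax", "relief", "unknown"], toy_embeddings, dim=2)
```

The resulting fixed-length vector could then be passed to any logistic regression implementation as the sentence's feature vector.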

neural network

- Taking inspiration from recent work in sentiment analysis that successfully models the compositional aspect of language, we apply a recursive neural network (RNN) framework to the task of identifying the political position evinced by a sentence. (Page 1, “Abstract”)

- Building from those insights, we introduce a recursive neural network (RNN) to detect ideological bias on the sentence level. (Page 1, “Introduction”)

- Recursive neural networks (RNNs) are machine learning models that capture syntactic and semantic composition. (Page 2, “Recursive Neural Networks”)

- In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica. (Page 9, “Conclusion”)

recursive

- Taking inspiration from recent work in sentiment analysis that successfully models the compositional aspect of language, we apply a recursive neural network (RNN) framework to the task of identifying the political position evinced by a sentence. (Page 1, “Abstract”)

- Building from those insights, we introduce a recursive neural network (RNN) to detect ideological bias on the sentence level. (Page 1, “Introduction”)

- Recursive neural networks (RNNs) are machine learning models that capture syntactic and semantic composition. (Page 2, “Recursive Neural Networks”)

- In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica. (Page 9, “Conclusion”)

recursive neural

- Taking inspiration from recent work in sentiment analysis that successfully models the compositional aspect of language, we apply a recursive neural network (RNN) framework to the task of identifying the political position evinced by a sentence. (Page 1, “Abstract”)

- Building from those insights, we introduce a recursive neural network (RNN) to detect ideological bias on the sentence level. (Page 1, “Introduction”)

- Recursive neural networks (RNNs) are machine learning models that capture syntactic and semantic composition. (Page 2, “Recursive Neural Networks”)

- In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica. (Page 9, “Conclusion”)

recursive neural network

- Taking inspiration from recent work in sentiment analysis that successfully models the compositional aspect of language, we apply a recursive neural network (RNN) framework to the task of identifying the political position evinced by a sentence. (Page 1, “Abstract”)

- Building from those insights, we introduce a recursive neural network (RNN) to detect ideological bias on the sentence level. (Page 1, “Introduction”)

- Recursive neural networks (RNNs) are machine learning models that capture syntactic and semantic composition. (Page 2, “Recursive Neural Networks”)

- In this paper we apply recursive neural networks to political ideology detection, a problem where previous work relies heavily on bag-of-words models and hand-designed lexica. (Page 9, “Conclusion”)

sentiment analysis

- Taking inspiration from recent work in sentiment analysis that successfully models the compositional aspect of language, we apply a recursive neural network (RNN) framework to the task of identifying the political position evinced by a sentence. (Page 1, “Abstract”)

- In contrast, recent work in sentiment analysis has used deep learning to discover compositional effects (Socher et al., 2011b; Socher et al., 2013b). (Page 1, “Introduction”)

- They have achieved state-of-the-art performance on a variety of sentence-level NLP tasks, including sentiment analysis, paraphrase detection, and parsing (Socher et al., 2011a; Hermann and Blunsom, 2013). (Page 2, “Recursive Neural Networks”)

- They use syntactic dependency relation features combined with lexical information to achieve then state-of-the-art performance on standard sentiment analysis datasets. (Page 9, “Related Work”)

word embedding

- The word-level vectors $x_a$ and $x_b$ come from a $d \times V$ dimensional word embedding matrix $W_e$, where $V$ is the size of the vocabulary. (Page 2, “Recursive Neural Networks”)

- Random: The most straightforward choice is to initialize the word embedding matrix $W_e$ and composition matrices $W_L$ and $W_R$ randomly such that without any training, representations for words and phrases are arbitrarily projected into the vector space. (Page 3, “Recursive Neural Networks”)

- word2vec: The other alternative is to initialize the word embedding matrix $W_e$ with values that reflect the meanings of the associated word types. (Page 3, “Recursive Neural Networks”)

- LR-(W2V) is a logistic regression model trained on the average of the pretrained word embeddings for each sentence (Section 2.2). (Page 6, “Experiments”)
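To make the roles of the word embedding matrix and the two composition matrices concrete, here is a minimal sketch of one composition step with random initialization. The excerpts above do not give the composition formula, so the tanh form `x_p = tanh(W_L x_a + W_R x_b + b)` is an assumption borrowed from the standard recursive neural network formulation. The helper names, the bias vector `b`, the initialization range, and the toy sizes are likewise hypothetical.

```python
import math
import random


def init_matrix(rows, cols, rng, scale=0.1):
    """Random initialization: small uniform values (the range is an assumption)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def embed(W_e, word_index):
    """Look up a word vector as one column of the d x V embedding matrix."""
    return [row[word_index] for row in W_e]


def compose(W_L, W_R, b, x_a, x_b):
    """Combine two child vectors into a parent phrase vector of the same size."""
    left = matvec(W_L, x_a)
    right = matvec(W_R, x_b)
    return [math.tanh(l + r + bias) for l, r, bias in zip(left, right, b)]


d, V = 4, 3                      # toy embedding size and vocabulary size
rng = random.Random(0)           # fixed seed so the sketch is reproducible
W_e = init_matrix(d, V, rng)     # word embedding matrix (d x V)
W_L = init_matrix(d, d, rng)     # left composition matrix (d x d)
W_R = init_matrix(d, d, rng)     # right composition matrix (d x d)
b = [0.0] * d                    # bias term (assumed)

x_a = embed(W_e, 0)
x_b = embed(W_e, 1)
x_p = compose(W_L, W_R, b, x_a, x_b)   # vector for the phrase formed by both words
```

Because the parent vector has the same dimension as its children, the same `compose` step can be applied again higher in the parse tree. Under the word2vec option, only the construction of `W_e` would change.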

bigram

- Their features come from the Linguistic Inquiry and Word Count lexicon (LIWC) (Pennebaker et al., 2001), as well as from lists of “sticky bigrams” (Brown et al., 1992) strongly associated with one party or another (e.g., “illegal aliens” implies conservative, “universal healthcare” implies liberal). (Page 4, “Datasets”)

- We first extract the subset of sentences that contains any words in the LIWC categories of Negative Emotion, Positive Emotion, Causation, Anger, and Kill verbs. After computing a list of the top 100 sticky bigrams for each category, ranked by log-likelihood ratio, and selecting another subset from the original data that included only sentences containing at least one sticky bigram, we take the union of the two subsets. (Page 4, “Datasets”)

- They use an HMM-based model, defining the states as a set of fine-grained political ideologies, and rely on a closed set of lexical bigram features associated with each ideology, inferred from a manually labeled ideological books corpus. (Page 8, “Related Work”)
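The sentence-filtering step quoted from the Datasets section (keep a sentence if it contains a lexicon word or a sticky bigram, then take the union) can be sketched as below. This is only an illustration: the whitespace tokenization, the function names, and the one-word lexicon are assumptions. The lexicon word is not a real LIWC entry, and the log-likelihood ranking that produces the bigram list is not shown. The two bigrams are the examples given in the excerpt.

```python
def bigrams(tokens):
    """Return the set of adjacent token pairs in a sentence."""
    return set(zip(tokens, tokens[1:]))


def select_sentences(sentences, lexicon_words, sticky_bigrams):
    """Keep sentences with at least one lexicon word OR one sticky bigram.

    Testing both conditions per sentence is equivalent to building the two
    subsets separately and taking their union.
    """
    selected = []
    for sentence in sentences:
        tokens = sentence.lower().split()  # naive tokenization (assumption)
        has_lexicon_word = any(token in lexicon_words for token in tokens)
        has_sticky_bigram = not bigrams(tokens).isdisjoint(sticky_bigrams)
        if has_lexicon_word or has_sticky_bigram:
            selected.append(sentence)
    return selected


# Hypothetical stand-ins for the lexicon categories and the ranked bigram lists.
lexicon_words = {"hate"}
sticky_bigrams = {("illegal", "aliens"), ("universal", "healthcare")}

corpus = [
    "They hate this bill",
    "We need universal healthcare now",
    "The committee met on Tuesday",
]
filtered = select_sentences(corpus, lexicon_words, sticky_bigrams)
```

Here `filtered` keeps the first two sentences and drops the third, which matches neither filter.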

objective function

- This induces a supervised objective function over all sentences: a regularized sum over all node losses normalized by the number of nodes $N$ in the training set, (Page 3, “Recursive Neural Networks”)

- Due to this discrepancy, the objective function in Eq. (Page 5, “Datasets”)

- For this model, we also introduce a hyperparameter $\beta$ that weights the error at annotated nodes ($1 - \beta$) higher than the error at unannotated nodes ($\beta$); since we have more confidence in the annotated labels, we want them to contribute more towards the objective function. (Page 6, “Experiments”)
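The weighting described above can be sketched as a small loss function: each node's error is scaled by one weight if the node is annotated and by the complementary weight otherwise, the sum is divided by the number of nodes, and a regularization term is added. The excerpts do not specify the per-node loss or the regularizer, so cross-entropy and an L2 penalty with strength `lam` are assumptions here, as are all function and variable names.

```python
import math


def node_loss(probs, gold):
    """Cross-entropy loss at one node (assumed form of the per-node error)."""
    return -math.log(probs[gold])


def weighted_objective(nodes, beta, params, lam):
    """Regularized, node-normalized sum of weighted node losses.

    nodes:  list of (predicted_probs, gold_label, is_annotated) triples
    beta:   weight for unannotated nodes; annotated nodes get 1 - beta
    params: flat list of model parameters, used only by the L2 penalty
    lam:    regularization strength (hypothetical name)
    """
    if not nodes:
        raise ValueError("need at least one node")
    total = 0.0
    for probs, gold, is_annotated in nodes:
        weight = (1.0 - beta) if is_annotated else beta
        total += weight * node_loss(probs, gold)
    l2_penalty = 0.5 * lam * sum(p * p for p in params)
    return total / len(nodes) + l2_penalty


# Two toy nodes with uniform predictions over two labels.
toy_nodes = [
    ([0.5, 0.5], 0, True),    # annotated node
    ([0.5, 0.5], 1, False),   # unannotated node
]
objective = weighted_objective(toy_nodes, beta=0.3, params=[], lam=0.0)
```

With `beta` below 0.5, an error at an annotated node costs more than the same error at an unannotated node, which is the behaviour the excerpt describes.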

parse tree

- Figure 1: An example of compositionality in ideological bias detection (red → conservative, blue → liberal, gray → neutral) in which modifier phrases and punctuation cause polarity switches at higher levels of the parse tree. (Page 1, “Introduction”)

- Based on a parse tree, these words form phrases p (Figure 2). (Page 2, “Recursive Neural Networks”)

- The increased accuracy suggests that the trained RNNs are capable of detecting bias polarity switches at higher levels in parse trees. (Page 7, “Where Compositionality Helps Detect Ideological Bias”)

vector space

- If an element of this vector space, $x_d$, represents a sentence with liberal bias, its vector should be distinct from the vector $x_r$ of a conservative-leaning sentence. (Page 2, “Recursive Neural Networks”)

- Random: The most straightforward choice is to initialize the word embedding matrix $W_e$ and composition matrices $W_L$ and $W_R$ randomly such that without any training, representations for words and phrases are arbitrarily projected into the vector space. (Page 3, “Recursive Neural Networks”)

- vector space by listing the most probable n-grams for each political affiliation in Table 2. (Page 7, “Where Compositionality Helps Detect Ideological Bias”)
