Article Structure

Abstract

Code-switched documents are common in social media, providing evidence for polylingual topic models to infer aligned topics across languages.

Introduction

Topic models (Blei et al., 2003) have become standard tools for analyzing document collections, and topic analyses are quite common for social media (Paul and Dredze, 2011; Zhao et al., 2011; Hong and Davison, 2010; Ramage et al., 2010; Eisenstein et al., 2010).

Code-Switching

Code-switched documents have received considerable attention in the NLP community.

Topics

LDA

- We present Code-Switched LDA (csLDA), which infers language-specific topic distributions based on code-switched documents to facilitate multilingual corpus analysis. Page 1, “Abstract”

- We experiment on two code-switching corpora (English-Spanish Twitter data and English-Chinese Weibo data) and show that csLDA improves perplexity over LDA and learns semantically coherent aligned topics as judged by human annotators. Page 1, “Abstract”

- We call the resulting model Code-Switched LDA (csLDA). Page 3, “Code-Switching”

- 3.1 Inference: Inference for csLDA follows directly from LDA. Page 3, “Code-Switching”

- Instead, we constructed a baseline from LDA run on the entire dataset (no … Page 4, “Code-Switching”

- Since csLDA duplicates topic distributions ($T \times L$), we used twice as many topics for LDA. Page 4, “Code-Switching”

- Figure 3 shows test perplexity for varying $T$ and perplexity for the best setting of csLDA ($T = 60$) and LDA ($T = 120$). Page 4, “Code-Switching”

- The table lists both monolingual and code-switched test data; csLDA improves over LDA in almost every case, and across all values of $T$. Page 4, “Code-Switching”

- The background distribution (-bg) has mixed results for LDA, whereas for csLDA it shows consistent improvement. Page 4, “Code-Switching”

- LDA may learn comparable topics in different languages but gives no explicit alignments. Page 4, “Code-Switching”

- We create alignments by classifying each LDA topic by language using the KL-divergence between the topic’s word distribution and a word distribution for the English/foreign language inferred from the monolingual documents. Page 4, “Code-Switching”
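
The KL-based language classification of baseline LDA topics described in the last excerpt can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function names, the toy vocabulary, the smoothing constant `eps`, and the direction of the divergence (topic relative to language) are all assumptions made here for the example.

```python
import numpy as np

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same vocabulary.

    eps is an assumed smoothing constant that avoids log(0).
    """
    p = np.asarray(p, dtype=float) + eps
    q = np.asarray(q, dtype=float) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))

def classify_topic_language(topic_word_dist, language_word_dists):
    """Label a topic with the language whose word distribution is closest in KL."""
    scores = {
        lang: kl_divergence(topic_word_dist, dist)
        for lang, dist in language_word_dists.items()
    }
    return min(scores, key=scores.get), scores

# Hypothetical shared vocabulary: ["game", "team", "juego", "equipo"].
# In practice these would be estimated from the monolingual documents.
language_word_dists = {
    "en": [0.45, 0.45, 0.05, 0.05],
    "es": [0.05, 0.05, 0.45, 0.45],
}
topic = [0.10, 0.05, 0.50, 0.35]  # toy topic dominated by Spanish words

label, scores = classify_topic_language(topic, language_word_dists)
print(label, scores)
```

Once every topic carries a language label, topics with different labels can be paired to form candidate alignments; the pairing criterion itself is not given in the excerpts above.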

See all papers in Proc. ACL 2014 that mention LDA.

social media

- Code-switched documents are common in social media, providing evidence for polylingual topic models to infer aligned topics across languages. Page 1, “Abstract”

- Topic models (Blei et al., 2003) have become standard tools for analyzing document collections, and topic analyses are quite common for social media (Paul and Dredze, 2011; Zhao et al., 2011; Hong and Davison, 2010; Ramage et al., 2010; Eisenstein et al., 2010). Page 1, “Introduction”

- In social media especially, there is a large diversity in terms of both the topic and language, necessitating the modeling of multiple languages simultaneously. Page 1, “Introduction”

- However, the ever-changing vocabulary and topics of social media (Eisenstein, 2013) make finding suitable comparable corpora difficult. Page 1, “Introduction”

- Standard techniques, such as relying on machine translation parallel corpora or comparable documents extracted from Wikipedia in different languages, fail to capture the specific terminology of social media. Page 1, “Introduction”

- The result: an inability to train polylingual models on social media. Page 1, “Introduction”

- In this paper, we offer a solution: utilize code-switched social media to discover correlations across languages. Page 1, “Introduction”

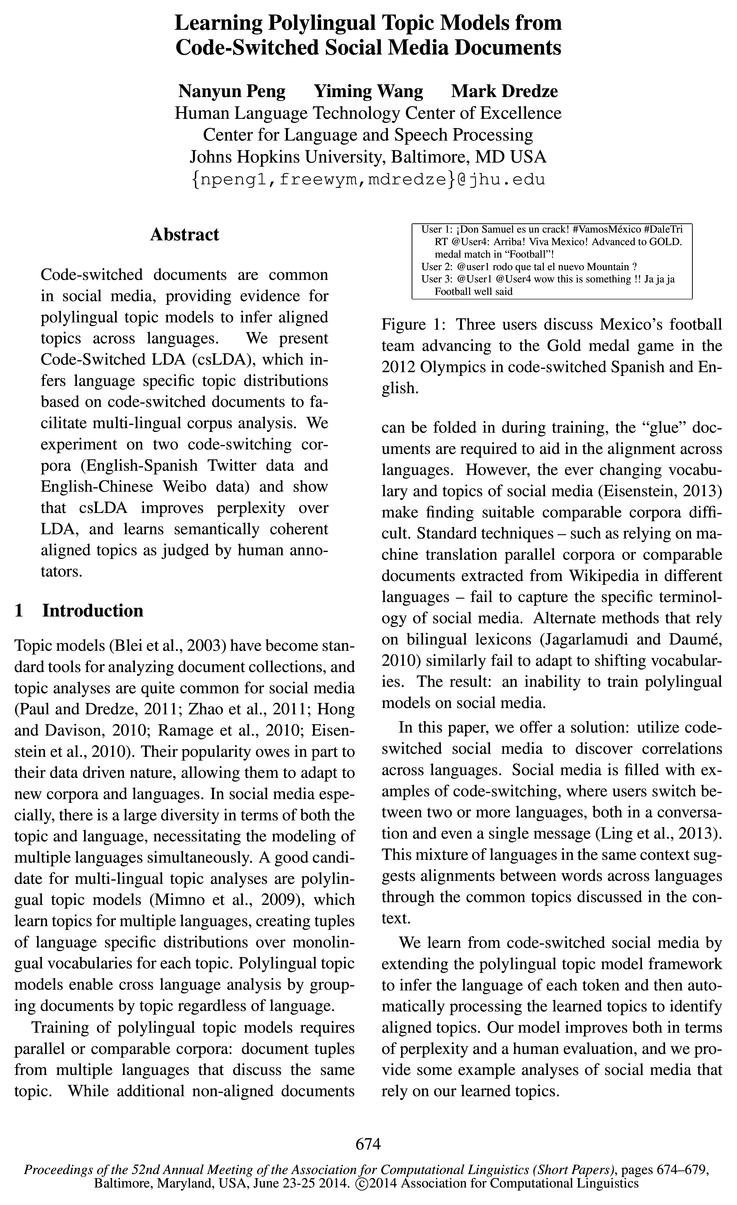

- Social media is filled with examples of code-switching, where users switch between two or more languages, both in a conversation and even a single message (Ling et al., 2013). Page 1, “Introduction”

- We learn from code-switched social media by extending the polylingual topic model framework to infer the language of each token and then automatically processing the learned topics to identify aligned topics. Page 1, “Introduction”

- Our model improves both in terms of perplexity and a human evaluation, and we provide some example analyses of social media that rely on our learned topics. Page 1, “Introduction”

- Code-switching specifically in social media has also received some recent attention. Page 2, “Code-Switching”

topic model

- Code-switched documents are common in social media, providing evidence for polylingual topic models to infer aligned topics across languages. Page 1, “Abstract”

- Topic models (Blei et al., 2003) have become standard tools for analyzing document collections, and topic analyses are quite common for social media (Paul and Dredze, 2011; Zhao et al., 2011; Hong and Davison, 2010; Ramage et al., 2010; Eisenstein et al., 2010). Page 1, “Introduction”

- Good candidates for multilingual topic analyses are polylingual topic models (Mimno et al., 2009), which learn topics for multiple languages, creating tuples of language-specific distributions over monolingual vocabularies for each topic. Page 1, “Introduction”

- Polylingual topic models enable cross-language analysis by grouping documents by topic regardless of language. Page 1, “Introduction”

- Training of polylingual topic models requires parallel or comparable corpora: document tuples from multiple languages that discuss the same topic. Page 1, “Introduction”

- We learn from code-switched social media by extending the polylingual topic model framework to infer the language of each token and then automatically processing the learned topics to identify aligned topics. Page 1, “Introduction”

- By collecting the entire conversation into a single document we provide the topic model with additional content. Page 2, “Code-Switching”

- To train a polylingual topic model on social media, we make two modifications to the model of Mimno et al. Page 2, “Code-Switching”

- First, polylingual topic models require parallel or comparable corpora in which each document has an assigned language. Page 2, “Code-Switching”

- To address the lack of available LID systems, we add a per-token latent language variable to the polylingual topic model. Page 2, “Code-Switching”

- Second, polylingual topic models assume the aligned topics are from parallel or comparable corpora, which implicitly assumes that a topic’s popularity is balanced across languages. Page 2, “Code-Switching”

Chinese-English

- In this work we consider two types of code-switched documents (single messages and conversations) and two language pairs (Chinese-English and Spanish-English). Page 2, “Code-Switching”

- An example of a Chinese-English code-switched message is given by Ling et al. Page 2, “Code-Switching”

- We used two datasets: a Sina Weibo Chinese-English corpus (Ling et al., 2013) and a Spanish-English Twitter corpus. Page 4, “Code-Switching”

- Ling et al. (2013) extracted over 1m Chinese-English parallel segments from Sina Weibo, which are code-switched messages. Page 4, “Code-Switching”

topic distributions

- We present Code-Switched LDA (csLDA), which infers language-specific topic distributions based on code-switched documents to facilitate multilingual corpus analysis. Page 1, “Abstract”

- Our solution is to automatically identify aligned polylingual topics after learning by examining a topic’s distribution across code-switched documents. Page 2, “Code-Switching”

- Draw a topic distribution $\theta_d \sim \mathrm{Dir}(\alpha)$; draw a language distribution $\psi_d \sim \mathrm{Dir}(\gamma)$; for each token $i \in d$: draw a topic $z_i \sim \theta_d$, draw a language $l_i \sim \psi_d$, and draw a word $w_i \sim \phi^{l_i}_{z_i}$. For monolingual documents, we fix $l_i$ to the LID tag for all tokens. Page 3, “Code-Switching”

- Since csLDA duplicates topic distributions ($T \times L$), we used twice as many topics for LDA. Page 4, “Code-Switching”
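
The per-document generative steps quoted in this list (a topic distribution, a language distribution, then a topic, language, and word per token) can be simulated with a short sketch. It is illustrative only: the function name `sample_document`, the toy sizes, the symmetric priors, and the use of one vocabulary size for both languages are assumptions made for the example and are not taken from the paper.

```python
import numpy as np

def sample_document(n_tokens, alpha, gamma, phi, rng, lid_tag=None):
    """Sample (topic, language, word) triples for one document.

    alpha: Dirichlet prior over topics, shape (T,)
    gamma: Dirichlet prior over languages, shape (L,)
    phi:   word distributions, shape (L, T, V); phi[l, z] is the distribution
           over language l's vocabulary for topic z
    lid_tag: if given, the document is treated as monolingual and every
             token's language is fixed to this value
    """
    theta = rng.dirichlet(alpha)  # per-document topic distribution
    psi = rng.dirichlet(gamma)    # per-document language distribution
    tokens = []
    for _ in range(n_tokens):
        z = rng.choice(len(alpha), p=theta)            # topic for this token
        if lid_tag is not None:
            lang = lid_tag                             # fixed by the LID tag
        else:
            lang = rng.choice(len(gamma), p=psi)       # latent language
        w = rng.choice(phi.shape[2], p=phi[lang, z])   # word id in that language
        tokens.append((int(z), int(lang), int(w)))
    return tokens

# Toy setup (assumed sizes): 3 topics, 2 languages, 5 word types per language.
rng = np.random.default_rng(0)
T, L, V = 3, 2, 5
alpha = np.full(T, 0.5)
gamma = np.full(L, 0.5)
phi = rng.dirichlet(np.ones(V), size=(L, T))  # shape (L, T, V)

code_switched_doc = sample_document(8, alpha, gamma, phi, rng)
monolingual_doc = sample_document(8, alpha, gamma, phi, rng, lid_tag=0)
print(code_switched_doc)
print(monolingual_doc)
```

Because each topic index `z` selects one word distribution per language, the model holds $T \times L$ word distributions in total, which matches the excerpt's reason for giving the LDA baseline twice as many topics in the two-language setting.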

hyperparameters

- We use asymmetric Dirichlet priors (Wallach et al., 2009), and let the optimization process learn the hyperparameters. Page 3, “Code-Switching”

- We optimize the hyperparameters $\alpha$, $\beta$, $\gamma$ and $\delta$ by interleaving sampling iterations with a Newton-Raphson update to obtain the MLE for the hyperparameters. Page 3, “Code-Switching”

- Where $H$ is the Hessian matrix and $\partial \mathcal{L} / \partial \alpha$ is the gradient of the likelihood function with respect to the hyperparameter being optimized. Page 3, “Code-Switching”
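
For reference, a Newton-Raphson step of the kind these excerpts describe has the following textbook form. The equation itself is not reproduced in the excerpts, so this is the generic update rather than the paper's exact expression; the symbol $\mathcal{L}$ for the likelihood and the choice of $\alpha$ as the hyperparameter being updated are assumptions.

```latex
\alpha^{\text{new}} = \alpha^{\text{old}} - H^{-1} \frac{\partial \mathcal{L}}{\partial \alpha},
\qquad
H_{jk} = \frac{\partial^{2} \mathcal{L}}{\partial \alpha_j \, \partial \alpha_k}
```

The same step would apply to each of the other hyperparameters in turn, with the gradient and Hessian taken with respect to that hyperparameter.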
