Article Structure

Abstract

Distant supervision usually utilizes only unlabeled data and existing knowledge bases to learn relation extraction models.

Introduction

Relation extraction is the task of tagging semantic relations between pairs of entities from free text.

The Challenge

Simply taking the union of the hand-labeled data and the corpus labeled by distant supervision is not effective since hand-labeled data will be swamped by a larger amount of distantly labeled data.

Guided DS

Our goal is to jointly model human-labeled ground truth and structured data from a knowledge base in distant supervision.

Training

We use a hard expectation maximization algorithm to train the model.

Available at http://nlp. stanford.edu/software/mimlre. shtml.

Precision

Conclusions and Future Work

We show that relation extractors trained with distant supervision can benefit significantly from a small number of human labeled examples.

Topics

distant supervision

- Distant supervision usually utilizes only unlabeled data and existing knowledge bases to learn relation extraction models.Page 1, “Abstract”

- In this paper, we demonstrate how a state-of-the-art multi-instance multi-label model can be modified to make use of these reliable sentence-level labels in addition to the relation-level distant supervision from a database.Page 1, “Abstract”

- Recently, distant supervision has emerged as an important technique for relation extraction and has attracted increasing attention because of its effective use of readily available databases (Mintz et al., 2009; Bunescu and Mooney, 2007; Snyder and Barzilay, 2007; Wu and Weld, 2007).Page 1, “Introduction”

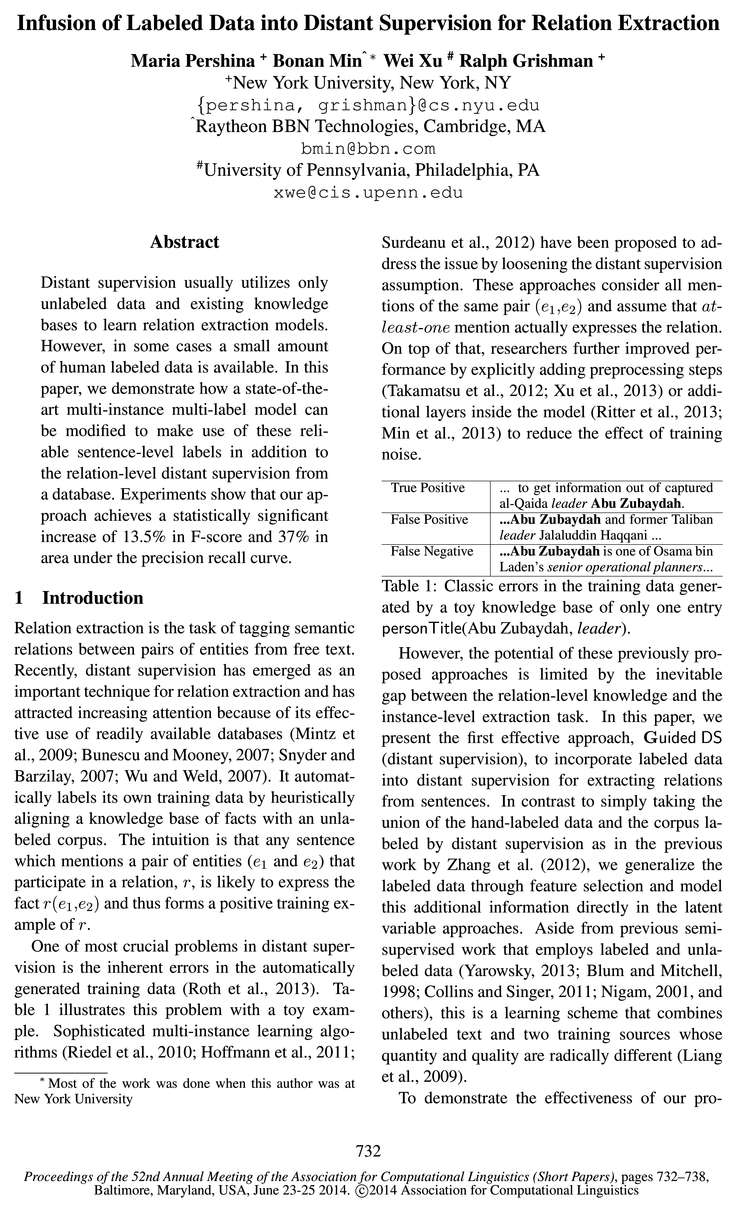

- One of most crucial problems in distant supervision is the inherent errors in the automatically generated training data (Roth et al., 2013).Page 1, “Introduction”

- Surdeanu et al., 2012) have been proposed to address the issue by loosening the distant supervision assumption.Page 1, “Introduction”

- In this paper, we present the first effective approach, Guided DS (distant supervision), to incorporate labeled data into distant supervision for extracting relations from sentences.Page 1, “Introduction”

- In contrast to simply taking the union of the hand-labeled data and the corpus labeled by distant supervision as in the previous work by Zhang et al.Page 1, “Introduction”

- posed approach, we extend MIML (Surdeanu et al., 2012), a state-of-the-art distant supervision model and show a significant improvement of 13.5% in F-score on the relation extraction benchmark TAC-KBP (Ji and Grishman, 2011) dataset.Page 2, “Introduction”

- Simply taking the union of the hand-labeled data and the corpus labeled by distant supervision is not effective since hand-labeled data will be swamped by a larger amount of distantly labeled data.Page 2, “The Challenge”

- Our goal is to jointly model human-labeled ground truth and structured data from a knowledge base in distant supervision .Page 3, “Guided DS”

- We also compare Guided DS with three state-of-the-art models: 1) MultiR and 2) MIML are two distant supervision models that support multi-instance learning and overlapping relations; 3) Mintz++ is a single-instance learning algorithm for distant supervision .Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

See all papers in Proc. ACL 2014 that mention distant supervision.

See all papers in Proc. ACL that mention distant supervision.

Back to top.

labeled data

- However, in some cases a small amount of human labeled data is available.Page 1, “Abstract”

- In this paper, we present the first effective approach, Guided DS (distant supervision), to incorporate labeled data into distant supervision for extracting relations from sentences.Page 1, “Introduction”

- (2012), we generalize the labeled data through feature selection and model this additional information directly in the latent variable approaches.Page 1, “Introduction”

- While prior work employed tens of thousands of human labeled examples (Zhang et al., 2012) and only got a 6.5% increase in F-score over a logistic regression baseline, our approach uses much less labeled data (about 1/8) but achieves much higher improvement on performance over stronger baselines.Page 2, “Introduction”

- Simply taking the union of the hand-labeled data and the corpus labeled by distant supervision is not effective since hand-labeled data will be swamped by a larger amount of distantly labeled data .Page 2, “The Challenge”

- An effective approach must recognize that the hand-labeled data is more reliable than the automatically labeled data and so must take precedence in cases of conflict.Page 2, “The Challenge”

- Instead we propose to perform feature selection to generalize human labeled data into training guidelines, and integrate them into latent variable model.Page 2, “The Challenge”

- Upsam—pling the labeled data did not improve the performance either.Page 4, “Training”

- Thus, our approach outperforms state-of-the-art model for relation extraction using much less labeled data that was used by Zhang et al., (2012) to outper-Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

See all papers in Proc. ACL 2014 that mention labeled data.

See all papers in Proc. ACL that mention labeled data.

Back to top.

relation extraction

- Distant supervision usually utilizes only unlabeled data and existing knowledge bases to learn relation extraction models.Page 1, “Abstract”

- Relation extraction is the task of tagging semantic relations between pairs of entities from free text.Page 1, “Introduction”

- Recently, distant supervision has emerged as an important technique for relation extraction and has attracted increasing attention because of its effective use of readily available databases (Mintz et al., 2009; Bunescu and Mooney, 2007; Snyder and Barzilay, 2007; Wu and Weld, 2007).Page 1, “Introduction”

- 1t Supervision for Relation ExtractionPage 1, “Introduction”

- posed approach, we extend MIML (Surdeanu et al., 2012), a state-of-the-art distant supervision model and show a significant improvement of 13.5% in F-score on the relation extraction benchmark TAC-KBP (Ji and Grishman, 2011) dataset.Page 2, “Introduction”

- Conflicts cannot be limited to those cases where all the features in two examples are the same; this would almost never occur, because of the dozens of features used by a typical relation extractor (Zhou et al., 2005).Page 2, “The Challenge”

- Thus, our approach outperforms state-of-the-art model for relation extraction using much less labeled data that was used by Zhang et al., (2012) to outper-Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

- We show that relation extractors trained with distant supervision can benefit significantly from a small number of human labeled examples.Page 5, “Conclusions and Future Work”

- We show how to incorporate these guidelines into an existing state-of-art model for relation extraction .Page 5, “Conclusions and Future Work”

See all papers in Proc. ACL 2014 that mention relation extraction.

See all papers in Proc. ACL that mention relation extraction.

Back to top.

F-score

- Experiments show that our approach achieves a statistically significant increase of 13.5% in F-score and 37% in area under the precision recall curve.Page 1, “Abstract”

- posed approach, we extend MIML (Surdeanu et al., 2012), a state-of-the-art distant supervision model and show a significant improvement of 13.5% in F-score on the relation extraction benchmark TAC-KBP (Ji and Grishman, 2011) dataset.Page 2, “Introduction”

- While prior work employed tens of thousands of human labeled examples (Zhang et al., 2012) and only got a 6.5% increase in F-score over a logistic regression baseline, our approach uses much less labeled data (about 1/8) but achieves much higher improvement on performance over stronger baselines.Page 2, “Introduction”

- Training MIML on a simple fusion of distantly-labeled and human-labeled datasets does not improve the maximum F-score since this hand-labeled data is swamped by a much larger amount of distant-supervised data of much lower quality.Page 4, “Training”

- Figure 2 shows that our model consistently outperforms all six algorithms at almost all recall levels and improves the maximum F-score by more than 13.5% relative to M | M L (from 28.35% to 32.19%) as well as increases the area under precision-recall curve by more than 37% (from 11.74 to 16.1).Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

- Performance of Guided DS also compares favorably with best scored hand-coded systems for a similar task such as Sun et al., (2011) system for KBP 2011, which reports an F-score of 25.7%.Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

See all papers in Proc. ACL 2014 that mention F-score.

See all papers in Proc. ACL that mention F-score.

Back to top.

latent variable

- (2012), we generalize the labeled data through feature selection and model this additional information directly in the latent variable approaches.Page 1, “Introduction”

- Instead we propose to perform feature selection to generalize human labeled data into training guidelines, and integrate them into latent variable model.Page 2, “The Challenge”

- We introduce a set of latent variables hi which model human ground truth for each mention in the ith bag and take precedence over the current model assignment zi.Page 3, “Guided DS”

- o ZijER U NR: a latent variable that denotes the relation of the jth mention in the ith bagPage 3, “Guided DS”

- 0 hij E R U NR: a latent variable that denotes the refined relation of the mention xijPage 3, “Guided DS”

See all papers in Proc. ACL 2014 that mention latent variable.

See all papers in Proc. ACL that mention latent variable.

Back to top.

knowledge base

- Distant supervision usually utilizes only unlabeled data and existing knowledge bases to learn relation extraction models.Page 1, “Abstract”

- It automatically labels its own training data by heuristically aligning a knowledge base of facts with an unlabeled corpus.Page 1, “Introduction”

- Table 1: Classic errors in the training data generated by a toy knowledge base of only one entry personTit|e(Abu Zubaydah, leader).Page 1, “Introduction”

- Our goal is to jointly model human-labeled ground truth and structured data from a knowledge base in distant supervision.Page 3, “Guided DS”

See all papers in Proc. ACL 2014 that mention knowledge base.

See all papers in Proc. ACL that mention knowledge base.

Back to top.

MaxEnt

- We experimentally tested alternative feature sets by building supervised Maximum Entropy ( MaxEnt ) models using the hand-labeled data (Table 3), and selected an effective combination of three features from the full feature set used by Surdeanu et al., (2011):Page 2, “The Challenge”

- Table 3: Performance of a MaxEnt , trained on hand-labeled data using all features (Surdeanu et al., 2011) vs using a subset of two (types of entities, dependency path), or three (adding a span word) features, and evaluated on the test set.Page 2, “The Challenge”

- —l— Guided DS Semi—MIML —.— DS+upsampling —'— MaxEntPage 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

- Our baselines: 1) MaXEnt is a supervised maximum entropy baseline trained on a human-labeled data; 2) DS+upsamp|ing is an upsampling experiment, where MIML was trained on a mix of a distantly-labeled and human-labeled data; 3) Semi-MIML is a recent semi-supervised extension.Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

See all papers in Proc. ACL 2014 that mention MaxEnt.

See all papers in Proc. ACL that mention MaxEnt.

Back to top.

dependency path

- Each guideline g={gi|i=1,2,3} consists of a pair of semantic types, a dependency path , and optionally a span word and is associated with a particular relation r(g).Page 2, “The Challenge”

- Table 3: Performance of a MaxEnt, trained on hand-labeled data using all features (Surdeanu et al., 2011) vs using a subset of two (types of entities, dependency path ), or three (adding a span word) features, and evaluated on the test set.Page 2, “The Challenge”

- entity types, a dependency path and maybe a span word, if g has one.Page 3, “Guided DS”

See all papers in Proc. ACL 2014 that mention dependency path.

See all papers in Proc. ACL that mention dependency path.

Back to top.

ground truth

- Our goal is to jointly model human-labeled ground truth and structured data from a knowledge base in distant supervision.Page 3, “Guided DS”

- The input to the model consists of (l) distantly supervised data, represented as a list of n bags1 with a vector yi of binary gold-standard labels, either Positive(P) or N egative(N ) for each relation TER; (2) generalized human-labeled ground truth , represented as a set G of feature conjunctions g={gi|i=l,2,3} associated with a unique relation r(g).Page 3, “Guided DS”

- We introduce a set of latent variables hi which model human ground truth for each mention in the ith bag and take precedence over the current model assignment zi.Page 3, “Guided DS”

See all papers in Proc. ACL 2014 that mention ground truth.

See all papers in Proc. ACL that mention ground truth.

Back to top.

logistic regression

- While prior work employed tens of thousands of human labeled examples (Zhang et al., 2012) and only got a 6.5% increase in F-score over a logistic regression baseline, our approach uses much less labeled data (about 1/8) but achieves much higher improvement on performance over stronger baselines.Page 2, “Introduction”

- 2All classifiers are implemented using L2-regularized logistic regression with Stanford CoreNLP package.Page 3, “Training”

- form logistic regression baseline.Page 5, “Available at http://nlp. stanford.edu/software/mimlre. shtml.”

See all papers in Proc. ACL 2014 that mention logistic regression.

See all papers in Proc. ACL that mention logistic regression.

Back to top.