Article Structure

Abstract

Recent work on Chinese analysis has led to large-scale annotations of the internal structures of words, enabling character-level analysis of Chinese syntactic structures.

Introduction

As a lightweight formalism that offers syntactic information to downstream applications such as SMT, dependency grammar has received increasing interest in the syntactic parsing community (McDonald et al., 2005; Nivre and Nilsson, 2005; Carreras et al., 2006; Duan et al., 2007; Koo and Collins, 2010; Zhang and Clark, 2008; Nivre, 2008; Bohnet, 2010; Zhang and Nivre, 2011; Choi and McCallum, 2013).

Character-Level Dependency Tree

Character-level dependencies were first proposed by Zhao (2009).

Topics

dependency parsing

- In this paper, we investigate the problem of character-level Chinese dependency parsing, building dependency trees over characters. (Page 1, “Abstract”)

- Character-level information can benefit downstream applications by offering flexible granularities for word segmentation while improving word-level dependency parsing accuracies. (Page 1, “Abstract”)

- We present novel adaptations of two major shift-reduce dependency parsing algorithms to character-level parsing. (Page 1, “Abstract”)

- Such annotations enable dependency parsing on the character level, building dependency trees over Chinese characters. (Page 1, “Introduction”)

- Character-level dependency parsing is interesting in at least two aspects. (Page 1, “Introduction”)

- In this paper, we investigate character-level Chinese dependency parsing using Zhang et al. (Page 2, “Introduction”)

- There are two dominant transition-based dependency parsing systems, namely the arc-standard and the arc-eager parsers (Nivre, 2008). (Page 2, “Introduction”)

- We study both algorithms for character-level dependency parsing in order to make a comprehensive investigation. (Page 2, “Introduction”)

- For direct comparison with word-based parsers, we incorporate the traditional word segmentation, POS-tagging and dependency parsing stages in our joint parsing models. (Page 2, “Introduction”)

- Experimental results show that the character-level dependency parsing models outperform the word-based methods on all the data sets. (Page 2, “Introduction”)

- We differentiate intra-word dependencies and inter-word dependencies by the arc type, so that our work can be compared with conventional word segmentation, POS-tagging and dependency parsing pipelines under a canonical segmentation standard. (Page 2, “Character-Level Dependency Tree”)
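The arc-standard system builds arcs between the top two stack elements. Below is a minimal word-level illustration in Python. It is our own sketch, not the paper's code. It derives a SHIFT / LEFT-ARC / RIGHT-ARC sequence for a gold projective tree with a standard static oracle, then replays that sequence.

The function names are assumptions, and so is the head-list encoding, in which `heads[i-1]` is the head of token i and 0 is the root. The paper itself decodes with beam search and global learning rather than following a single oracle path.

```python
from typing import List

def arc_standard_oracle(heads: List[int]) -> List[str]:
    """Static oracle: an action sequence that rebuilds a projective tree.

    heads[i-1] is the head of token i (tokens are 1-indexed; 0 is the root).
    """
    n = len(heads)
    head = {d: h for d, h in enumerate(heads, start=1)}
    pending = [0] * (n + 1)  # dependents not yet attached, per node
    for h in heads:
        pending[h] += 1
    stack, buffer, actions = [0], list(range(1, n + 1)), []
    while buffer or len(stack) > 1:
        if len(stack) >= 2:
            s0, s1 = stack[-1], stack[-2]
            if s1 != 0 and head[s1] == s0:  # s1 is a dependent of s0
                actions.append("LEFT-ARC")
                stack.pop(-2)
                pending[s0] -= 1
                continue
            if head[s0] == s1 and pending[s0] == 0:  # s0 is finished and depends on s1
                actions.append("RIGHT-ARC")
                stack.pop()
                pending[s1] -= 1
                continue
        if not buffer:
            raise ValueError("no valid action: tree is not projective")
        actions.append("SHIFT")
        stack.append(buffer.pop(0))
    return actions

def run_arc_standard(n: int, actions: List[str]) -> List[int]:
    """Replay SHIFT / LEFT-ARC / RIGHT-ARC and return the resulting head list."""
    stack, buffer = [0], list(range(1, n + 1))
    head = [0] * (n + 1)
    for a in actions:
        if a == "SHIFT":
            stack.append(buffer.pop(0))
        elif a == "LEFT-ARC":  # second-topmost element becomes a dependent of the top
            dep = stack.pop(-2)
            head[dep] = stack[-1]
        elif a == "RIGHT-ARC":  # topmost element becomes a dependent of the second-topmost
            dep = stack.pop()
            head[dep] = stack[-1]
        else:
            raise ValueError(f"unknown action {a}")
    return head[1:]

print(arc_standard_oracle([2, 0, 2]))
# ['SHIFT', 'SHIFT', 'LEFT-ARC', 'SHIFT', 'RIGHT-ARC', 'RIGHT-ARC']
```

RIGHT-ARC waits until the dependent has collected all of its own dependents. This is why the sketch keeps a `pending` count per node.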

See all papers in Proc. ACL 2014 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

word segmentation

- Character-level information can benefit downstream applications by offering flexible granularities for word segmentation while improving word-level dependency parsing accuracies. (Page 1, “Abstract”)

- Chinese dependency trees were conventionally defined over words (Chang et al., 2009; Li et al., 2012), requiring word segmentation and POS-tagging as preprocessing steps. (Page 1, “Introduction”)

- First, character-level trees circumvent the issue that no universal standard exists for Chinese word segmentation. (Page 1, “Introduction”)

- In the well-known Chinese word segmentation bakeoff tasks, for example, different segmentation standards have been used by different data sets (Emerson, 2005). (Page 1, “Introduction”)

- For direct comparison with word-based parsers, we incorporate the traditional word segmentation, POS-tagging and dependency parsing stages in our joint parsing models. (Page 2, “Introduction”)

- We differentiate intra-word dependencies and inter-word dependencies by the arc type, so that our work can be compared with conventional word segmentation, POS-tagging and dependency parsing pipelines under a canonical segmentation standard. (Page 2, “Character-Level Dependency Tree”)

- The character-level dependency trees hold to a specific word segmentation standard, but are not limited to it. (Page 2, “Character-Level Dependency Tree”)

- A transition-based framework with global learning and beam search decoding (Zhang and Clark, 2011) has been applied to a number of natural language processing tasks, including word segmentation, POS-tagging and syntactic parsing (Zhang and Clark, 2010; Huang and Sagae, 2010; Bohnet and Nivre, 2012; Zhang et al., 2013). (Page 3, “Character-Level Dependency Tree”)

- Both are crucial to well-established features for word segmentation, POS-tagging and syntactic parsing. (Page 3, “Character-Level Dependency Tree”)

- The first two types are traditionally established features for the dependency parsing and joint word segmentation and POS-tagging tasks. (Page 4, “Character-Level Dependency Tree”)

- The word-level dependency parsing features are added when the inter-word actions are applied, and the features for joint word segmentation and POS-tagging are added when the actions PW, SHW and SHC are applied. (Page 4, “Character-Level Dependency Tree”)
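Intra-word and inter-word arcs are distinguished by type. Because of this, the canonical segmentation and a word-level tree can be read off a character-level tree.

The sketch below rests on several assumptions:
- Each character carries a `(head, type)` pair, with types labelled `"intra"` and `"inter"` (our hypothetical labels).
- Every word has exactly one character whose arc leaves the word.
- Characters are grouped with a union-find, and the function does not check that words are contiguous.

The example sentence and its arcs are hypothetical, not taken from CTB.

```python
from typing import Dict, List, Tuple

def to_word_level(chars: str, arcs: List[Tuple[int, str]]) -> Tuple[List[str], List[int]]:
    """Recover words and word-level heads from a character-level tree.

    arcs[i] = (head, arc_type) for character i+1 (1-indexed); head 0 is the root.
    Characters linked by "intra" arcs are grouped into one word.
    """
    n = len(chars)
    parent = list(range(n + 1))  # union-find over character positions

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for dep, (head, kind) in enumerate(arcs, start=1):
        if kind == "intra":
            parent[find(dep)] = find(head)

    groups: Dict[int, List[int]] = {}
    for i in range(1, n + 1):
        groups.setdefault(find(i), []).append(i)
    words = sorted(groups.values(), key=lambda g: g[0])  # left-to-right order

    word_of = {i: w for w, idx in enumerate(words, start=1) for i in idx}
    word_heads = []
    for idx in words:
        # the word's head character is the one whose arc leaves the word
        outer = [i for i in idx if arcs[i - 1][1] == "inter"]
        if len(outer) != 1:
            raise ValueError("each word needs exactly one inter-word arc")
        head_char = arcs[outer[0] - 1][0]
        word_heads.append(0 if head_char == 0 else word_of[head_char])
    return ["".join(chars[i - 1] for i in idx) for idx in words], word_heads

# hypothetical tree for 中国经济发展: 国, 济 and 展 head their words
arcs = [(2, "intra"), (4, "inter"), (4, "intra"), (6, "inter"), (6, "intra"), (0, "inter")]
print(to_word_level("中国经济发展", arcs))  # (['中国', '经济', '发展'], [2, 3, 0])
```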

word-level

- Character-level information can benefit downstream applications by offering flexible granularities for word segmentation while improving word-level dependency parsing accuracies. (Page 1, “Abstract”)

- Moreover, manually annotated intra-word dependencies can give higher word-level dependency accuracies than pseudo intra-word dependencies. (Page 2, “Introduction”)

- Intra-word dependencies can also bring benefits to parsing word-level dependencies. (Page 2, “Character-Level Dependency Tree”)

- When the internal structures of words are annotated, character-level dependency parsing can be treated as a special case of word-level dependency parsing, with “words” being “characters”. (Page 3, “Character-Level Dependency Tree”)

- The word-level dependency parsing features are added when the inter-word actions are applied, and the features for joint word segmentation and POS-tagging are added when the actions PW, SHW and SHC are applied. (Page 4, “Character-Level Dependency Tree”)

- The word-level dependency parsing features are triggered when the inter-word actions are applied, while the features of joint word segmentation and POS-tagging are added when the actions SHC, ARC and PW are applied. (Page 5, “Character-Level Dependency Tree”)

- The word-level features for dependency parsing are applied to intra-word dependency parsing as well, by using subwords to replace words. (Page 5, “Character-Level Dependency Tree”)

- The standard measures of word-level precision, recall and F1 score are used to evaluate word segmentation, POS-tagging and dependency parsing, following Hatori et al. (Page 6, “Character-Level Dependency Tree”)

- The real inter-word dependencies refer to the syntactic word-level dependencies derived from CTB by head-finding rules, while the pseudo inter-word dependencies refer to the word-level dependencies used by Zhao (2009). (Page 6, “Character-Level Dependency Tree”)

- Tuning is conducted by maximizing word-level dependency accuracies. (Page 7, “Character-Level Dependency Tree”)

- We can see that both models achieve better accuracies on word-level dependencies with the novel word-structure features, while the features do not significantly affect word-structure prediction. (Page 7, “Character-Level Dependency Tree”)
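The word-level precision, recall and F1 measures reduce to set comparisons over words identified by their character spans. This sketch rests on our own assumptions and is not the exact scoring script:
- A word is correct for segmentation when its span matches a gold span.
- A word is correct for POS-tagging when its span and tag both match.

The dependency criterion of Hatori et al. is not reproduced, and the example sentences are hypothetical.

```python
from typing import List, Set, Tuple

Span = Tuple[int, int]

def to_spans(words: List[str]) -> List[Span]:
    """Map a segmented sentence to (start, end) character offsets."""
    spans, start = [], 0
    for w in words:
        spans.append((start, start + len(w)))
        start += len(w)
    return spans

def prf(gold: Set, pred: Set) -> Tuple[float, float, float]:
    """Precision, recall and F1 over sets of scored items."""
    correct = len(gold & pred)
    p = correct / len(pred) if pred else 0.0
    r = correct / len(gold) if gold else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f

# hypothetical gold and predicted analyses of the same six characters
gold_words, gold_tags = ["中国", "经济", "发展"], ["NR", "NN", "NN"]
pred_words, pred_tags = ["中国经济", "发展"], ["NN", "NN"]

seg = prf(set(to_spans(gold_words)), set(to_spans(pred_words)))
pos = prf(set(zip(to_spans(gold_words), gold_tags)),
          set(zip(to_spans(pred_words), pred_tags)))
print(seg, pos)  # both give P = 0.5, R = 1/3, F1 = 0.4 (up to rounding)
```

For a whole corpus, the same functions apply if each item is prefixed with its sentence index before the sets are pooled.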

dependency tree

- In this paper, we investigate the problem of character-level Chinese dependency parsing, building dependency trees over characters. (Page 1, “Abstract”)

- Chinese dependency trees were conventionally defined over words (Chang et al., 2009; Li et al., 2012), requiring word segmentation and POS-tagging as preprocessing steps. (Page 1, “Introduction”)

- Such annotations enable dependency parsing on the character level, building dependency trees over Chinese characters. (Page 1, “Introduction”)

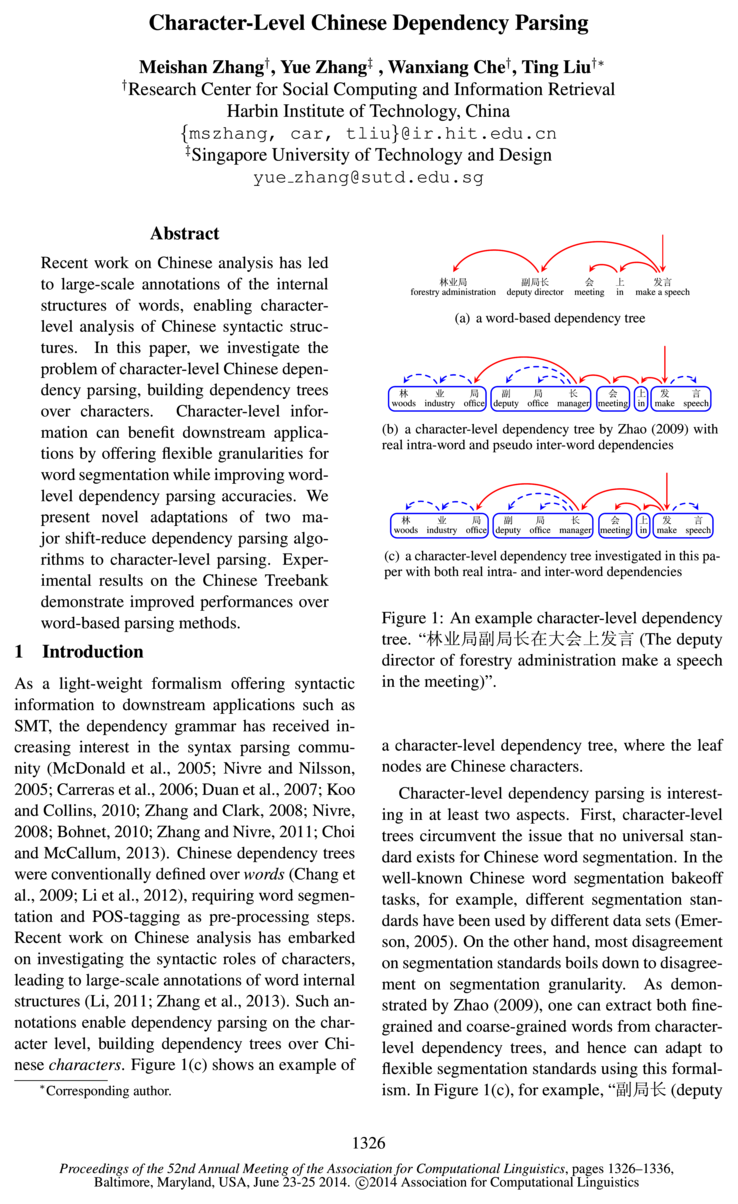

- (a) a word-based dependency tree (Page 1, “Introduction”)

- (b) a character-level dependency tree by Zhao (2009) with real intra-word and pseudo inter-word dependencies (Page 1, “Introduction”)

- (c) a character-level dependency tree investigated in this paper with both real intra- and inter-word dependencies (Page 1, “Introduction”)

- Figure 1: An example character-level dependency tree. (Page 1, “Introduction”)

- A character-level dependency tree, where the leaf nodes are Chinese characters. (Page 1, “Introduction”)

- As demonstrated by Zhao (2009), one can extract both fine-grained and coarse-grained words from character-level dependency trees, and hence can adapt to flexible segmentation standards using this formalism. (Page 1, “Introduction”)

- In this formulation, a character-level dependency tree satisfies the same constraints as the traditional word-based dependency tree for Chinese, including projectivity. (Page 2, “Character-Level Dependency Tree”)

- The character-level dependency trees hold to a specific word segmentation standard, but are not limited to it. (Page 2, “Character-Level Dependency Tree”)
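Projectivity can be tested directly on a head list. With the root placed at position 0, a tree is projective exactly when no two arcs cross.

The quadratic-time sketch below applies unchanged to characters, since character-level trees obey the same constraint. The encoding, in which `heads[i-1]` is the head of position i, is our assumption. The function does not verify that the input is a well-formed tree (single root, no cycles).

```python
from typing import List

def is_projective(heads: List[int]) -> bool:
    """True if no two arcs cross; heads[i-1] is the head of position i, 0 is the root."""
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1)]
    for a, b in arcs:
        for c, d in arcs:
            # (c, d) starts strictly inside (a, b) and ends strictly outside it;
            # checking every ordered pair covers both orientations of a crossing
            if a < c < b < d:
                return False
    return True

print(is_projective([2, 4, 4, 6, 6, 0]))  # True: a hypothetical six-character tree
print(is_projective([3, 4, 0, 3]))        # False: arcs 1-3 and 2-4 cross
```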

parsing models

- For direct comparison with word-based parsers, we incorporate the traditional word segmentation, POS-tagging and dependency parsing stages in our joint parsing models. (Page 2, “Introduction”)

- Experimental results show that the character-level dependency parsing models outperform the word-based methods on all the data sets. (Page 2, “Introduction”)

- (2012) also use Zhang and Clark (2010)’s features, the arc-standard and arc-eager character-level dependency parsing models have the same features for joint word segmentation and POS-tagging. (Page 5, “Character-Level Dependency Tree”)

- The first consists of a joint segmentation and POS-tagging model (Zhang and Clark, 2010) and a word-based dependency parsing model using the arc-standard algorithm (Huang et al., 2009). (Page 6, “Character-Level Dependency Tree”)

- The second consists of the same joint segmentation and POS-tagging model and a word-based dependency parsing model using the arc-eager algorithm (Page 6, “Character-Level Dependency Tree”)

- We study the following character-level dependency parsing models: (Page 6, “Character-Level Dependency Tree”)

- The results demonstrate that the character-level dependency parsing models are significantly better than the corresponding word-based pipeline models, for both the arc-standard and arc-eager systems. (Page 7, “Character-Level Dependency Tree”)

- To better understand the character-level parsing models, we conduct error analysis in this section. (Page 8, “Character-Level Dependency Tree”)

- accuracies in two aspects, including OOV, word length, POS tags and the parsing model. (Page 8, “Character-Level Dependency Tree”)

- 4.5.2 Parsing Model (Page 8, “Character-Level Dependency Tree”)

- ing that the two parsing models can be complementary in parsing intra-word dependencies. (Page 9, “Character-Level Dependency Tree”)

POS tag

- system, each word is initialized by the action SHW with a POS tag, before being incrementally modified by a sequence of intra-word actions, and finally being completed by the action PW. (Page 4, “Character-Level Dependency Tree”)

- L and R denote the two elements over which the dependencies are built; the subscripts lc1 and rc1 denote the leftmost and rightmost children, respectively; the subscripts lc2 and rc2 denote the second leftmost and second rightmost children, respectively; w denotes the word; t denotes the POS tag; g denotes the head character; lsw and rsw denote the smallest left and right subwords, respectively, as shown in Figure 2. (Page 5, “Character-Level Dependency Tree”)

- Since the first element of the queue can be shifted onto the stack by either SH or AR, it is more difficult to assign a POS tag to each word by using a single action. (Page 5, “Character-Level Dependency Tree”)

- The actions ALC and ARC are the same as ALW and ARW, except that they operate on characters, but the SHC operation has a parameter to denote the POS tag of a word. (Page 5, “Character-Level Dependency Tree”)

- Each word is initialized by the action SHC with a POS tag, and then incrementally changed by a sequence of intra-word actions, before being finalized by the action PW. (Page 5, “Character-Level Dependency Tree”)

- The action SHC has a POS tag when shifting the first character of a word, but does not have such a parameter when shifting the subsequent characters of a word. (Page 5, “Character-Level Dependency Tree”)

- For the action SHC with a POS tag to be valid, the first element in the deque must be a full-word node. (Page 5, “Character-Level Dependency Tree”)

- Unlike the arc-standard model, at any stage we can choose either the action SHC with a POS tag to initialize a new word on the deque, or the inter-word actions on the stack. (Page 5, “Character-Level Dependency Tree”)

- accuracies in two aspects, including OOV, word length, POS tags and the parsing model. (Page 8, “Character-Level Dependency Tree”)

- From the above analysis in terms of OOV, word lengths and POS tags, we can see that the EAG (real, real) model and the STD (real, real) model behave similarly on word-structure accuracies. (Page 8, “Character-Level Dependency Tree”)
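The arc-standard word-building steps in the first bullet can be simulated with a small state machine. Those steps are SHW with a POS tag, a run of intra-word actions, and then PW. The sketch below is our simplified reading of these snippets, not the paper's implementation. It assumes the following:
- SHC extends the open word.
- ALC and ARC link two partial nodes of that word.
- ALW and ARW link two completed words.
- The last remaining word attaches to the root.

These preconditions are assumptions. The tags and the action sequence in the usage lines are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

@dataclass
class Node:
    head: int       # head character of the subtree (1-indexed)
    start: int      # first character covered
    end: int        # last character covered
    complete: bool  # False while the word is partial, True after PW

def check(cond: bool, msg: str) -> None:
    if not cond:
        raise ValueError(msg)

def run_char_arc_standard(chars: str, actions: List[Tuple[str, Optional[str]]]):
    """Replay actions; return (arcs, tagged words), where arcs[i] = (head, type) of char i+1."""
    n, nxt = len(chars), 1
    stack: List[Node] = []
    arcs: Dict[int, Tuple[int, str]] = {}
    words: List[Tuple[str, Optional[str]]] = []
    tag: Optional[str] = None  # POS tag given to the open word by SHW
    for name, param in actions:
        if name in ("SHW", "SHC"):
            check(nxt <= n, "no characters left to shift")
            open_word = bool(stack) and not stack[-1].complete
            # SHW starts a new word when none is open; SHC extends the open word
            check(open_word == (name == "SHC"), f"{name} is not valid here")
            if name == "SHW":
                tag = param
            stack.append(Node(nxt, nxt, nxt, False))
            nxt += 1
        elif name == "PW":
            check(bool(stack) and not stack[-1].complete, "PW needs a partial word on top")
            # all characters of the word must already be joined by intra-word arcs
            check(len(stack) == 1 or stack[-2].complete, "intra-word arcs still missing")
            stack[-1].complete = True
            words.append((chars[stack[-1].start - 1:stack[-1].end], tag))
        elif name in ("ALC", "ARC", "ALW", "ARW"):
            check(len(stack) >= 2, f"{name} needs two stack elements")
            s1, s0 = stack[-2], stack[-1]
            inter = name.endswith("W")
            # character actions join partial nodes, word actions join completed words
            check(s0.complete == s1.complete == inter, f"{name} applied to the wrong nodes")
            head, dep = (s0, s1) if name.startswith("AL") else (s1, s0)
            arcs[dep.head] = (head.head, "inter" if inter else "intra")
            stack[-2:] = [Node(head.head, s1.start, s0.end, inter)]
        else:
            raise ValueError(f"unknown action {name}")
    check(nxt > n and len(stack) == 1 and stack[0].complete, "derivation is incomplete")
    arcs[stack[0].head] = (0, "inter")  # the last remaining word attaches to the root
    return [arcs[i] for i in range(1, n + 1)], words

acts = [("SHW", "NR"), ("SHC", None), ("ALC", None), ("PW", None),
        ("SHW", "NN"), ("SHC", None), ("ALC", None), ("PW", None), ("ALW", None),
        ("SHW", "NN"), ("SHC", None), ("ALC", None), ("PW", None), ("ALW", None)]
arcs, words = run_char_arc_standard("中国经济发展", acts)
print(arcs)   # [(2, 'intra'), (4, 'inter'), (4, 'intra'), (6, 'inter'), (6, 'intra'), (0, 'inter')]
print(words)  # [('中国', 'NR'), ('经济', 'NN'), ('发展', 'NN')]
```

Because PW requires every other node on the stack to be complete, at most one word is open at any time. This is also why a single `tag` variable is enough to label each finished word.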

Chinese word

- First, character-level trees circumvent the issue that no universal standard exists for Chinese word segmentation. (Page 1, “Introduction”)

- In the well-known Chinese word segmentation bakeoff tasks, for example, different segmentation standards have been used by different data sets (Emerson, 2005). (Page 1, “Introduction”)

- The results demonstrate that the structures of Chinese words are not difficult to predict, and confirm that Chinese word structures have some common syntactic patterns. (Page 8, “Character-Level Dependency Tree”)

- Zhao (2009) was the first to study character-level dependencies, arguing that since no consistent word boundaries exist in Chinese word segmentation, dependency-based representations of word structures serve as a good alternative to Chinese word segmentation. (Page 9, “Character-Level Dependency Tree”)

- (2012) proposed a joint model for Chinese word segmentation, POS-tagging and dependency parsing, studying the influence of the joint model and character features on parsing. Their model is extended from the arc-standard transition-based model, and can be regarded as an alternative to the arc-standard model of our work when pseudo intra-word dependencies are used. (Page 9, “Character-Level Dependency Tree”)

Chinese word segmentation

- First, character-level trees circumvent the issue that no universal standard exists for Chinese word segmentation. (Page 1, “Introduction”)

- In the well-known Chinese word segmentation bakeoff tasks, for example, different segmentation standards have been used by different data sets (Emerson, 2005). (Page 1, “Introduction”)

- Zhao (2009) was the first to study character-level dependencies, arguing that since no consistent word boundaries exist in Chinese word segmentation, dependency-based representations of word structures serve as a good alternative to Chinese word segmentation. (Page 9, “Character-Level Dependency Tree”)

- (2012) proposed a joint model for Chinese word segmentation, POS-tagging and dependency parsing, studying the influence of the joint model and character features on parsing. Their model is extended from the arc-standard transition-based model, and can be regarded as an alternative to the arc-standard model of our work when pseudo intra-word dependencies are used. (Page 9, “Character-Level Dependency Tree”)

syntactic parsing

- Second, word internal structures can also be useful for syntactic parsing. (Page 2, “Introduction”)

- A transition-based framework with global learning and beam search decoding (Zhang and Clark, 2011) has been applied to a number of natural language processing tasks, including word segmentation, POS-tagging and syntactic parsing (Zhang and Clark, 2010; Huang and Sagae, 2010; Bohnet and Nivre, 2012; Zhang et al., 2013). (Page 3, “Character-Level Dependency Tree”)

- Both are crucial to well-established features for word segmentation, POS-tagging and syntactic parsing. (Page 3, “Character-Level Dependency Tree”)

- (2013) was the first to perform Chinese syntactic parsing over characters. (Page 9, “Character-Level Dependency Tree”)

Feature templates

- Feature templates (Page 5, “Character-Level Dependency Tree”)

- Table 1: Feature templates encoding intra-word dependencies. (Page 5, “Character-Level Dependency Tree”)

- It adjusts the weights of segmentation and POS-tagging features, because the number of feature templates is much smaller for the two tasks than for parsing. (Page 7, “Character-Level Dependency Tree”)
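The templates of Table 1 combine atomic properties of the elements L and R, such as the word w, tag t and head character g. A minimal sketch of how such templates could be instantiated and scored by a linear model is shown below. The atom names (e.g. `L.g`), the templates and the weights are hypothetical, and the paper's actual template set is not reproduced.

```python
from typing import Dict, List

def instantiate(templates: List[List[str]], atoms: Dict[str, str]) -> List[str]:
    """Build one feature string per template whose atoms are all available."""
    feats = []
    for tpl in templates:
        if all(a in atoms for a in tpl):  # skip templates referring to absent nodes
            feats.append("+".join(tpl) + "=" + "|".join(atoms[a] for a in tpl))
    return feats

def score(feats: List[str], weights: Dict[str, float]) -> float:
    """Linear model score: the sum of the weights of the active features."""
    return sum(weights.get(f, 0.0) for f in feats)

# hypothetical atoms for L = 中国 and R = 经济
atoms = {"L.w": "中国", "L.t": "NR", "L.g": "国", "R.w": "经济", "R.t": "NN", "R.g": "济"}
templates = [["L.w"], ["L.g", "R.g"], ["L.w", "R.t"], ["L.lc1.w"]]
feats = instantiate(templates, atoms)
print(feats)  # ['L.w=中国', 'L.g+R.g=国|济', 'L.w+R.t=中国|NN']
print(score(feats, {"L.g+R.g=国|济": 1.5, "L.w=中国": -0.5}))  # 1.0
```

Templates whose atoms are missing are skipped, for example when L has no left child. The last template in the example is dropped for this reason.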

joint model

- (2012) proposed a joint model for Chinese word segmentation, POS-tagging and dependency parsing, studying the influence of the joint model and character features on parsing. Their model is extended from the arc-standard transition-based model, and can be regarded as an alternative to the arc-standard model of our work when pseudo intra-word dependencies are used. (Page 9, “Character-Level Dependency Tree”)

- (2012) investigate a joint model using pseudo intra-word dependencies. (Page 9, “Character-Level Dependency Tree”)

- To our knowledge, we are the first to apply the arc-eager system to joint models and to achieve performance comparable to the arc-standard model. (Page 9, “Character-Level Dependency Tree”)

Treebank

- Experimental results on the Chinese Treebank demonstrate improved performance over word-based parsing methods. (Page 1, “Abstract”)

- Their results on the Chinese Treebank (CTB) showed that character-level constituent parsing can improve performance even with the pseudo word structures. (Page 2, “Introduction”)

- We use the Chinese Penn Treebank 5.0, 6.0 and 7.0 to conduct the experiments, splitting the corpora into training, development and test sets according to previous work. (Page 6, “Character-Level Dependency Tree”)
