Article Structure

Abstract

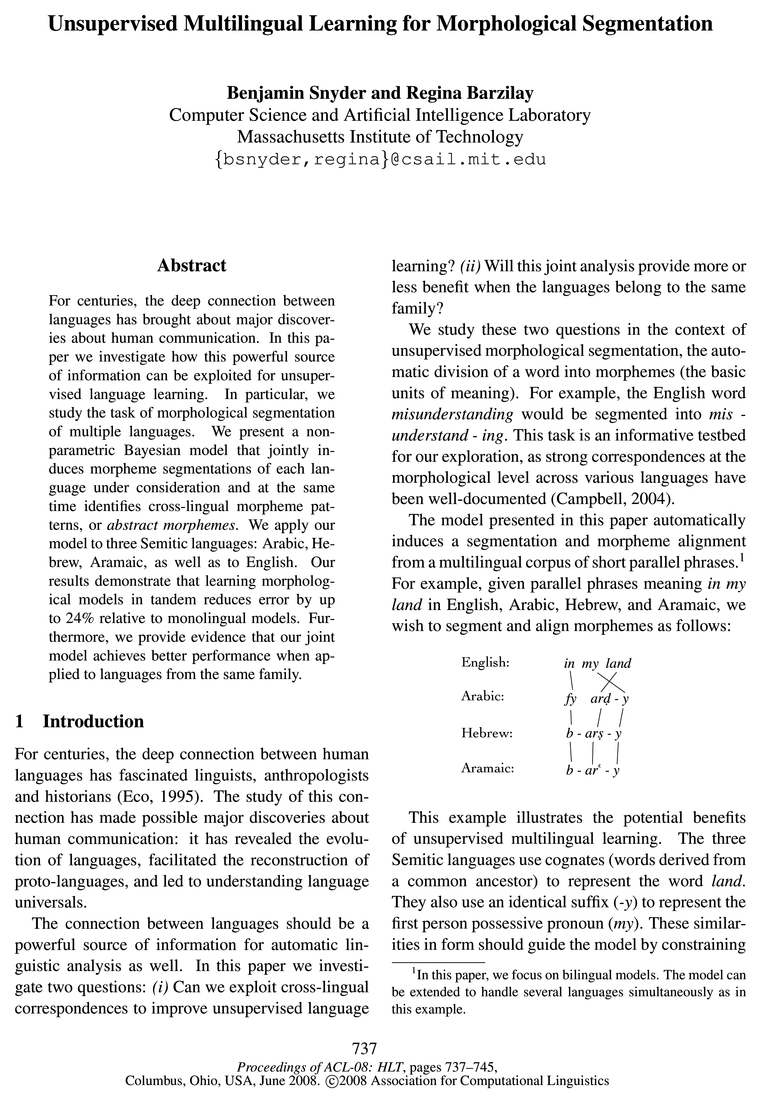

For centuries, the deep connection between languages has brought about major discoveries about human communication.

Introduction

For centuries, the deep connection between human languages has fascinated linguists, anthropologists and historians (Eco, 1995).

Related Work

Multilingual Language Learning Recently, the availability of parallel corpora has spurred research on multilingual analysis for a variety of tasks ranging from morphology to semantic role labeling (Yarowsky et al., 2000; Diab and Resnik, 2002; Xi and Hwa, 2005; Pado and Lapata, 2006).

Multilingual Morphological Segmentation

The underlying assumption of our work is that structural commonality across different languages is a powerful source of information for morphological analysis.

Model

Overview In order to simultaneously model probabilistic dependencies across languages as well as morpheme distributions within each language, we employ a hierarchical Bayesian model.2

Experimental SetUp

Morpheme Definition For the purpose of these experiments, we define morphemes to include conjunctions, prepositional and pronominal affixes, plural and dual suffixes, particles, definite articles, and roots.

Results

Table 1 shows the performance of the various automatic segmentation methods.

Conclusions and Future Work

We started out by posing two questions: (i) Can we exploit cross-lingual patterns to improve unsupervised analysis?

Topics

cross-lingual

- We present a nonparametric Bayesian model that jointly induces morpheme segmentations of each language under consideration and at the same time identifies cross-lingual morpheme patterns, or abstract morphemes.Page 1, “Abstract”

- In this paper we investigate two questions: (i) Can we exploit cross-lingual correspondences to improve unsupervised languagePage 1, “Introduction”

- Our results indicate that cross-lingual patterns can indeed be exploited successfully for the task of unsupervised morphological segmentation.Page 2, “Introduction”

- In the following section, we describe a model that can model both generic cross-lingual patterns (fy and 19-), as well as cognates between related languages (ktb for Hebrew and Arabic).Page 3, “Multilingual Morphological Segmentation”

- The model is fully unsupervised and is driven by a preference for stable and high frequency cross-lingual morpheme patterns.Page 3, “Model”

- Our model utilizes a Dirichlet process prior for each language, as well as for the cross-lingual links (abstract morphemes).Page 4, “Model”

- We started out by posing two questions: (i) Can we exploit cross-lingual patterns to improve unsupervised analysis?Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2008 that mention cross-lingual.

See all papers in Proc. ACL that mention cross-lingual.

Back to top.

segmentations

- We present a nonparametric Bayesian model that jointly induces morpheme segmentations of each language under consideration and at the same time identifies cross-lingual morpheme patterns, or abstract morphemes.Page 1, “Abstract”

- the space of joint segmentations .Page 2, “Introduction”

- For each language in the pair, the model favors segmentations which yield high frequency morphemes.Page 2, “Introduction”

- For word 21) in language 5, we consider at once all possible segmentations , and for each segmentation all possible alignments.Page 6, “Model”

- We are thus considering at once: all possible segmentations of 212 along with all possible alignments involving morphemes in 21) with some subset of previously sampled language-]: morphemes.3Page 6, “Model”

- We obtained gold standard segmentations of the Arabic translation with a handcrafted Arabic morphological analyzer which utilizes manually constructed word lists and compatibility rules and is further trained on a large corpus of hand-annotated Arabic data (Habash and Ram-bow, 2005).Page 7, “Experimental SetUp”

- We don’t have gold standard segmentations for the English and Aramaic portions of the data, and thus restrict our evaluation to Hebrew and Arabic.Page 7, “Experimental SetUp”

See all papers in Proc. ACL 2008 that mention segmentations.

See all papers in Proc. ACL that mention segmentations.

Back to top.

Gibbs sampling

- In practice, we never deal with such distributions directly, but rather integrate over them during Gibbs sampling .Page 4, “Model”

- We achieve these aims by performing Gibbs sampling .Page 6, “Model”

- Sampling We follow (Neal, 1998) in the derivation of our blocked and collapsed Gibbs sampler .Page 6, “Model”

- Gibbs sampling starts by initializing all random variables to arbitrary starting values.Page 6, “Model”

- We also collapse our Gibbs sampler in the standard way, by using predictive posteriors marginalized over all possible draws from the Dirichlet processes (resulting in Chinese Restaurant Processes).Page 6, “Model”

- See (Neal, 1998) for general formulas for Gibbs sampling from distributions with Dirichlet process priors.Page 7, “Model”

See all papers in Proc. ACL 2008 that mention Gibbs sampling.

See all papers in Proc. ACL that mention Gibbs sampling.

Back to top.

language pairs

- When modeled in tandem, gains are observed for all language pairs , reducing relative error by as much as 24%.Page 2, “Introduction”

- Furthermore, our experiments show that both related and unrelated language pairs benefit from multilingual learning.Page 2, “Introduction”

- To obtain our corpus of short parallel phrases, we preprocessed each language pair using the Giza++ alignment toolkit.6 Given word alignments for each language pair , we extract a list of phrase pairs that form independent sets in the bipartite alignment graph.Page 7, “Experimental SetUp”

- However, once character-to-character phonetic correspondences are added as an abstract morpheme prior (final two rows), we find the performance of related language pairs outstrips English, reducing relative error over MONOLINGUAL by 10% and 24% for the Hebrew/Arabic pair.Page 8, “Results”

See all papers in Proc. ACL 2008 that mention language pairs.

See all papers in Proc. ACL that mention language pairs.

Back to top.

morphological analysis

- The underlying assumption of our work is that structural commonality across different languages is a powerful source of information for morphological analysis .Page 3, “Multilingual Morphological Segmentation”

- This Bible edition is augmented by gold standard morphological analysis (including segmentation) performed by biblical scholars.Page 7, “Experimental SetUp”

- We obtained gold standard segmentations of the Arabic translation with a handcrafted Arabic morphological analyzer which utilizes manually constructed word lists and compatibility rules and is further trained on a large corpus of hand-annotated Arabic data (Habash and Ram-bow, 2005).Page 7, “Experimental SetUp”

- The accuracy of this analyzer is reported to be 94% for full morphological analyses , and 98%-99% when part-of-speech tag accuracy is not included.Page 7, “Experimental SetUp”

See all papers in Proc. ACL 2008 that mention morphological analysis.

See all papers in Proc. ACL that mention morphological analysis.

Back to top.

parallel corpus

- Given a parallel corpus , the annotations are projected from this source language to its counterpart, and the resulting annotations are used for supervised training in the target language.Page 2, “Related Work”

- While their approach does not require a parallel corpus it does assume the availability of annotations in one language.Page 2, “Related Work”

- High-level Generative Story We have a parallel corpus of several thousand short phrases in the two languages 5 and .73.Page 4, “Model”

- Once A, E, and F have been drawn, we model our parallel corpus of short phrases as a series of independent draws from a phrase-pair generation model.Page 4, “Model”

See all papers in Proc. ACL 2008 that mention parallel corpus.

See all papers in Proc. ACL that mention parallel corpus.

Back to top.

part-of-speech

- An example of such a property is the distribution of part-of-speech bigrams.Page 2, “Related Work”

- Hana et al., (2004) demonstrate that adding such statistics from an annotated Czech corpus improves the performance of a Russian part-of-speech tagger over a fully unsupervised version.Page 2, “Related Work”

- The accuracy of this analyzer is reported to be 94% for full morphological analyses, and 98%-99% when part-of-speech tag accuracy is not included.Page 7, “Experimental SetUp”

- In the future, we hope to apply similar multilingual models to other core unsupervised analysis tasks, including part-of-speech tagging and grammar induction, and to further investigate the role that language relatedness plays in such models.Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2008 that mention part-of-speech.

See all papers in Proc. ACL that mention part-of-speech.

Back to top.

gold standard

- This Bible edition is augmented by gold standard morphological analysis (including segmentation) performed by biblical scholars.Page 7, “Experimental SetUp”

- We obtained gold standard segmentations of the Arabic translation with a handcrafted Arabic morphological analyzer which utilizes manually constructed word lists and compatibility rules and is further trained on a large corpus of hand-annotated Arabic data (Habash and Ram-bow, 2005).Page 7, “Experimental SetUp”

- We don’t have gold standard segmentations for the English and Aramaic portions of the data, and thus restrict our evaluation to Hebrew and Arabic.Page 7, “Experimental SetUp”

See all papers in Proc. ACL 2008 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

part-of-speech tag

- Hana et al., (2004) demonstrate that adding such statistics from an annotated Czech corpus improves the performance of a Russian part-of-speech tagger over a fully unsupervised version.Page 2, “Related Work”

- The accuracy of this analyzer is reported to be 94% for full morphological analyses, and 98%-99% when part-of-speech tag accuracy is not included.Page 7, “Experimental SetUp”

- In the future, we hope to apply similar multilingual models to other core unsupervised analysis tasks, including part-of-speech tagging and grammar induction, and to further investigate the role that language relatedness plays in such models.Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2008 that mention part-of-speech tag.

See all papers in Proc. ACL that mention part-of-speech tag.

Back to top.

segmentation model

- Our segmentation model is based on the notion that stable recurring string patterns within words are indicative of morphemes.Page 3, “Model”

- We note that these single-language morpheme distributions also serve as monolingual segmentation models , and similar models have been successfully applied to the task of word boundary detection (Goldwater et al., 2006).Page 5, “Model”

- The probabilistic formulation of this model is close to our monolingual segmentation model , but it uses a greedy search specifically designed for the segmentation task.Page 8, “Experimental SetUp”

See all papers in Proc. ACL 2008 that mention segmentation model.

See all papers in Proc. ACL that mention segmentation model.

Back to top.