Article Structure

Abstract

Tree-to-string systems (and their forest-based extensions) have gained steady popularity thanks to their simplicity and efficiency, but there is a major limitation: they are unable to guarantee the grammaticality of the output, which is explicitly modeled in string-to-tree systems via target-side syntax.

Introduction

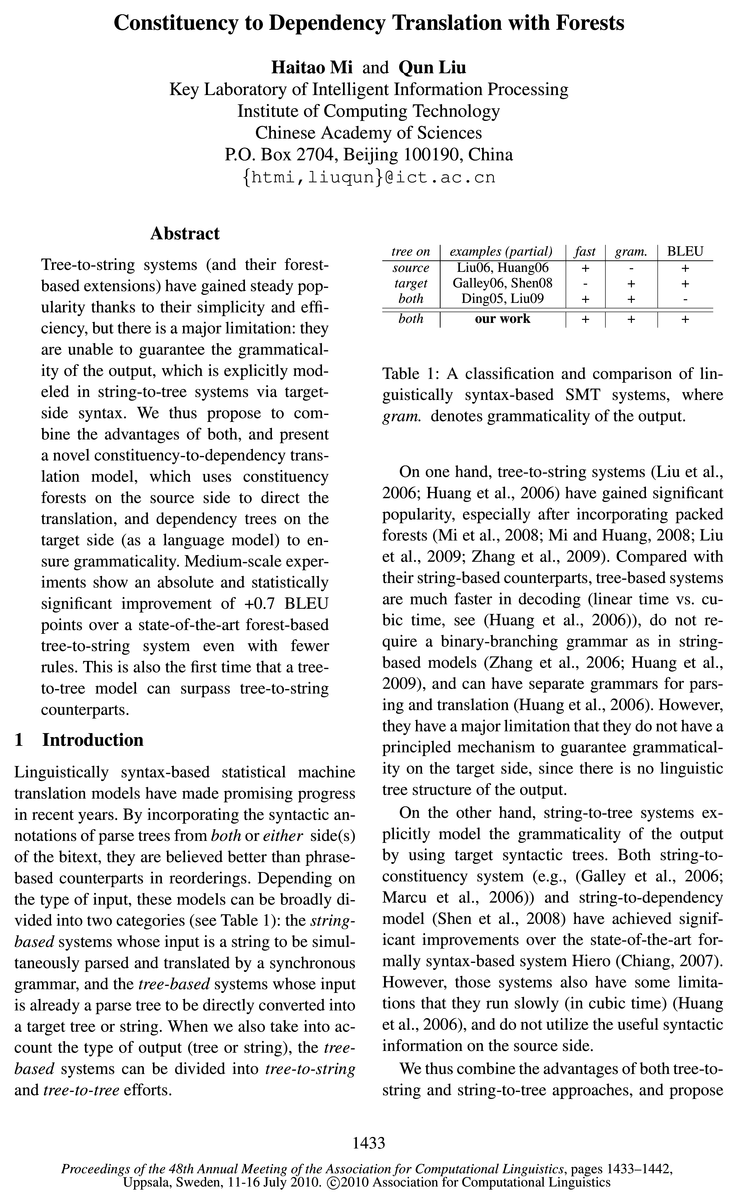

Linguistically syntax-based statistical machine translation models have made promising progress in recent years.

Model

Figure 1 shows a word-aligned source constituency forest FC and target dependency tree De, our constituency to dependency translation model can be formalized as:

Rule Extraction

We extract constituency to dependency rules from word-aligned source constituency forest and target dependency tree pairs (Figure 1).

Decoding

Given a source forest FC, the decoder searches for the best derivation 0* among the set of all possible derivations 0, each of which forms a source side constituent tree TC(0), a target side string 6(0), and

Experiments

5.1 Data Preparation

Related Work

The concept of packed forest has been used in machine translation for several years.

Conclusion and Future Work

In this paper, we presented a novel forest-based constituency-to-dependency translation model, which combines the advantages of both tree-to-string and string-to-tree systems, runs fast and guarantees grammaticality of the output.

Topics

dependency tree

- We thus propose to combine the advantages of both, and present a novel constituency-to-dependency translation model, which uses constituency forests on the source side to direct the translation, and dependency trees on the target side (as a language model) to ensure grammaticality.Page 1, “Abstract”

- a novel constituency-to-dependency model, which uses constituency forests on the source side to direct translation, and dependency trees on the target side to guarantee grammaticality of the output.Page 2, “Introduction”

- Our new constituency-to-dependency model (Section 2) extracts rules from word-aligned pairs of source constituency forests and target dependency trees (Section 3), and translates source constituency forests into target dependency trees with a set of features (Section 4).Page 2, “Introduction”

- Figure 1 shows a word-aligned source constituency forest FC and target dependency tree De, our constituency to dependency translation model can be formalized as:Page 2, “Model”

- 2.2 Dependency Trees on the Target SidePage 2, “Model”

- A dependency tree for a sentence represents each word and its syntactic dependents through directed arcs, as shown in the following examples.Page 2, “Model”

- The main advantage of a dependency tree is that it can explore the long distance dependency.Page 2, “Model”

- We use the lexicon dependency grammar (Hell-wig, 2006) to express a projective dependency tree .Page 3, “Model”

- Take the dependency trees above for example, they will be expressed:Page 3, “Model”

- More formally, a dependency tree is also a pair D6 = Gd 2 (Vd, H d).Page 3, “Model”

- Actually, both the constituency forest and the dependency tree can be formalized as a hypergraph G, a pair (V, H We use Gf and Gd to distinguish them.Page 3, “Model”

See all papers in Proc. ACL 2010 that mention dependency tree.

See all papers in Proc. ACL that mention dependency tree.

Back to top.

BLEU

- Medium-scale experiments show an absolute and statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with fewer rules.Page 1, “Abstract”

- BLEUPage 1, “Introduction”

- Medium data experiments (Section 5) show a statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with less translation rules, this is also the first time that a tree-to-tree model can surpass tree-to-string counterparts.Page 2, “Introduction”

- (2009), their forest-based constituency-to-constituency system achieves a comparable performance against Moses (Koehn et al., 2007), but a significant improvement of +3.6 BLEU points over the 1-best tree-based constituency-to-constituency system.Page 2, “Model”

- We use the standard minimum error-rate training (Och, 2003) to tune the feature weights to maximize the system’s BLEU score on development set.Page 7, “Experiments”

- The baseline system extracts 31.9M 625 rules, 77.9M 525 rules respectively and achieves a BLEU score of 34.17 on the test set3.Page 8, “Experiments”

- As shown in the third line in the column of BLEU score, the performance drops 1.7 BLEU points over baseline system due to the poorer rule coverage.Page 8, “Experiments”

- However, when we further use all 525 rules instead of 52d rules in our next experiment, it achieves a BLEU score of 34.03, which is very similar to the baseline system.Page 8, “Experiments”

- When we only use 62d and 52d rules, our system achieves a BLEU score of 33.25, which is lower than the baseline system in the first line.Page 8, “Experiments”

- This suggests that using dependency language model really improves the translation quality by less than 1 BLEU point.Page 8, “Experiments”

- With the help of the dependency language model, our new model achieves a significant improvement of +0.7 BLEU points over the forest 625 baseline system (p < 0.05, using the sign-test suggested byPage 8, “Experiments”

See all papers in Proc. ACL 2010 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

language model

- We thus propose to combine the advantages of both, and present a novel constituency-to-dependency translation model, which uses constituency forests on the source side to direct the translation, and dependency trees on the target side (as a language model ) to ensure grammaticality.Page 1, “Abstract”

- where the first two terms are translation and language model probabilities, 6(0) is the target string (English sentence) for derivation 0, the third and forth items are the dependency language model probabilities on the target side computed with words and POS tags separately, De (0) is the target dependency tree of 0, the fifth one is the parsing probability of the source side tree TC(0) 6 FC, the ill(0) is the penalty for the number of ill-formed dependency structures in 0, and the last two terms are derivation and translation length penalties, respectively.Page 6, “Decoding”

- For each node, we use the cube pruning technique (Chiang, 2007; Huang and Chiang, 2007) to produce partial hypotheses and compute all the feature scores including the dependency language model score (Section 4.1).Page 7, “Decoding”

- 4.1 Dependency Language Model ComputingPage 7, “Decoding”

- We compute the score of a dependency language model for a dependency tree D6 in the same way proposed by Shen et al.Page 7, “Decoding”

- We use the suffix “§” to distinguish the head word and child words in the dependency language model .Page 7, “Decoding”

- In order to alleviate the problem of data sparse, we also compute a dependency language model for POS tages over a dependency tree.Page 7, “Decoding”

- We calculate this dependency language model by simply replacing each 6, in equation 9 with its tag t(ei).Page 7, “Decoding”

- We also store the POS tag information for each word in dependency trees, and compute two different dependency language models for words and POS tags in dependency tree separately.Page 7, “Experiments”

- We use SRI Language Modeling Toolkit (Stolcke, 2002) to train a 4-gram language model with Kneser-Ney smoothing on the first 1/3 of the Xinhua portion of Giga-word corpus.Page 7, “Experiments”

- This suggests that using dependency language model really improves the translation quality by less than 1 BLEU point.Page 8, “Experiments”

See all papers in Proc. ACL 2010 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

baseline system

- Our baseline system is a state-of-the-art forest-based constituency-to-string model (Mi et al., 2008), or forest 625 for short, which translates a source forest into a target string by pattern-matching thePage 7, “Experiments”

- The baseline system extracts 31.9M 625 rules, 77.9M 525 rules respectively and achieves a BLEU score of 34.17 on the test set3.Page 8, “Experiments”

- At first, we investigate the influence of different rule sets on the performance of baseline system .Page 8, “Experiments”

- Then we convert 62d and 52d rules to 625 and 525 rules separately by removing the target-dependency structures and feed them into the baseline system .Page 8, “Experiments”

- As shown in the third line in the column of BLEU score, the performance drops 1.7 BLEU points over baseline system due to the poorer rule coverage.Page 8, “Experiments”

- However, when we further use all 525 rules instead of 52d rules in our next experiment, it achieves a BLEU score of 34.03, which is very similar to the baseline system .Page 8, “Experiments”

- When we only use 62d and 52d rules, our system achieves a BLEU score of 33.25, which is lower than the baseline system in the first line.Page 8, “Experiments”

- With the help of the dependency language model, our new model achieves a significant improvement of +0.7 BLEU points over the forest 625 baseline system (p < 0.05, using the sign-test suggested byPage 8, “Experiments”

- So our baseline system is much better than the BLEU score (30.6+1) of the constituency-to-constituency system and Moses.Page 8, “Experiments”

See all papers in Proc. ACL 2010 that mention baseline system.

See all papers in Proc. ACL that mention baseline system.

Back to top.

BLEU score

- We use the standard minimum error-rate training (Och, 2003) to tune the feature weights to maximize the system’s BLEU score on development set.Page 7, “Experiments”

- The baseline system extracts 31.9M 625 rules, 77.9M 525 rules respectively and achieves a BLEU score of 34.17 on the test set3.Page 8, “Experiments”

- As shown in the third line in the column of BLEU score , the performance drops 1.7 BLEU points over baseline system due to the poorer rule coverage.Page 8, “Experiments”

- However, when we further use all 525 rules instead of 52d rules in our next experiment, it achieves a BLEU score of 34.03, which is very similar to the baseline system.Page 8, “Experiments”

- When we only use 62d and 52d rules, our system achieves a BLEU score of 33.25, which is lower than the baseline system in the first line.Page 8, “Experiments”

- (2009), with a more larger training corpus (FBIS plus 30K) but no name entity translations (+1 BLEU points if it is used), their forest-based constituency-to-constituency model achieves a BLEU score of 30.6, which is similar to Moses (Koehn et al., 2007).Page 8, “Experiments”

- So our baseline system is much better than the BLEU score (30.6+1) of the constituency-to-constituency system and Moses.Page 8, “Experiments”

- Table 2: Statistics of different types of rules extracted on training corpus and the BLEU scores on the test set.Page 8, “Experiments”

- Using all constituency-to-dependency translation rules and bilingual phrases, our model achieves +0.7 points improvement in BLEU score significantly over a state-of-the-art forest-based tree-to-string system.Page 9, “Conclusion and Future Work”

See all papers in Proc. ACL 2010 that mention BLEU score.

See all papers in Proc. ACL that mention BLEU score.

Back to top.

BLEU points

- Medium-scale experiments show an absolute and statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with fewer rules.Page 1, “Abstract”

- Medium data experiments (Section 5) show a statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with less translation rules, this is also the first time that a tree-to-tree model can surpass tree-to-string counterparts.Page 2, “Introduction”

- (2009), their forest-based constituency-to-constituency system achieves a comparable performance against Moses (Koehn et al., 2007), but a significant improvement of +3.6 BLEU points over the 1-best tree-based constituency-to-constituency system.Page 2, “Model”

- As shown in the third line in the column of BLEU score, the performance drops 1.7 BLEU points over baseline system due to the poorer rule coverage.Page 8, “Experiments”

- This suggests that using dependency language model really improves the translation quality by less than 1 BLEU point .Page 8, “Experiments”

- With the help of the dependency language model, our new model achieves a significant improvement of +0.7 BLEU points over the forest 625 baseline system (p < 0.05, using the sign-test suggested byPage 8, “Experiments”

- (2009), with a more larger training corpus (FBIS plus 30K) but no name entity translations (+1 BLEU points if it is used), their forest-based constituency-to-constituency model achieves a BLEU score of 30.6, which is similar to Moses (Koehn et al., 2007).Page 8, “Experiments”

See all papers in Proc. ACL 2010 that mention BLEU points.

See all papers in Proc. ACL that mention BLEU points.

Back to top.

significant improvement

- Medium-scale experiments show an absolute and statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with fewer rules.Page 1, “Abstract”

- Both string-to-constituency system (e.g., (Galley et al., 2006; Marcu et al., 2006)) and string-to-dependency model (Shen et al., 2008) have achieved significant improvements over the state-of-the-art formally syntax-based system Hiero (Chiang, 2007).Page 1, “Introduction”

- Medium data experiments (Section 5) show a statistically significant improvement of +0.7 BLEU points over a state-of-the-art forest-based tree-to-string system even with less translation rules, this is also the first time that a tree-to-tree model can surpass tree-to-string counterparts.Page 2, “Introduction”

- (2009), their forest-based constituency-to-constituency system achieves a comparable performance against Moses (Koehn et al., 2007), but a significant improvement of +3.6 BLEU points over the 1-best tree-based constituency-to-constituency system.Page 2, “Model”

- With the help of the dependency language model, our new model achieves a significant improvement of +0.7 BLEU points over the forest 625 baseline system (p < 0.05, using the sign-test suggested byPage 8, “Experiments”

- This model shows a significant improvement over the state-of-the-art hierarchical phrase-based system (Chiang, 2005).Page 8, “Related Work”

See all papers in Proc. ACL 2010 that mention significant improvement.

See all papers in Proc. ACL that mention significant improvement.

Back to top.

POS tag

- where the first two terms are translation and language model probabilities, 6(0) is the target string (English sentence) for derivation 0, the third and forth items are the dependency language model probabilities on the target side computed with words and POS tags separately, De (0) is the target dependency tree of 0, the fifth one is the parsing probability of the source side tree TC(0) 6 FC, the ill(0) is the penalty for the number of ill-formed dependency structures in 0, and the last two terms are derivation and translation length penalties, respectively.Page 6, “Decoding”

- In order to alleviate the problem of data sparse, we also compute a dependency language model for POS tages over a dependency tree.Page 7, “Decoding”

- the POS tag information on the target side for each constituency-to-dependency rule.Page 7, “Decoding”

- So we will also generate a POS taged dependency tree simultaneously at the decoding time.Page 7, “Decoding”

- We also store the POS tag information for each word in dependency trees, and compute two different dependency language models for words and POS tags in dependency tree separately.Page 7, “Experiments”

See all papers in Proc. ACL 2010 that mention POS tag.

See all papers in Proc. ACL that mention POS tag.

Back to top.

translation model

- We thus propose to combine the advantages of both, and present a novel constituency-to-dependency translation model , which uses constituency forests on the source side to direct the translation, and dependency trees on the target side (as a language model) to ensure grammaticality.Page 1, “Abstract”

- Linguistically syntax-based statistical machine translation models have made promising progress in recent years.Page 1, “Introduction”

- Figure 1 shows a word-aligned source constituency forest FC and target dependency tree De, our constituency to dependency translation model can be formalized as:Page 2, “Model”

- (2009), we apply forest into a new constituency tree to dependency tree translation model rather than constituency tree-to-tree model.Page 8, “Related Work”

- In this paper, we presented a novel forest-based constituency-to-dependency translation model , which combines the advantages of both tree-to-string and string-to-tree systems, runs fast and guarantees grammaticality of the output.Page 9, “Conclusion and Future Work”

See all papers in Proc. ACL 2010 that mention translation model.

See all papers in Proc. ACL that mention translation model.

Back to top.

parse tree

- By incorporating the syntactic annotations of parse trees from both or either side(s) of the bitext, they are believed better than phrase-based counterparts in reorderings.Page 1, “Introduction”

- Depending on the type of input, these models can be broadly divided into two categories (see Table l): the string-based systems whose input is a string to be simultaneously parsed and translated by a synchronous grammar, and the tree-based systems whose input is already a parse tree to be directly converted into a target tree or string.Page 1, “Introduction”

- A constituency forest (in Figure 1 left) is a compact representation of all the derivations (i.e., parse trees ) for a given sentence under a context-free grammar (Billot and Lang, 1989).Page 2, “Model”

- The solid line in Figure 1 shows the best parse tree , while the dashed one shows the second best tree.Page 2, “Model”

See all papers in Proc. ACL 2010 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

phrase-based

- By incorporating the syntactic annotations of parse trees from both or either side(s) of the bitext, they are believed better than phrase-based counterparts in reorderings.Page 1, “Introduction”

- In contrast to conventional tree-to-tree approaches (Ding and Palmer, 2005; Quirk et al., 2005; Xiong et al., 2007; Zhang et al., 2007; Liu et al., 2009), which only make use of a single type of trees, our model is able to combine two types of trees, outperforming both phrase-based and tree-to-string systems.Page 2, “Introduction”

- Current tree-to-tree models (Xiong et al., 2007; Zhang et al., 2007; Liu et al., 2009) still have not outperformed the phrase-based system Moses (Koehn et al., 2007) significantly even with the help of forests.1Page 2, “Introduction”

- This model shows a significant improvement over the state-of-the-art hierarchical phrase-based system (Chiang, 2005).Page 8, “Related Work”

See all papers in Proc. ACL 2010 that mention phrase-based.

See all papers in Proc. ACL that mention phrase-based.

Back to top.

conditional probabilities

- We use fractional counts to compute three conditional probabilities for each rule, which will be used in the next section:Page 6, “Rule Extraction”

- The conditional probability P(o | TC) is decomposes into the product of rule probabilities:Page 6, “Decoding”

- where the first three are conditional probabilities based on fractional counts of rules defined in Section 3.4, and the last two are lexical probabilities.Page 6, “Decoding”

See all papers in Proc. ACL 2010 that mention conditional probabilities.

See all papers in Proc. ACL that mention conditional probabilities.

Back to top.

machine translation

- Linguistically syntax-based statistical machine translation models have made promising progress in recent years.Page 1, “Introduction”

- The concept of packed forest has been used in machine translation for several years.Page 8, “Related Work”

- (2008) and Mi and Huang (2008) use forest to direct translation and extract rules rather than l-best tree in order to weaken the influence of parsing errors, this is also the first time to use forest directly in machine translation .Page 8, “Related Work”

See all papers in Proc. ACL 2010 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

translation quality

- We evaluate the translation quality using the BLEU-4 metric (Pap-ineni et al., 2002), which is calculated by the script mteval-vllb.pl with its default setting which is case-insensitive matching of n-grams.Page 7, “Experiments”

- Those results suggest that restrictions on 625 rules won’t hurt the performance, but restrictions on 525 will hurt the translation quality badly.Page 8, “Experiments”

- This suggests that using dependency language model really improves the translation quality by less than 1 BLEU point.Page 8, “Experiments”

See all papers in Proc. ACL 2010 that mention translation quality.

See all papers in Proc. ACL that mention translation quality.

Back to top.