Article Structure

Abstract

We address the challenge of generating natural language abstractive summaries for spoken meetings in a domain-independent fashion.

Introduction

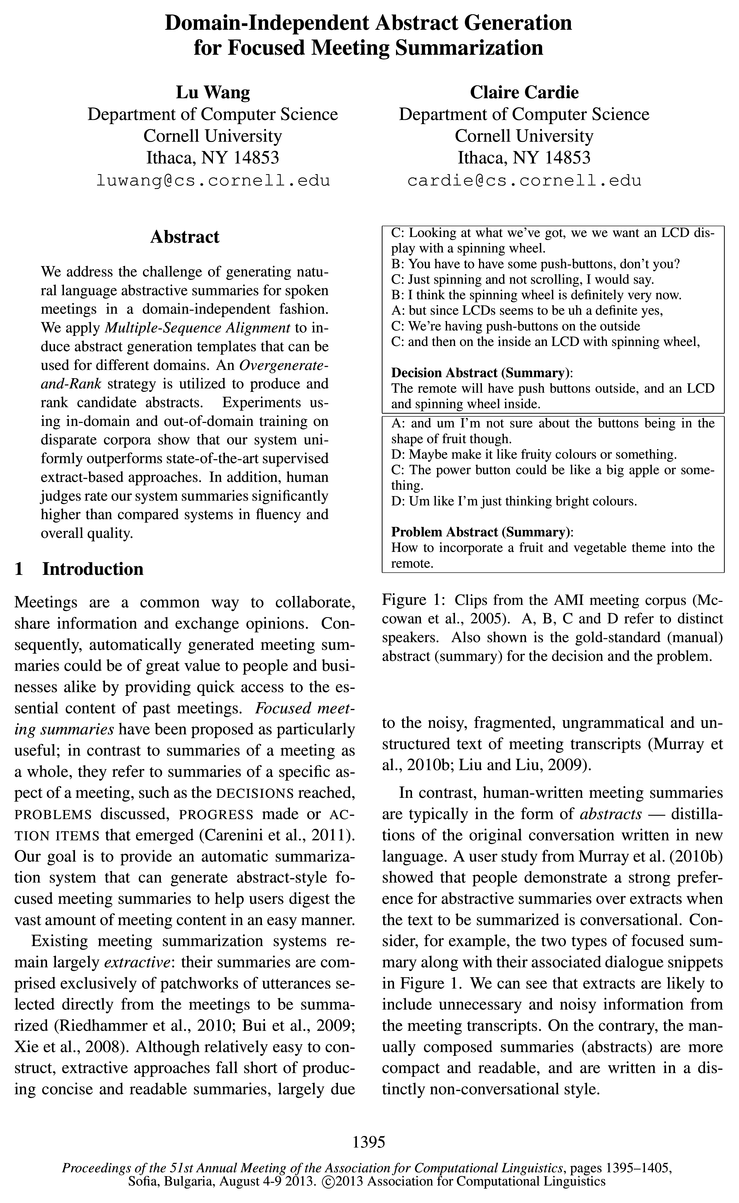

Meetings are a common way to collaborate, share information and exchange opinions.

Related Work

Most research on spoken dialogue summarization attempts to generate summaries for full dialogues (Carenini et al., 2011).

Framework

Our domain-independent abstract generation framework produces a summarizer that generates a grammatical abstract from a cluster of meeting-element-related dialogue acts (DAS) —all utterances associated with a single decision, problem, action item or progress step of interest.

Content Selection

Phrase-based content selection approaches have been shown to support better meeting summaries (Fernandez et al., 2008).

Surface Realization

In this section, we describe surface realization, which renders the relation instances into natural language abstracts.

Experimental Setup

Corpora.

Results

Content Selection Evaluation.

Conclusion

We presented a domain-independent abstract generation framework for focused meeting summarization.

Acknowledgments

This work was supported in part by National Science Foundation Grant IIS-O968450 and a gift from Boeing.

Topics

relation instances

- Given the DA cluster to be summarized, the Content Selection module identifies a set of summary-worthy relation instances represented as indicator-argument pairs (i.e.Page 3, “Framework”

- In the first step, each relation instance is filled into templates with disparate structures that are learned automatically from the training set (Template F ill-ing).Page 3, “Framework”

- A statistical ranker then selects one best abstract per relation instance (Statistical Ranking).Page 3, “Framework”

- Therefore, we chose a content selection representation of a finer granularity than an utterance: we identify relation instances that can both effectively detect the crucial content and incorporate enough syntactic information to facilitate the downstream surface realization.Page 3, “Content Selection”

- More specifically, our relation instances are based on information extraction methods that identify a lexical indicator (or trigger) that evokes a relation of interest and then employ syntactic information, often in conjunction with semantic constraints, to find the argument con-stituent(or target phrase) to be extracted.Page 3, “Content Selection”

- For example, in the DA cluster of Figure 2, (want, an LCD display with a spinning wheel) and (push-buttons, 0n the outside) are two relation instances .Page 4, “Content Selection”

- Relation Instance Extraction We adopt and extend the syntactic constraints from Wang and Cardie (2012) to identify all relation instances in the input utterances; the summary-worthy ones will be selected by a discriminative classifier.Page 4, “Content Selection”

- All relation instances can be categorized as summary-worthy or not, but only the summary-worthy ones are used for abstract generation.Page 4, “Content Selection”

- For training data construction, we consider a relation instance to be a positive example if it shares any content word with its corresponding abstracts, and a negative example otherwise.Page 4, “Content Selection”

- In this section, we describe surface realization, which renders the relation instances into natural language abstracts.Page 4, “Surface Realization”

- Once the templates are learned, the relation instances from Section 4 are filled into the templates to generate an abstract (see Section 5.2).Page 4, “Surface Realization”

See all papers in Proc. ACL 2013 that mention relation instances.

See all papers in Proc. ACL that mention relation instances.

Back to top.

BLEU

- Automatic evaluation (using ROUGE (Lin and Hovy, 2003) and BLEU (Papineni et al., 2002)) against manually generated focused summaries shows that our sum-marizers uniformly and statistically significantly outperform two baseline systems as well as a state-of-the-art supervised extraction-based system.Page 2, “Introduction”

- To evaluate the full abstract generation system, the BLEU score (Papineni et al., 2002) (the precision of uni-grams and bigrams with a breVity penalty) is computed with human abstracts as reference.Page 8, “Results”

- BLEU has a fairly good agreement with human judgement and has been used to evaluate a variety of language generation systems (Angeli et al., 2010; Konstas and Lapata, 2012).Page 8, “Results”

- BLEUPage 8, “Results”

- BLEUPage 8, “Results”

- BLEUPage 8, “Results”

- BLEUPage 8, “Results”

- BLEUPage 8, “Results”

- BLEUPage 8, “Results”

- Figure 5: Full abstract generation system evaluation by using BLEU (multiplied by 100).Page 8, “Results”

- The BLEU scores in Figure 5 show that our system improves the scores consistently over the baselines and the SVM-based approach.Page 8, “Results”

See all papers in Proc. ACL 2013 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

parse tree

- Both the indicator and argument take the form of constituents in the parse tree .Page 4, “Content Selection”

- It takes as input a set of relation instances (from the same cluster) R = {(indi, argi)}i]:1 that are produced by content selection component, a set of templates T = {tj that are represented as parsing trees , a transformation function F (described below), and a statistical ranker S for ranking the generated abstracts, for which we defer description later in this Section.Page 5, “Surface Realization”

- The transformation function F models the constituent-level transformations of relation instances and their mappings to the parse trees of templates.Page 6, “Surface Realization”

- F all-Constitnent Mapping denotes that a source constituent is mapped directly to a target constituent of the template parse tree with the same tag.Page 6, “Surface Realization”

- Moreover, for valid indtkar and argi‘”, the words subsumed by them should be all abstracted in the template, and they do not overlap in the parse tree .Page 6, “Surface Realization”

- To obtain the realized abstract, we traverse the parse tree of the filled template in pre-order.Page 6, “Surface Realization”

See all papers in Proc. ACL 2013 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

in-domain

- The resulting systems yield results comparable to those from the same system trained on in-domain data, and statistically significantly outperform supervised extractive summarization approaches trained on in-domain data.Page 2, “Introduction”

- Table 3 indicates that, with both true clusterings and system clusterings, our system trained on out-of—domain data achieves comparable performance with the same system trained on in-domain data.Page 8, “Results”

- for OUR SYSTEM trained on IN-domain dataPage 8, “Results”

- and OUT-of-domain data, and for the utterance-level extraction system (SVM-DA) trained on in-domain data.Page 9, “Results”

- Judges selected our system as the best system in 62.3% scenarios ( IN-DOMAIN : 35.6%, OUT-OF-DOMAIN: 26.7%).Page 9, “Results”

See all papers in Proc. ACL 2013 that mention in-domain.

See all papers in Proc. ACL that mention in-domain.

Back to top.

clusterings

- In the True Clusterings setting, we use the annotations to create perfect partitions of the DAs for input to the system; in the SystemPage 7, “Experimental Setup”

- Clusterings setting, we employ a hierarchical ag-glomerative clustering algorithm used for this task in (Wang and Cardie, 2011).Page 7, “Experimental Setup”

- Table 3 indicates that, with both true clusterings and system clusterings , our system trained on out-of—domain data achieves comparable performance with the same system trained on in-domain data.Page 8, “Results”

- We randomly select 15 decision and 15 problem DA clusters (true clusterings ).Page 8, “Results”

See all papers in Proc. ACL 2013 that mention clusterings.

See all papers in Proc. ACL that mention clusterings.

Back to top.

content word

- A valid indicator-argument pair should have at least one content word and satisfy one of the following constraints:Page 4, “Content Selection”

- For training data construction, we consider a relation instance to be a positive example if it shares any content word with its corresponding abstracts, and a negative example otherwise.Page 4, “Content Selection”

- number of content words in indicator/argument number of content words that are also in previous DA indicator/argument only contains stopword?Page 4, “Surface Realization”

See all papers in Proc. ACL 2013 that mention content word.

See all papers in Proc. ACL that mention content word.

Back to top.

dependency relation

- dependency relation of indicator and argumentPage 4, “Surface Realization”

- constituent tag of indsrc with constituent tag of indta'" constituent tag of argsm with constituent tag of argt‘" transformation of indsrc/argsm combined with constituent tag dependency relation of indsm and argsrcPage 6, “Surface Realization”

- dependency relation of indta’" and argt‘"Page 6, “Surface Realization”

See all papers in Proc. ACL 2013 that mention dependency relation.

See all papers in Proc. ACL that mention dependency relation.

Back to top.

domain adaptation

- ), we also make initial tries for domain adaptation so that our summarization method does not need human-written abstracts for each new meeting domain (e.g.Page 2, “Introduction”

- Domain Adaptation Evaluation.Page 8, “Results”

- We further examine our system in domain adaptation scenarios for decision and problem summarization, where we train the system on AMI for use on ICSI, and vice versa.Page 8, “Results”

See all papers in Proc. ACL 2013 that mention domain adaptation.

See all papers in Proc. ACL that mention domain adaptation.

Back to top.

I’m

- A: and um I’m not sure about the buttons being in the shape of fruit though.Page 1, “Introduction”

- D: Um like I’m just thinking bright colours.Page 1, “Introduction”

- SVM-DA: and um Im not sure about the buttons being in the shape of fruit though.Page 9, “Conclusion”

See all papers in Proc. ACL 2013 that mention I’m.

See all papers in Proc. ACL that mention I’m.

Back to top.

manual evaluation

- We tune the parameter on a small held-out development set by manually evaluating the induced templates.Page 5, “Surface Realization”

- Note that we do not explicitly evaluate the quality of the learned templates, which would require a significant amount of manual evaluation .Page 5, “Surface Realization”

- formly outperform the state-of—the-art supervised extraction-based systems in both automatic and manual evaluation .Page 9, “Conclusion”

See all papers in Proc. ACL 2013 that mention manual evaluation.

See all papers in Proc. ACL that mention manual evaluation.

Back to top.

natural language

- We address the challenge of generating natural language abstractive summaries for spoken meetings in a domain-independent fashion.Page 1, “Abstract”

- 0 To the best of our knowledge, our system is the first fully automatic system to generate natural language abstracts for spoken meetings.Page 2, “Introduction”

- In this section, we describe surface realization, which renders the relation instances into natural language abstracts.Page 4, “Surface Realization”

See all papers in Proc. ACL 2013 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

objective function

- Input : relation instances R = {(indi,argi>}£f=1, generated abstracts A = {absfijib objective function f , cost function GPage 7, “Surface Realization”

- We employ the following objective function:Page 7, “Surface Realization”

- Algorithm 1 sequentially finds an abstract with the greatest ratio of objective function gain to length, and add it to the summary if the gain is nonnegative.Page 7, “Surface Realization”

See all papers in Proc. ACL 2013 that mention objective function.

See all papers in Proc. ACL that mention objective function.

Back to top.

significantly outperform

- Automatic evaluation (using ROUGE (Lin and Hovy, 2003) and BLEU (Papineni et al., 2002)) against manually generated focused summaries shows that our sum-marizers uniformly and statistically significantly outperform two baseline systems as well as a state-of-the-art supervised extraction-based system.Page 2, “Introduction”

- The resulting systems yield results comparable to those from the same system trained on in-domain data, and statistically significantly outperform supervised extractive summarization approaches trained on in-domain data.Page 2, “Introduction”

- In most experiments, it also significantly outperforms the baselines and the extract-based approaches (p < 0.05).Page 8, “Results”

See all papers in Proc. ACL 2013 that mention significantly outperform.

See all papers in Proc. ACL that mention significantly outperform.

Back to top.

Support Vector

- A discriminative classifier is trained for this purpose based on Support Vector Machines (SVMs) (Joachims, 1998) with an RBF kernel.Page 4, “Content Selection”

- We utilize a discriminative ranker based on Support Vector Regression (SVR) (Smola and Scho'lkopf, 2004) to rank the generated abstracts.Page 6, “Surface Realization”

- We also compare our approach to two supervised extractive summarization methods — Support Vector Machines (J oachims, 1998) trained with the same fea-Page 7, “Experimental Setup”

See all papers in Proc. ACL 2013 that mention Support Vector.

See all papers in Proc. ACL that mention Support Vector.

Back to top.