Article Structure

Introduction

Text summarization beyond extraction requires a semantic representation that abstracts away from words and phrases and from which a summary can be generated (Mani, 2001; Sp'arck-Jones, 2007).

Heuristics-based pattern extraction

In order to be able to work in an Open-IE manner, applicable to different domains, most existing pattern extraction systems are based on linguistically motivated heuristics.

Pattern extraction by sentence compression

Sentence compression is a summarization technique that shortens input sentences preserving the most important content (Grefenstette, 1998; McDonald, 2006; Clarke and Lapata, 2008, inter alia).

Memory-based pattern extraction

Neither heuristics-based, nor compression-based methods provide a guarantee that the extracted pattern is grammatically correct.

Evaluation

5.1 Experimental settings

Conclusions

Most Open-IE systems are based on linguistically-motivated heuristics for learning patterns that express relations between entities or events.

Topics

dependency parse

- Algorithm 1 HEURISTICEXTRACTOR(T, E): heuristically extract relational patterns for the dependency parse T and the set of entities E. 1: /* Global constants /* 2: global Vp, VC,N10,NC 3: Vc <— {subj,nsubj,nsubjpass,dobj,iobj,a:comp, 4: acomp, ewpl, neg, aux, attr, prt} 5: Vp <— {azcomp} 6 7 8 9Page 2, “Introduction”

- (2013), who built well formed relational patterns by extending minimum spanning trees (MST) which connect entity mentions in a dependency parse .Page 3, “Heuristics-based pattern extraction”

- Instead, we chose to modify the method of Filippova and Altun (2013) because it relies on dependency parse trees and does not use any LM scoring.Page 3, “Pattern extraction by sentence compression”

- To this end, we build a trie of dependency trees (which we call a tree-trie) by scanning all the dependency parses in the news trainingPage 4, “Memory-based pattern extraction”

- As a result, we are able to load a trie encoding 400M input dependency parses , 170M distinct nodes and 48M distinct sentence structures in under 10GB of RAM.Page 5, “Memory-based pattern extraction”

- The news have been processed with a to-kenizer, a sentence splitter (Gillick and Favre, 2009), a part-of-speech tagger and dependency parser (Nivre, 2006), a co-reference resolution module (Haghighi and Klein, 2009) and an entity linker based on Wikipedia and Freebase (Milne and Witten, 2008).Page 6, “Evaluation”

See all papers in Proc. ACL 2014 that mention dependency parse.

See all papers in Proc. ACL that mention dependency parse.

Back to top.

Sentence compression

- The first, compression-based method uses a robust sentence compressor with an aggressive compression rate to get to the core of the sentence (Sec.Page 2, “Introduction”

- To the best of our knowledge, this is the first time that this task has been proposed; it can be considered as abstractive sentence compression, in contrast to most existing sentence compression systems which are based on selecting words from the original sentence or rewriting with simpler paraphrase tables.Page 2, “Introduction”

- Sentence compression is a summarization technique that shortens input sentences preserving the most important content (Grefenstette, 1998; McDonald, 2006; Clarke and Lapata, 2008, inter alia).Page 3, “Pattern extraction by sentence compression”

- To our knowledge, this application of sentence compressors is novel.Page 3, “Pattern extraction by sentence compression”

- Sentence compression methods are abundant but very few can be configured to produce output satisfying certain constraints.Page 3, “Pattern extraction by sentence compression”

- In our case, sentence compressors which formulate the compression task as an optimization problem and solve it with integer linear programming (ILP) tools under a number of constraints are particularly attractive (Clarke and Lapata, 2008; Filippova and Altun, 2013).Page 3, “Pattern extraction by sentence compression”

See all papers in Proc. ACL 2014 that mention Sentence compression.

See all papers in Proc. ACL that mention Sentence compression.

Back to top.

dependency tree

- 1) is formulated over weighted edges in a transformed dependency tree and is subject to a number of constraints.Page 3, “Pattern extraction by sentence compression”

- To this end, we build a trie of dependency trees (which we call a tree-trie) by scanning all the dependency parses in the news trainingPage 4, “Memory-based pattern extraction”

- Algorithm 2 STORE(T, I): store the dependency tree T in the tree-trie I. : /* Entry p0int/* L <— T.LINEARIZE() STORERECURSION(I.R00T(), L, 0) return M /* Procedures /* : procedure STORERECURSION(n, L, 0) ifo 2: L.LENGTH() then n.ADDTREESTRUCTURE(L.STRUCTURE()) return 10: if not n.HAsCHILD(L.TOKEN(o)) then 11: n.ADDCHILD(L.TOKEN(o))Page 4, “Memory-based pattern extraction”

- First, each dependency tree (a) is linearized, resulting in a data structure that consists of two aligned sequences (b).Page 4, “Memory-based pattern extraction”

- At lookup time, we want to use the tree-trie to identify all sub- graphs of an input dependency tree T that match at least a complete observed sentence.Page 5, “Memory-based pattern extraction”

See all papers in Proc. ACL 2014 that mention dependency tree.

See all papers in Proc. ACL that mention dependency tree.

Back to top.

news article

- Then, the same extraction method is used to collect patterns from sentences in never-seen-before news articles .Page 2, “Introduction”

- Given the title or first sentence of a news article , run the same pattern extraction method that was used in training and, if possible, obtain a pattern p involving some entities.Page 7, “Evaluation”

- Replace the entity placeholders in the top-scored patterns pj with the entities that were actually mentioned in the input news article .Page 7, “Evaluation”

- Compared to this model, with heuristics we can obtain patterns for more than twice more news articles .Page 8, “Evaluation”

See all papers in Proc. ACL 2014 that mention news article.

See all papers in Proc. ACL that mention news article.

Back to top.

edge weight

- Edge weight is defined as a linear function over a feature set: ’LU(€) = w - f(e).Page 3, “Pattern extraction by sentence compression”

- Since we consider compressions with different lengths as candidates, from this set we select the one with the maximum averaged edge weight as the final compression.Page 4, “Pattern extraction by sentence compression”

- Unlike it, the compression-based method keeps the essential prepositional phrase for divorce in the pattern because the average edge weight is greater for the tree with the prepositional phrase.Page 4, “Pattern extraction by sentence compression”

See all papers in Proc. ACL 2014 that mention edge weight.

See all papers in Proc. ACL that mention edge weight.

Back to top.

entity mentions

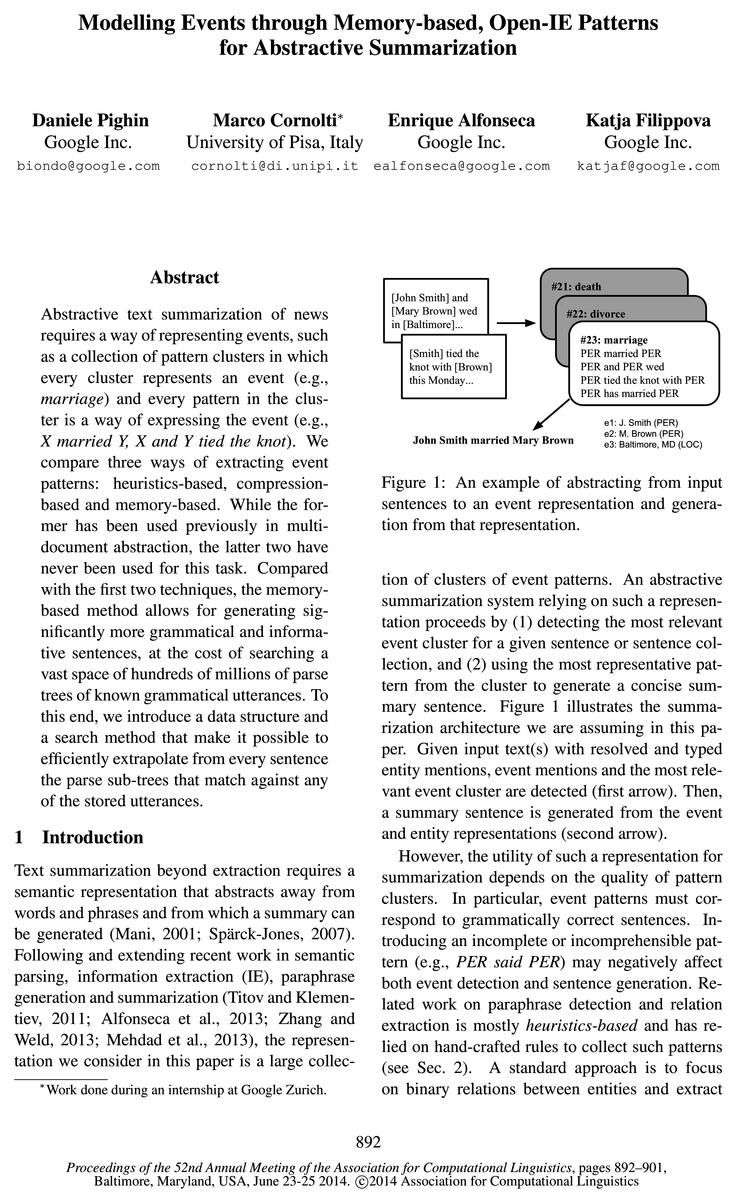

- Given input text(s) with resolved and typed entity mentions , event mentions and the most relevant event cluster are detected (first arrow).Page 1, “Introduction”

- (2013), who built well formed relational patterns by extending minimum spanning trees (MST) which connect entity mentions in a dependency parse.Page 3, “Heuristics-based pattern extraction”

- entity mentions must be retained, .Page 3, “Pattern extraction by sentence compression”

See all papers in Proc. ACL 2014 that mention entity mentions.

See all papers in Proc. ACL that mention entity mentions.

Back to top.

LM

- The method of Clarke and Lapata (2008) uses a trigram language model ( LM ) to score compressions.Page 3, “Pattern extraction by sentence compression”

- Since we are interested in very short outputs, a LM trained on standard, uncompressed text would not be suitable.Page 3, “Pattern extraction by sentence compression”

- Instead, we chose to modify the method of Filippova and Altun (2013) because it relies on dependency parse trees and does not use any LM scoring.Page 3, “Pattern extraction by sentence compression”

See all papers in Proc. ACL 2014 that mention LM.

See all papers in Proc. ACL that mention LM.

Back to top.

parse tree

- The input to the algorithm are a parse tree T and a set of target entities E. We first generate combinations of 1-3 elements of E (line 10), then for each combination 0 we identify all the nodes in T that mention any of the entities in C. We continue by constructing the MST of these nodes, and finally apply our heuristics to the nodes in the MST.Page 3, “Heuristics-based pattern extraction”

- Instead, we chose to modify the method of Filippova and Altun (2013) because it relies on dependency parse trees and does not use any LM scoring.Page 3, “Pattern extraction by sentence compression”

- We highlighted in bold the path corresponding to the linearized form (b) of the example parse tree (a).Page 4, “Memory-based pattern extraction”

See all papers in Proc. ACL 2014 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

Time complexity

- Figure 5: Time complexity of lookup operations for inputs of different sizes.Page 6, “Memory-based pattern extraction”

- Time complexity of lookups.Page 6, “Memory-based pattern extraction”

- We discuss the theoretical time complexity of looking up extraction patterns in a large corpus ofPage 9, “Conclusions”

See all papers in Proc. ACL 2014 that mention Time complexity.

See all papers in Proc. ACL that mention Time complexity.

Back to top.